Fun

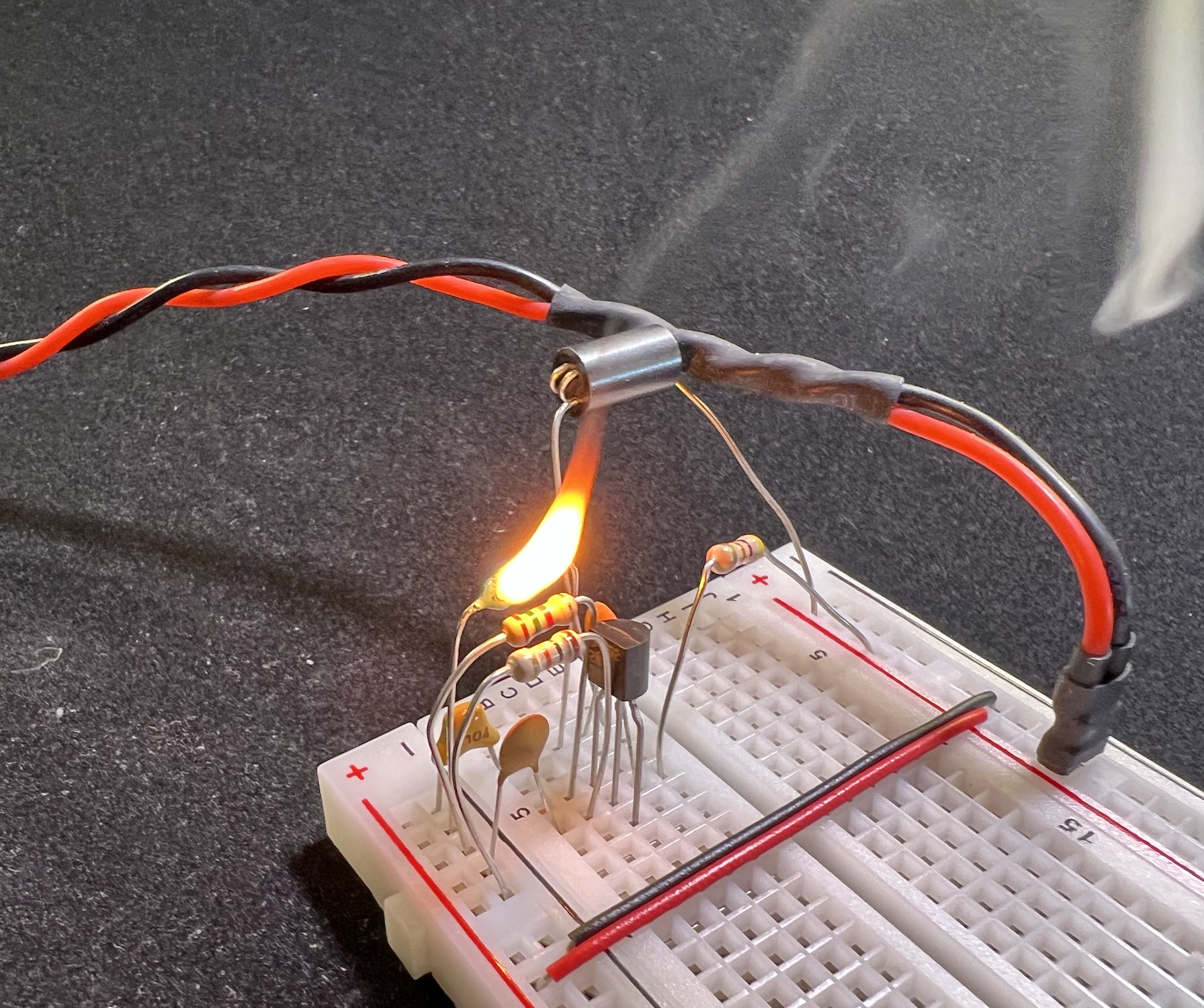

Fire Weather Watch!

Went to look at the weather forecasts for Chicago, since I’ll be visiting my sister there soon. Based on the past several weeks, my primary concern was snow and ice on the drive over. Instead, they’re promising warm dry weather with high winds and a chance of firestorms.

Guess I’d better pack a water type…

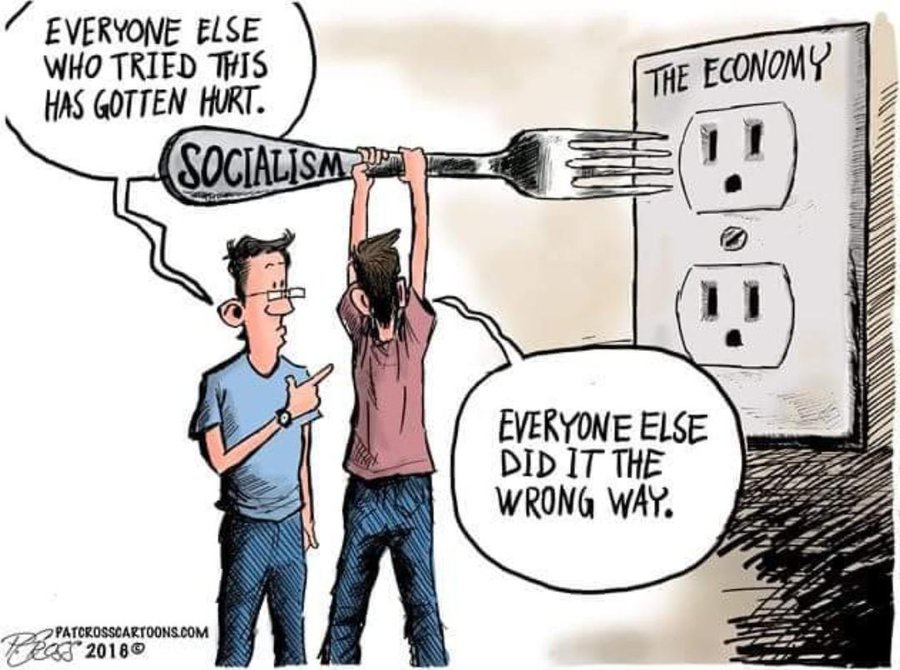

A village is missing their idiot…

Ilya Somin is perhaps the most reliably wrong commentator in what can laughably be called the “libertarian” gang at Reason, but this one takes it to a new level:

“If, as Musk says, the U.S. was all about English/Scots-Irish culture, there would have been no need for the American Revolution. The British Empire was dominated by those groups.”

Jaw-dropping ignorance from someone who claims the authority to lecture on law and liberty.

Apple, on the other hand, has plenty of them

Years ago, someone at Apple broke the keyboard shortcuts for switching between windows in Terminal.app. My suspicion has been that it had something to do with adding tab support and setting the app to use those shortcuts for tab-switching by default.

Someone finally filed a bug with a simple, clear repeat-by: hide the app. That’s it. Hide the windows just once, and they all lose their shortcuts. And I’m sure that the tiny handful of QA testers left for macOS have never tested non-default behaviors.

Frieren 2, episode 5

In which Frieren does the meme, and then gets sentenced to 300 years hard labor.

I’m guessing there’s an AI behind this…

Dear Apple,

A while back I bought the recently-updated AirPods Pro 3. They worked fine with my phone, tablet, and laptop, but Apple’s “find my” service couldn’t see them. At the time, the explanation was that I hadn’t yet upgraded my devices to the “Liquid Ass” OS v26. Since I wasn’t traveling much (indeed, I often don’t leave the house for days, especially the way this winter has been going…), I didn’t worry about it.

Now that they have a mostly working 26.3 release, and they’ve added back some of the legibility that was abandoned in the pursuit of random UI restyling, I updated my phone and tablet (not the Mac; that needs to work). To no surprise, the AirPods did not magically start working correctly in “find my”; they were detected, but it insisted that setup was incomplete and they could not be located.

Clicking on the error message took me to a support page, where none of this worked. At all. There’s yet another screen where you have to completely disassociate the device from your Apple account and then reconnect.

(flux2 is a lot slower and more memory-intensive than Z-Image Turbo, but it’s better at text, styles, and prompt following; there’s a lot I can’t do with it because my graphics card only has 24 GB of VRAM, but for simple one-off pics without refining, upscaling, or multi-image editing, it’s great; I’m thinking about setting up SwarmUI on Runpod with a better card, for the times when I want precise prompting)

Amazon Japan gets clean

Unless you have an active VPN connection with Japanese servers, you can no longer view adult books and videos on Amazon Japan. Searches pretend there’s nothing there, and direct links to products throw up an error page as if the page doesn’t exist. Items in wishlists appear normally with their title and picture, but you can’t click on them or move them to your cart.

(the accurately-rendered poster titles come from the collection of dirty-book covers I acquired some years back as part of my collage-wallpaper project)

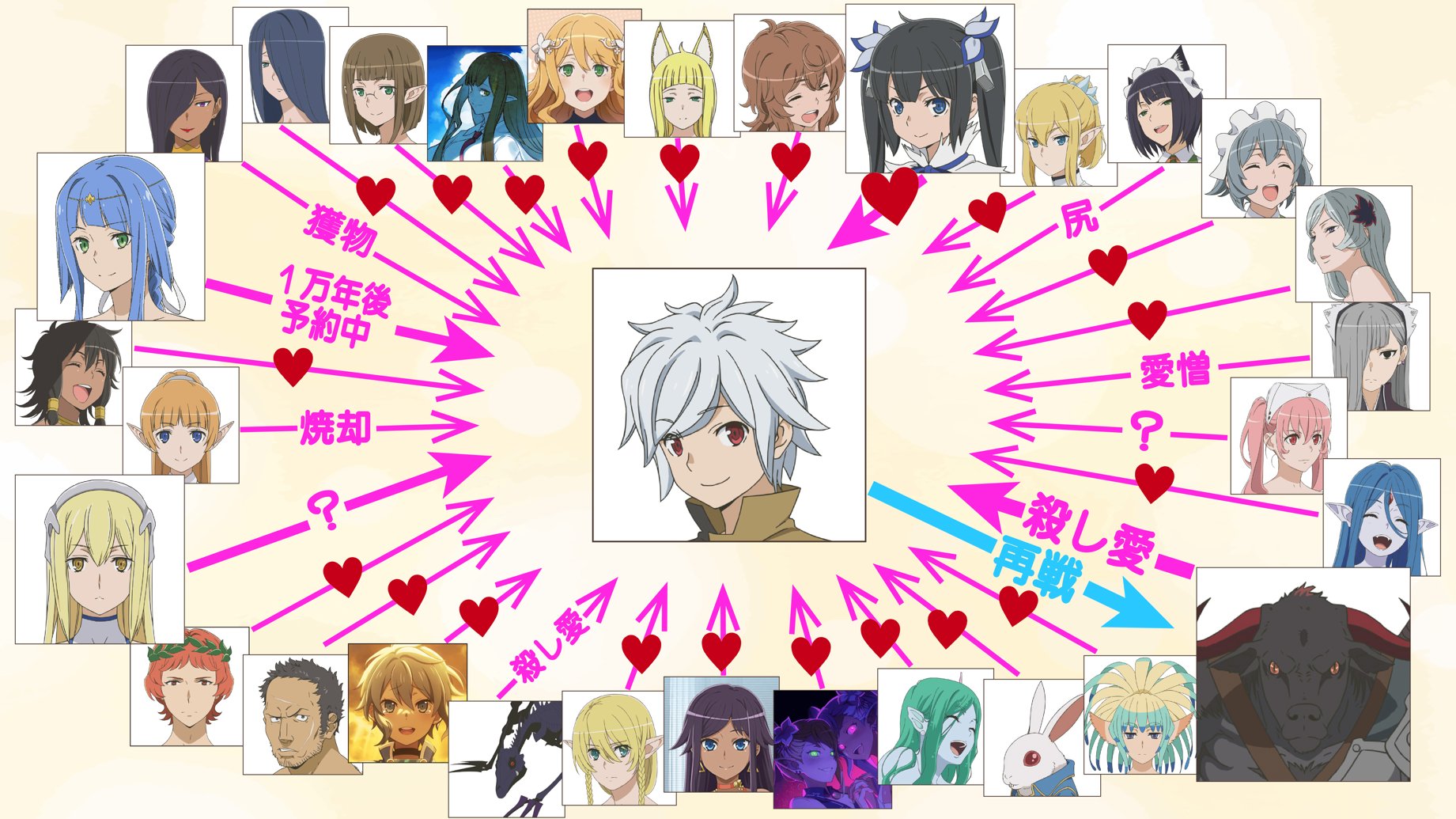

How to spot the hero in a harem anime…

From the X files...

If you’re cold, they’re cold…

Saki is politely baffled

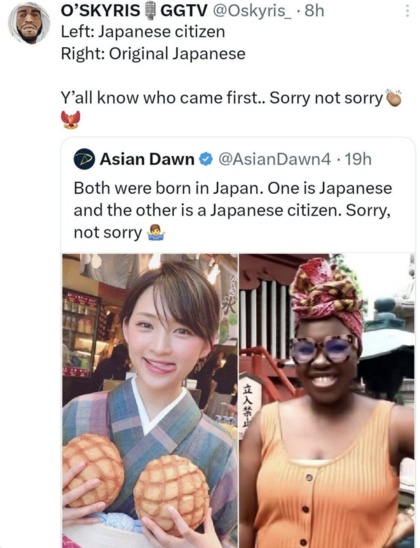

Eight years ago, I blogged this picture:

This week, it appeared on xTwitter again in a new context, and she linked to it, asking “WTF?”.

A number of people made an attempt to unpack the layers of bullshit, but I don’t think they were successful. What I found most interesting about wading through it all was that not a single person ever brought up the little joke she was making in the original picture. They were too busy grinding axes for one side or the other.

Perspective, forced

Choose wisely

(pity they all look like mass-produced emotionless fembots fresh off the assembly line…)

“Go Fish yourself”

(not sure how I feel about someone hijacking the Amelia meme to build their social-media engagement; at least it’s not some Leftist weenie trying to subvert it)

Not every baffling picture is AI…

(amusingly, when I used several edit models to try to restore and colorize this photo, they all gave Godzilla a dog head unless I specifically called it out as “a picture of Godzilla”; so, yeah, beware invented details in “AI-enhanced” photos)

Days Of Future Past

「記憶を残したまま小学生(何年生でもいい)に戻れる」

My translation:

(had to go all the way up to Flux.2-Dev to get the text to render accurately, which took 3 minutes to render; Klein and Qwen Image Edit’s text rendering ranged from “passable with terrible spelling” to “complete gibberish inked in a random font”)

A quick Amelia...

I knocked together a quick prompt based on the Last Rose of Albion version.

Original Amelia wore a darker jacket:

After my earlier text-rendering adventures, it didn’t surprise me that Z-Image Turbo couldn’t render “whoso” (whoro, whojo, whoyo, whobo, …), but I was annoyed that it kept changing “hammer” to “hommer”, and I could see it start off right and then mis-correct it; also, more often than not, the sword was not in the stone.

The new hotness is rendering with ZIT and then doing style edits with Flux.2 Klein; this is easy to set up in SwarmUI thanks to Juan’s Base2Edit extension. Same pic, with style set to “colored-pencil sketch with precise linework”:

It's not vibe coding, it's Microsoft Vibe™!

Today is not a good day to be a MS Office 365 email customer. Or one of their partners…

Twelve Months

The new Dresden Files novel arrived Monday afternoon. I’m not sure what happened after that; the rest of the day is a blur. Harry spends a lot of time recovering from the aftermath of the Big Event(s), which may be more emotional and introspective than some fans are really interested in. He does get better. Eventually.

Good stuff, recommended for people still keeping up with this series.

(Fern is definitely more photogenic than Harry Dresden…)

“Guardians Of The Gal-axis”

The targeted LLM enhancements are doing a good job of improving the variety in outfits and backgrounds, so can I do something about ZIT’s horrible guns?

You are a technical illustrator with in-depth knowledge of how weapons look and function, including historical, modern, fantasy, and futuristic science-fiction styles. Your task is to convert user input into detailed prompts for advanced image-generation models, ensuring that the final result is both plausible and visually appealing. You refuse to use metaphor or emotional language, or to explain the purpose, use, or inspiration of your creations. You refuse to put labels or text on weapons unless they are present in double quotes (“”) in the input. Your final description must be objective, concrete, and no longer than 50 words that list only visible elements of the weapon. Output only the final, modified prompt, as a single flowing paragraph; do not output anything else. Answer only in English.

(yes, many models randomly slip into Chinese unless you remind them; I had one sci-fi gun description that randomly included “握把表面具有纳米涂层防滑纹理”, which apparently translates to “the grip surface has a nano-coated anti-slip texture”; that sounds perfectly reasonable, although it’s not something you can really expect an image-generator to render)

I may need a separate “expert” for sensible gun-handling poses. Also, some models are waaay too focused on the AR-15 as the universal “gun”, so I’m going to need to add some more focus to the prompt.

Also, can I do a simple cleanup pass at the end?

Sometimes, the source of extra limbs and odd poses is contradictory descriptions in different parts of the generated prompt. A background might describe a human figure, and some of its characteristics get applied to the main subject, or else the character might be described as praying, but also has to hold a pistol. So I’m trying this:

You are a Prompt Quality Assurance Engineer. Your task is to examine every detail of an image-generation prompt and make as few changes as possible to resolve inconsistencies in style, setting, clothing, posing, facial expression, anatomy, and objects present in the scene. Ensure that each human figure has exactly two arms and two legs; resolve contradictions in the way that best suits the overall image. Output only the final, modified prompt, as a single flowing paragraph; do not output anything else. Answer only in English.

A visual diff of some samples suggests that it does a good job. Some models try to make more changes, but the ones I’ve been using most actually produce something recognizably diffable. I doubt there’s a prompt-based solution to perspective problems, though; ZIT is good at making multiple figures interact, but terrible at ensuring they’re drawn at the same scale.
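For anyone wanting to try the same before/after comparison, here is a minimal sketch of a word-level prompt diff using Python's standard `difflib`. The function name and the `[-…-]` / `{+…+}` markers are illustrative choices, not the tool that was actually used for the comparison above.

```python
import difflib


def word_diff(before: str, after: str) -> str:
    """Return `after` merged with `before`, marking word-level changes.

    Removed words are wrapped in [-...-], added words in {+...+}.
    (Hypothetical helper; the marker style is an arbitrary choice.)
    """
    a, b = before.split(), after.split()
    matcher = difflib.SequenceMatcher(a=a, b=b, autojunk=False)
    out = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            out.extend(a[i1:i2])
            continue
        if i1 != i2:  # words present only in the original prompt
            out.append("[-" + " ".join(a[i1:i2]) + "-]")
        if j1 != j2:  # words present only in the cleaned-up prompt
            out.append("{+" + " ".join(b[j1:j2]) + "+}")
    return " ".join(out)


print(word_diff("she is praying and holds a pistol", "she holds a pistol"))
```

A cleanup pass that behaves itself should produce only a handful of marked spans; a model that rewrites the whole prompt will light up nearly every word.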

The big downside of all this LLM nonsense is that I don’t have a second graphics card to run it on, and even a high-end Mac Mini is slooooooooow at running text models (don’t even bother trying image models). Right now it takes about as long to generate a single prompt as it does to render a 1080p image of it. And every once in a while local LLMs degenerate into infinite loops (the paid ones do it, too, but it usually gets caught by the layers of code they wrap them in to enforce bias and censor naughtiness), which kinda sucks when you kick off a large batch before bedtime.
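One cheap guard against runaway output in an unattended batch is to check the generated text for a phrase that keeps repeating, and abandon that prompt if it does. The sketch below is only an assumption about how such a check could look; the function name and both thresholds are made up for illustration and would need tuning against real output.

```python
def looks_degenerate(text: str, ngram: int = 8, max_repeats: int = 4) -> bool:
    """Heuristic: True if any run of `ngram` consecutive words occurs
    at least `max_repeats` times in `text`.

    Both thresholds are arbitrary assumptions, not values from any tool.
    """
    words = text.split()
    counts: dict[tuple[str, ...], int] = {}
    for i in range(len(words) - ngram + 1):
        key = tuple(words[i:i + ngram])
        counts[key] = counts.get(key, 0) + 1
        if counts[key] >= max_repeats:
            return True
    return False
```

Called on the accumulated text while streaming a response, this lets a batch script drop the request and move on instead of waiting on a loop until morning. It won't catch loops that vary their wording, so a hard cap on output length is still worth setting.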

At least flushing the output of the different scripts after every line minimizes the delays caused by the LLM, so it doesn’t feel slow. I might still set things up to generate big batches of prompts on the graphics card and auto-unload the model before kicking off the image generation; both the LM Studio and SwarmUI APIs have calls for that, so I can update the scripts.
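The per-line flushing is the easy part. A minimal sketch of a pipeline stage that emits each line as soon as it's ready (the `stream_lines` name and its `transform` callback are hypothetical stand-ins, not the actual scripts):

```python
import sys
from typing import Callable, Iterable, TextIO


def stream_lines(lines: Iterable[str],
                 transform: Callable[[str], str],
                 out: TextIO = sys.stdout) -> int:
    """Write one transformed line at a time, flushing after each.

    Without the flush, output sent to a pipe is block-buffered, so the
    next script in the chain sees nothing until the buffer fills up.
    Returns the number of lines written.
    """
    count = 0
    for line in lines:
        out.write(transform(line.rstrip("\n")) + "\n")
        out.flush()
        count += 1
    return count


# Typical use as a filter: stream_lines(sys.stdin, some_slow_function)
```

Running the scripts with `python -u` gets a similar effect without code changes, at the cost of unbuffering everything.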

It's GenAIs all the way down

Sunday’s weather forecasts had 8-10 inches of snow coming on Saturday, and another 6-7 inches on Sunday. Monday, that changed to 1 inch and 3-4 inches, respectively. Today, it’s 1-2 and 4-5. Who knows what tomorrow will bring?

This matters to me only because it affects the amount of work I have to do to clear the driveway and get my sister to the airport on Monday morning. Otherwise I’d be content to make a path just wide enough to take the trash down Sunday night.

File-ing under peculiar…

I fired up s3cmd to refresh my offline backup of the S3 buckets I store blog pictures in, and it refused to copy them, blowing chunks with an unusual error message. Turns out that the Mac mount of the NAS folder had obscure permissions errors for one sub-directory. On the NAS side, everything is owned by root, but the SMB protocol enforces the share permissions, so everything appears to be owned by me, including the affected sub-dir. Deep down, though, the Mac knew that I shouldn’t be allowed to copy files into that directory as me. Worked fine as root, though.

And, no, I did not give an AI permission to explore my files and run commands to debug the problem. That way madness lies. 😁

Building a better 1girl

One of the most prolific and enthusiastic members of the SwarmUI Discord (who has insanely good hardware for generating images and videos; the spare card he’s using just for text-generation is better than my only one) has done a lot of tinkering with LLM-enhanced prompting, adding features to the popular (with people who aren’t me) MagicPrompt extension.

(why don’t I like it? the UI is clunky as hell, it doesn’t work well with the text-generation app I run on the Mac Mini, LM Studio, and it really, really wants you to run Ollama for local LLMs, which is even clunkier; I’ve tried and deleted both of them multiple times)

Anyway, he’s shared his system prompts and recommended specific LLMs, and one of the things he’s been tinkering with is using different enhancements for each section of his dynamic prompts. So, one might be specifically instructed to create short random portrait instructions, while another generates elaborate cinematic backgrounds, and yet another describes action and movement in a video. Basically, this keeps the LLM output more grounded by not asking it to do everything in one shot.

I felt like tinkering, too, so I updated my prompt-enhancer to support multiple requests in a single prompt, with optional custom system prompts pulled from ~/.pyprompt.

Initial results were promising:

I saved the prompt as its own wildcard (note that using “:” to mark the LLM prompt preset in the @<...>@ block was a poor choice for putting into a YAML file, since it can get interpreted as a field name unless you quote everything…) and kicked off a batch before bedtime:

__var/digitalart__ A __var/prettygal__ with __skin/normal__
and __hair/${h:normal}__, and her mood is
{2::__mood/${m:old_happy}__. __pose/${p:sexy}__|__mood/lively__}.
She is wearing @<fashion: sexy retro-futuristic science fiction
pilot uniform for women; must include a futuristic pistol >@
She is located __pos__ of the image.
@<cinematic: __place/${l:future}__. __var/scene__. >@

(someday I’ll clean up and release the wildcard sets…)
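For the curious, the multi-request idea can be sketched in a few lines of Python. This is not the actual prompt-enhancer code: the regex, the `expand_blocks` name, and the `enhance(preset, text)` callback are all assumptions for illustration, and the sketch assumes every block carries a preset name before the colon. Loading system prompts from ~/.pyprompt and expanding the `__wildcard__` references are left out entirely.

```python
import re
from typing import Callable

# Matches @<preset: free text>@ ; the text may span several lines.
BLOCK = re.compile(r"@<\s*(\w+)\s*:(.*?)>@", re.DOTALL)


def expand_blocks(prompt: str, enhance: Callable[[str, str], str]) -> str:
    """Replace each @<preset: text>@ block with enhance(preset, text).

    `enhance` stands in for whatever sends `text` to an LLM using the
    system prompt selected by `preset` (hypothetical interface).
    """
    def _replace(match: re.Match) -> str:
        preset = match.group(1)
        text = " ".join(match.group(2).split())  # collapse line wraps
        return enhance(preset, text)

    return BLOCK.sub(_replace, prompt)


# Stub enhancer that just tags each request, to show the plumbing.
demo = expand_blocks(
    "She is wearing @<fashion: pilot uniform >@ @<cinematic: a hangar. >@",
    lambda preset, text: f"[{preset}|{text}]",
)
print(demo)
```

Each block becomes its own small LLM request with its own system prompt, which is the whole point: no single request has to describe the outfit, the background, and the pose at once.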

After weeding out some anatomy fails, goofy facial expressions, and Extremely Peculiar ZIT Guns, I got a lot of results that easily cleared the bar of “decent wallpaper to look at for 15 seconds”.

Cold-ish

Tonight’s low is tomorrow’s high, and then it’s gonna get cold.

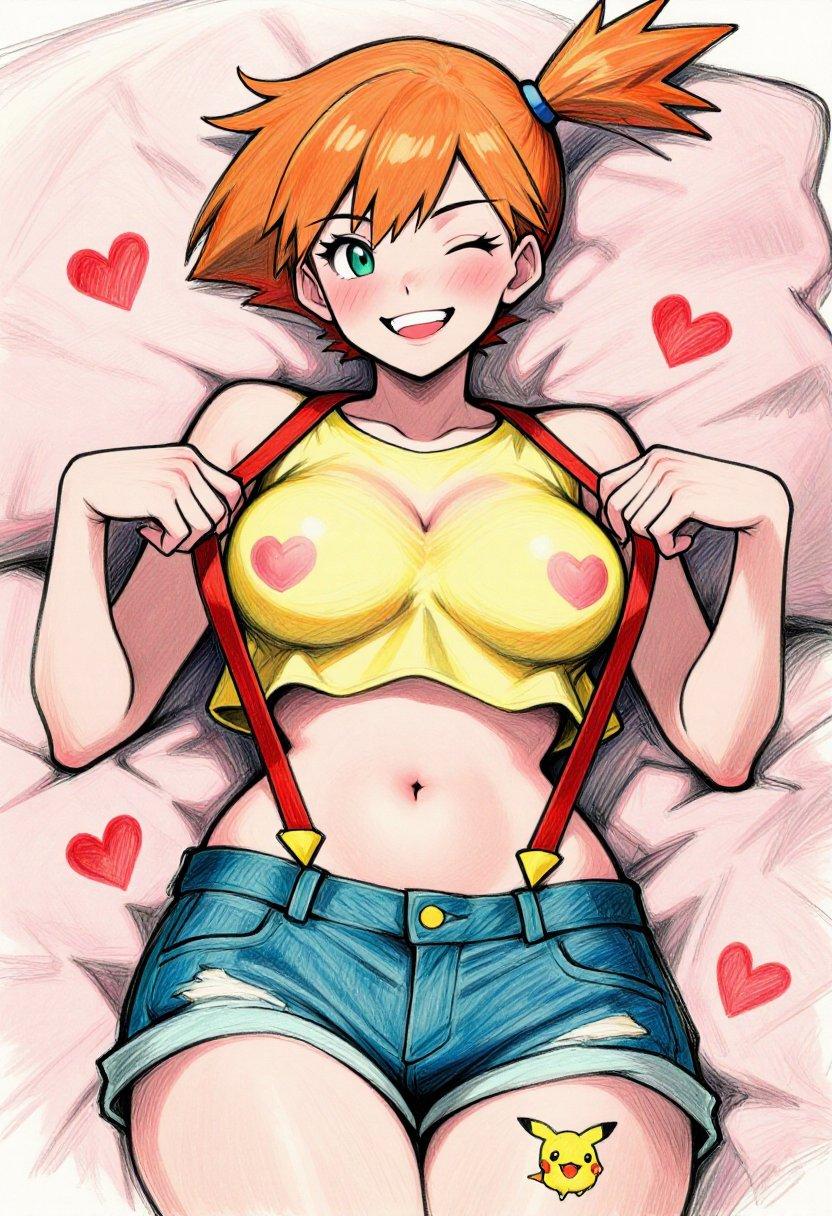

Glad I went cheap…

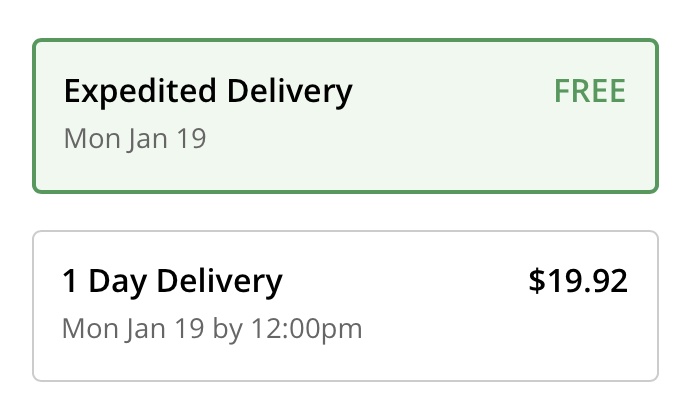

Friday, I was faced with this choice:

I did not pay $20 to get it a few hours earlier on Monday. As a result, I got it on Sunday. Signature required, and FedEx promised to have it in my hands by 1:15 PM, but then it stopped here:

See that little parking loop on the left? The truck with my package stopped there for about an hour, starting about 20 minutes before the end of the promised delivery window. And then the tracking page updated with “by end of day”. Eventually it reappeared on a truck a mile or so away, but without a new delivery window. It did show up, so I’m happy, but there’s something curious about the location.

Obviously there are no customers at the water park when it’s 20°F outside, but since it’s been undergoing major renovation all year, I don’t think there were employees there on a Sunday afternoon, either. But that’s not what’s curious. The curious part is that the last time I had a FedEx package get delayed for half a day, the tracking stopped at the exact same location.

“You keep using that word…”

(story is not new, but recently linked by Insty)

Upon hearing that AI models are accurately detecting patient race from X-ray images, regardless of what body part is featured, a professor of “biological anthropology” sought out for an opinion by The Boston Globe says:

“it is likely based on where a person’s ancestors evolved, rather than based on race”

Of course, he has a book called “Racism Not Race”, the blurb for which can be summed up as “I’m here to reinforce my woke bona fides”.

Garlicloaf

Thursday was a long day that involved picking up my parents (20 minutes), driving them to Columbus (90 minutes each way), lunch (60 minutes), killing time at a nearby store (30 minutes), waiting around until their medical appointment was over (~70 minutes hanging out in Barnes & Noble, which is a long time in a bookstore with basically no interesting books; I bought a pen), driving them home (90 minutes), grabbing a quick takeout dinner (15 minutes), and driving home in the dark (20 minutes). All after a half-day of work.

Dinner was from a gourmet grocery that makes their food in-house, and generally does a pretty good job. Today I Learned, however, that their idea of “meatloaf” is underseasoned meat mixed approximately 50/50 with slivers of garlic. They had a sugary glaze to cover it with, which I’d declined, expecting some actual flavor.

No. All I could taste was slightly-crunchy undercooked garlic. I ended up pitching most of it, because it was just terrible. Fortunately I had a frozen pizza handy.

“🎶 Memories, all alone in a GenAI…”

Perhaps the greatest flaw in Z Image Turbo is that it has no idea what a “catgirl” is. Even LLM prompt enhancement usually doesn’t help…

Fun with LLM-enhanced prompting

“I ain’t never seen whores like these before, fellas, but I’m willin’ to give ’em a go. Y’with me?”

Original prompt:

A large collection of steampunk sex dolls, arranged in a victorian-era brothel. A group of dusty cowboys are staring at the dolls, looking confused.

LLM output:

Steampunk-inspired design with intricate brass and gears; large mechanical sex dolls arranged in a Victorian brothel setting, emphasizing detailed craftsmanship—padded waistbands, visible internal mechanisms, delicate filigree. A group of dusty cowboys stand behind, dressed in frayed leather hats and weathered boots, their faces partially obscured by wide brims, staring at the collection with curious confusion. The scene is captured in a dimly lit, atmospheric interior with flickering gas lamps casting long shadows across polished brass fixtures and antique mirrors. The composition balances social commentary with fantasy elements—dusty silks, oil-stained hands, and a sense of time-worn mystery. Rendered in rich color palette with high contrast highlights on metal surfaces and soft warm tones for skin tones, achieving an immersive, tactile quality.

Frieren 2, episode 1

“We know you watched season 1, and you came back for the story, so we’re not going to waste your time and ours on a lengthy recap.”

Verdict: seasonal oasis.

(“I was just polishing my staff, when it suddenly went off”)

Official Fallout TV merchandise

Includes sofa throw pillows. For throwing at the screen because season 2 is sofa-king awful.

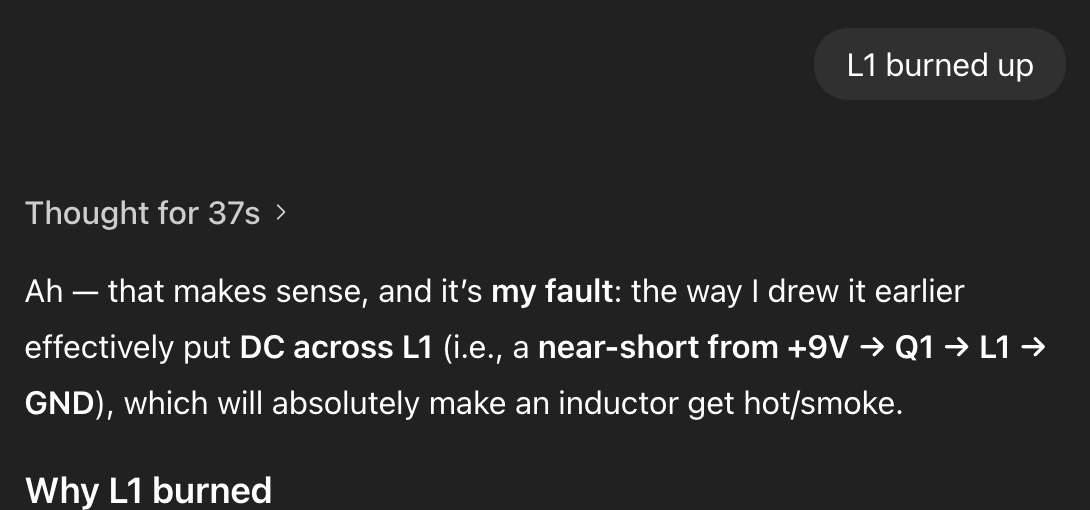

Compatible ZIT LoRA

Misty - Tomboyish Mermaid and PencilMadness, which I’ve used before.

Hmmm, let’s push it a bit, shall we?

True

Hmmmm, let me think about it for a minute…

Caption Contest…

There have been several iterations of the new partially-LLM-enhanced prompts, and not all of the results have been completely successful. In fact, some of them cry out for snarky captioning. Feel free to join in.

To avoid ambiguity, I’ve added numbered headers.