Generally between versions 4 and 8 of any software, users switch from “can’t wait” to “oh fuck, not another one”.

— Pixy Misa takes your versionity

Frieren 2, episode 6

Worth the wait, as Frieren is reunited with two of the least annoying characters from the exam arc. I’d have preferred the little hot-pants redhead, but she and her frenemy failed the exam, so they’re not allowed to enter the Northern plateau. Sadly, this means we’ll likely never see them again unless there’s a collection of side stories.

Anyway, this week reminds us that demons are evil-with-a-capital-E, using language only to deceive. This will no doubt trigger another round of how-dare-they reactions from the Left, sigh.

It’s a slow buildup to a confrontation with a new Big Bad that will take at least two episodes. With four first-class mages on the scene, plus Stark, the fight is likely to be flashy, so maybe they’ll pull out all the stops and give it three.

(unrelated postcard from AI Japan, because AI love you long time)

Postcards from AI Japan

(Frieren 2 episode 6 was delayed a week…)

Still not outta snow, eh?

At least I’m not getting what NYC is getting. I’ve had enough of real snow shoveling for the year.

Ei-en Flux

I’m taking a sterile laptop with me to Japan, with all my credentials securely stored in 1Password and just enough of my usual environment that I could do things like, y’know, blog if I wanted to. Or sign up for a free trial of Amazon Prime in order to buy things they won’t ship internationally.

Note: Amazon’s adult-goods hider is really annoying. If your VPN drops at any point in a session, visiting a single Amazon Japan URL is enough for it to flag you as outside Japan. After that, you can no longer see any “sensitive” products, and searches come up empty without explanation, making it look like actresses, models, and entire categories of products simply do not exist. I had to log out and back in to reset it. The reverse is apparently also true: briefly turning on a VPN while in Japan will flag you as a dirty foreigner and pretend that K-cups are only for coffee…

Anyway, I decided to make some fresh safe-for-airline-security wallpaper for the travel laptop.

I used an LLM to generate a list of scenic locations in Japan, and added a list of artists and styles that someone else generated with an LLM. I ran the combination through my dynamic-prompts randomizer, then fed just the random scenes through another LLM for enhancement (better results than feeding the style and location through in one pass), then fed the completed prompts through an LLM instructed to just perform cleanup and QA.

Then I ran the batches through Flux.2-Dev and did a quick pass to eliminate the terrible and the goofy. That produced about 550 images that were good enough to look at for 5-10 seconds. For blogging purposes, I deathmatched it down to a more sensible list. Size is a modest 1440x900, since Flux2 is almost too big to run on an RTX 4090.
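For the curious, the combination step above can be sketched in a few lines of Python. This is a minimal illustration, assuming the randomizer simply pairs a random location with a random style; the sample lists, the function names, and the `enhance_scene` stub (standing in for the LLM enhancement pass) are all hypothetical, not the actual dynamic-prompts tool. It keeps scene and style separate so that only the scene goes through enhancement, matching the observation that one-pass enhancement gave worse results.

```python
import random

# Hypothetical sample lists; the real ones were LLM-generated and much longer.
LOCATIONS = [
    "torii gates of Fushimi Inari at dawn",
    "thatched farmhouses of Shirakawa-go under snow",
    "Mount Fuji reflected in Lake Kawaguchi",
]
STYLES = [
    "ukiyo-e woodblock print",
    "soft watercolor painting",
    "colored-pencil sketch with precise linework",
]


def random_pairs(locations, styles, count, seed=None):
    """Pick `count` random (scene, style) pairs, keeping the parts separate
    so the scene can be enhanced on its own before the style is attached."""
    rng = random.Random(seed)  # seeded so a batch can be regenerated exactly
    return [(rng.choice(locations), rng.choice(styles)) for _ in range(count)]


def enhance_scene(scene):
    """Hypothetical placeholder for the LLM enhancement pass (returns input unchanged)."""
    return scene


def build_prompts(pairs):
    """Enhance only the scene, then append the style to form the final prompt."""
    return [f"{enhance_scene(scene)}. Style: {style}." for scene, style in pairs]


if __name__ == "__main__":
    for prompt in build_prompts(random_pairs(LOCATIONS, STYLES, count=4, seed=7)):
        print(prompt)
```

In a real pipeline, `enhance_scene` would call whatever LLM you use, and a final cleanup/QA pass would run over the assembled prompts before they go to the image model.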

Fire Weather Watch!

Went to look at the weather forecasts for Chicago, since I’ll be visiting my sister there soon. Based on the past several weeks, my primary concern was snow and ice on the drive over. Instead, they’re promising warm, dry weather with high winds and a chance of firestorms.

Guess I’d better pack a water type…

A village is missing their idiot…

Ilya Somin is perhaps the most reliably wrong commentator in what can laughably be called the “libertarian” gang at Reason, but this one takes it to a new level:

“If, as Musk says, the U.S. was all about English/Scots-Irish culture, there would have been no need for the American Revolution. The British Empire was dominated by those groups.”

Jaw-dropping ignorance from someone who claims the authority to lecture on law and liberty.

Apple, on the other hand, has plenty of them

Years ago, someone at Apple broke the keyboard shortcuts for switching between windows in Terminal.app. My suspicion is that it had something to do with adding tab support and making the app use those same shortcuts for tab-switching by default.

Someone finally filed a bug with a simple, clear repeat-by: hide the app. That’s it. Hide the windows just once, and they all lose their shortcuts. And I’m sure the tiny handful of QA testers left for macOS have never tested non-default behaviors.

Frieren 2, episode 5

In which Frieren does the meme, and then gets sentenced to 300 years of hard labor.

I’m guessing there’s an AI behind this…

Dear Apple,

A while back I bought the recently updated AirPods Pro 3. They worked fine with my phone, tablet, and laptop, but Apple’s Find My service couldn’t see them. At the time, the explanation was that I hadn’t yet upgraded my devices to the “Liquid Ass” OS v26. Since I wasn’t traveling much (indeed, I often don’t leave the house for days, especially the way this winter has been going…), I didn’t worry about it.

Now that there’s a mostly working 26.3 release, and they’ve restored some of the legibility that was abandoned in the pursuit of random UI restyling, I updated my phone and tablet (not the Mac; that needs to work). Unsurprisingly, the AirPods did not magically start working correctly in Find My; they were detected, but it insisted that setup was incomplete and they could not be located.

Clicking on the error message took me to a support page, where none of the suggested fixes worked. At all. There’s yet another screen where you have to completely disassociate the device from your Apple account and then reconnect it.

(flux2 is a lot slower and more memory-intensive than Z-Image Turbo, but it’s better at text, styles, and prompt following; there’s a lot I can’t do with it because my graphics card only has 24 GB of VRAM, but for simple one-off pics without refining, upscaling, or multi-image editing, it’s great; I’m thinking about setting up SwarmUI on Runpod with a better card, for the times when I want precise prompting)

Amazon Japan gets clean

Unless you have an active VPN connection with Japanese servers, you can no longer view adult books and videos on Amazon Japan. Searches pretend there’s nothing there, and direct links to products throw up an error page as if the page doesn’t exist. Items in wishlists appear normally with their title and picture, but you can’t click on them or move them to your cart.

(the accurately-rendered poster titles come from the collection of dirty-book covers I acquired some years back as part of my collage-wallpaper project)

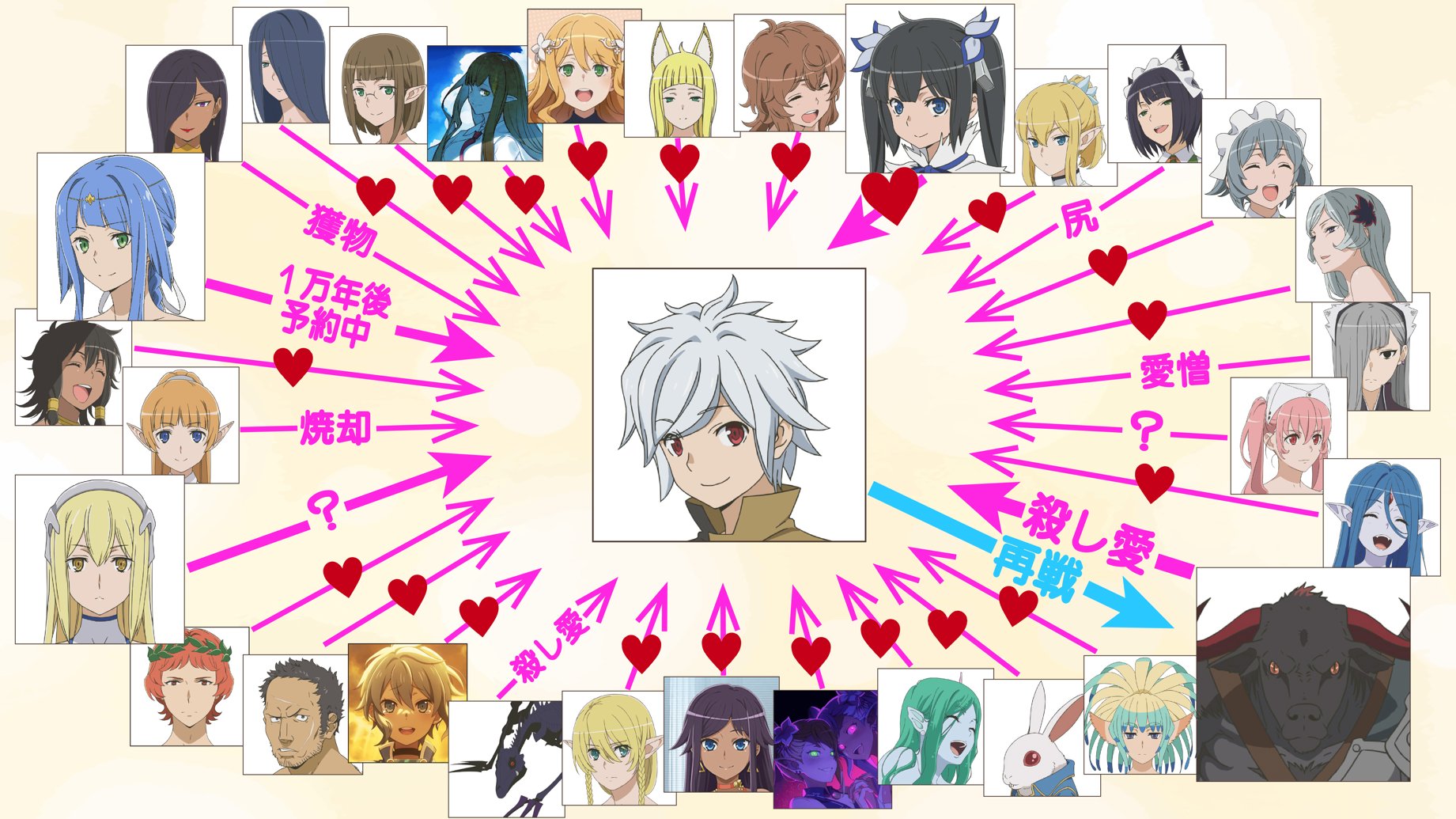

How to spot the hero in a harem anime…

“Well, bye”

[Update! Our Wholesome Choirboy doesn't want to go back to Ireland because... he fled the country for dealing drugs and obstructing police].

[Updated update! He also abandoned two small children when he became a fugitive; all we need now is for him to have set fire to a police car, and he'll be a proper Democrat]

Insty links to an idiotic tweet by no-borders-for-you libertarian Nick Gillespie, who seems to have expected that MAGA would repent of its sinful ICE-worship upon seeing a sympathetic white Irish illegal. To no one’s surprise except perhaps Gillespie’s, only the usual suspects answered the call.

Perhaps because it was quickly pointed out that Our Not-So-Diverse Hero overstayed a tourist visa for over fifteen years before marrying a citizen in 2025 in an attempt to dodge deportation. Gillespie also fails to mention that he’s only being held in detention because he refused cash and a free trip back to Ireland. Also, how was Our Upright Immigrant making money without valid ID all those years?

(from the Cherish The Ladies album of the same name; I like the song, but the message falls apart when you actually think about the lyrics…)

(side note: Cherish The Ladies ended up suing their record label for unpaid royalties…)

From the X files...

If you’re cold, they’re cold…

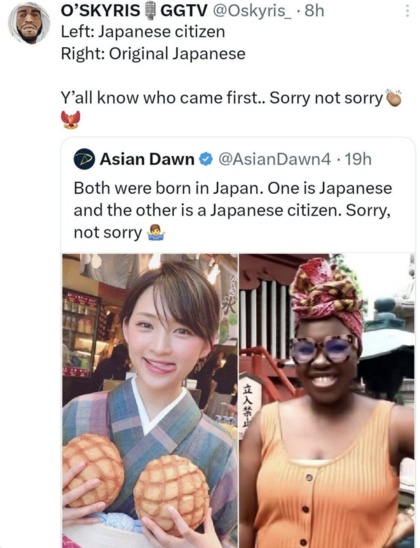

Saki is politely baffled

Eight years ago, I blogged this picture:

This week, it appeared on xTwitter again in a new context, and she linked to it, asking “WTF?”.

A number of people made an attempt to unpack the layers of bullshit, but I don’t think they were successful. What I found most interesting about wading through it all was that not a single person ever brought up the little joke she was making in the original picture. They were too busy grinding axes for one side or the other.

Perspective, forced

Choose wisely

(pity they all look like mass-produced emotionless fembots fresh off the assembly line…)

“Go Fish yourself”

(not sure how I feel about someone hijacking the Amelia meme to build their social-media engagement; at least it’s not some Leftist weenie trying to subvert it)

Not every baffling picture is AI…

(amusingly, when I used several edit models to try to restore and colorize this photo, they all gave Godzilla a dog head unless I specifically called it out as “a picture of Godzilla”; so, yeah, beware invented details in “AI-enhanced” photos)

Days Of Future Past

「記憶を残したまま小学生(何年生でもいい)に戻れる」 (“You can go back to elementary school, any grade you like, with all your memories intact.”)

My translation:

(had to go all the way up to Flux.2-Dev to get the text to render accurately, at 3 minutes per image; Klein and Qwen Image Edit’s text rendering ranged from “passable with terrible spelling” to “complete gibberish inked in a random font”)

Frieren 2, episode 4

This week, it’s the thought that counts, especially when you’re a pair of socially-awkward teens fumbling through their first date. Much easier to fight ogres. Or Pokemon.

(fan-artists frequently draw Fern much bustier than she is in the anime, while Nagi is pretty much spot-on)

Underground Healer, anime and novel

Since I’m only watching Frieren this season, I cast back looking for recommendations of completed series, and found a lot of positive commentary for this one. I’m watching about an episode-and-a-half a day while on the elliptical, but it’s interesting enough that I picked up the first few translated light novels.

And then I hit this bullshit in book 3:

“You clap at us, we clap back harder.”

The speaker is one of the three slum-gang boss hotties, squaring off with a bruiser from the Black Guild who’s smashing up their festival. She says this as their argument escalates into a full-scale gang battle.

This is not the first line that didn’t ring true, but it’s easily the worst.

Not only does the slang instantly date itself, it makes no fucking sense in the scene. It’s like a mafia don getting uptwinkles from his lieutenants.

What could possibly go wrong?

Apple is allowing third-party AI apps in CarPlay. The jokes just write themselves…

I’m okay with it this time…

I put in an Amazon order Thursday night that promised delivery between 7 and 11 AM Friday. Sometime before that window opened, the “clear skies” forecast proved wrong, and 4 inches of fresh snow fell over the course of several hours. It didn’t stop until after noon, and the trucks didn’t come out to clear the roads until evening.

I cleared a path to the street and salted it, but I didn’t actually expect the package to show up until Saturday, which it did.

A quick Amelia...

I knocked together a quick prompt based on the Last Rose of Albion version.

Original Amelia wore a darker jacket:

After my earlier text-rendering adventures, it didn’t surprise me that Z-Image Turbo couldn’t render “whoso” (whoro, whojo, whoyo, whobo, …). What annoyed me was that it kept changing “hammer” to “hommer”; I could watch it start off right and then mis-correct it. Also, more often than not, the sword was not in the stone.

The new hotness is rendering with ZIT and then doing style edits with Flux.2 Klein; this is easy to set up in SwarmUI thanks to Juan’s Base2Edit extension. Same pic, with style set to “colored-pencil sketch with precise linework”: