Sysadmin

Technically, it was handling it...

Woke up this morning, looked at my phone, and saw that I hadn’t received any work email since about 1:15 AM. Since I’m guaranteed to get at least one hourly cron-job result, that’s bad.

Log in to the mail server (good! that means the VPN is up and the servers still have power!), check the queue, and it eventually returns a number in excess of 500,000.

Almost all of them going to the qanotify alias. Sent from a single server.

The good news is that this made it very easy to remove them all from the queue. The bad news is that I can’t just kill it at the source; QA is furiously testing stuff for CES, and I don’t know which pieces are related to that. And, no, no one in QA actually checks for email from the test services, so they won’t know until I — wait for it — email them directly.
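Sorting half a million queued messages by sender and recipient is what makes a purge like that easy. The post doesn't say which MTA the mail server runs, so this is a sketch assuming Postfix 3.1 or later, where `postqueue -j` prints one JSON object per queued message with `queue_id`, `sender`, and a `recipients` list. The addresses in it are hypothetical.

```python
import json
from collections import Counter


def load_queue(lines):
    """Parse `postqueue -j` output: one JSON object per queued message."""
    return [json.loads(line) for line in lines if line.strip()]


def tally_recipients(messages):
    """Count queued messages per (sender, recipient address) pair."""
    counts = Counter()
    for msg in messages:
        for rcpt in msg.get("recipients", []):
            counts[(msg.get("sender", ""), rcpt.get("address", ""))] += 1
    return counts


def queue_ids_for(messages, recipient):
    """Queue IDs of every message that has `recipient` among its recipients."""
    return [
        msg["queue_id"]
        for msg in messages
        if any(r.get("address") == recipient for r in msg.get("recipients", []))
    ]
```

`tally_recipients(...).most_common(5)` shows where the flood is going; the IDs from `queue_ids_for` can then be written one per line to `postsuper -d -`, which reads queue IDs from standard input.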

For more fun, the specific process that’s doing it is not sending through the local server’s Postfix service, so I can’t shut it down there, either. It’s making direct SMTP connections to the central IT mail relay server.

Well, that I can fix. plonk

(this didn’t delay incoming email from outside the company, just things like cron jobs and trouble tickets and the hourly reports that customer service needs to do their jobs; so, no pressure, y’know)

First update

QA: “I see in the logs that the SMTP server isn’t responding.”

J: “Correct. And it will stay that way until this is fixed.”

(I find myself doing this a lot these days; User: “X doesn’t do Y!”, J: “Correct”)

Second update

Dev Manager: “Could you send us an example of the kind of emails that you’re seeing?”

J: “You mean the one that’s in the message you’re replying to?”

Third update

DM: “Can you give my team access to all of the actual emails?”

J: “No, I deleted the 500,000+ that were in the spool. But it looks like at least 25,000 got through to this list of people on your team, who would have known about this before I did if they didn’t have filters set up to ignore email coming from the service nodes.”

J: “And, what the hell, here’s thirty seconds of work from the shell isolating the most-reported CustomerPKs from the 25,000 emails that got through, so you can grep the logs in a useful way.”
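The thirty seconds of shell work isn't shown, so here is the same idea as a rough Python sketch. The message format is an assumption: the pattern below guesses that bodies contain something like `CustomerPK=12345` or `CustomerPK: 12345`.

```python
import re
from collections import Counter

# Hypothetical pattern; the real alert emails may format the key differently.
CUSTOMER_PK = re.compile(r"CustomerPK\s*[:=]\s*(\d+)")


def top_customer_pks(bodies, n=10):
    """Return the n CustomerPKs mentioned in the most messages, with counts."""
    counts = Counter()
    for body in bodies:
        # Count each PK once per message, so one chatty message can't skew the ranking.
        counts.update(set(CUSTOMER_PK.findall(body)))
    return counts.most_common(n)
```

Counting per message rather than per occurrence keeps the ranking aligned with "how many alerts mention this customer", which is what you want when deciding what to grep for.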

Fourth update

John: “Ticket opened, assigned to devs, and escalated.”

(John used to work for me…)

Fifth update

Senior Dev: “Ooh, my bad; when I refactored SpocSkulker, I had it return ERROR instead of WARNING when processing an upgrade/downgrade for a customer that didn’t currently have active services. Once a minute. Each.”

SD: “Oh, and you can hand-edit the Tomcat config to point SMTP to an invalid server while you’re waiting for the new release.”

J: “Yeah, no, I’ll just keep blocking the traffic until the release is rolled out and I’ve confirmed with tcpdump.”

(one of my many hats here used to be server-side QA for the services involved, so I immediately knew it was coming from SpocSkulker, and could have shut it off myself; but then it wouldn’t have gotten fixed until January)

Anticipated update

J receives massive fruit basket from Production team for catching this before it rolled out to them and took out their email servers.

'Fun' with HSTS

There is nothing wrong with using good old-fashioned HTTP without encryption. There are situations where it is a perfectly reasonable thing to do, and the protocol shouldn’t be blindly tagged with dire warnings about people kidnapping your dog, stealing your credit cards, and secretly replacing your spouse with Folgers Crystals.

Browser vendors disagree, for reasons not-entirely-wholesome, so it’s been an ongoing struggle at the office to deal with people who file helpdesk tickets about broken SSL on sites that never had SSL to begin with, and don’t understand that their browser is silently rewriting URLs and hiding the evidence.

With recent browser releases, it got to the point where we had to put SSL reverse proxies in front of a bunch of internal web sites just to shut up the whining. This was non-trivial, and left a number of sites only partially functional for most of a day (because of course this was so important that it couldn’t be tested, QA’d, or released on a weekend). Because once a site gets “upgraded” to HTTPS, the browser responds to any HTTP links like CNN covering a Trump rally.
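The "silently rewriting URLs" part is HSTS (RFC 6797) at work: once a browser sees a `Strict-Transport-Security` header from a host, it remembers that host for `max-age` seconds and turns every `http://` link to it (and to its subdomains, with `includeSubDomains`) into `https://` before any request goes out. A toy Python model of that bookkeeping, heavily simplified (no preload list, no check that the header arrived over HTTPS); hostnames are hypothetical:

```python
from urllib.parse import urlsplit, urlunsplit


def parse_sts(value):
    """Parse a Strict-Transport-Security header into (max_age, include_subdomains).

    Returns None when max-age is missing or malformed; the browser then ignores the header.
    """
    max_age, include_subdomains = None, False
    for directive in value.split(";"):
        name, _, arg = directive.strip().partition("=")
        name = name.strip().lower()
        if name == "max-age":
            arg = arg.strip().strip('"')
            if not arg.isdigit():
                return None
            max_age = int(arg)
        elif name == "includesubdomains":
            include_subdomains = True
    return None if max_age is None else (max_age, include_subdomains)


class HstsCache:
    """Tiny model of a browser's HSTS store."""

    def __init__(self):
        self._hosts = {}  # host -> (expiry_time, include_subdomains)

    def note_header(self, host, value, now):
        parsed = parse_sts(value)
        if parsed is None:
            return
        max_age, include_subdomains = parsed
        if max_age == 0:
            self._hosts.pop(host.lower(), None)  # max-age=0 means "forget this host"
        else:
            self._hosts[host.lower()] = (now + max_age, include_subdomains)

    def _covered(self, host, now):
        # Check the host itself, then each parent domain that opted in to subdomains.
        labels = host.lower().split(".")
        for i in range(len(labels)):
            entry = self._hosts.get(".".join(labels[i:]))
            if entry and entry[0] > now and (i == 0 or entry[1]):
                return True
        return False

    def rewrite(self, url, now):
        """Return the URL the browser would actually fetch."""
        parts = urlsplit(url)
        if parts.scheme != "http" or not parts.hostname or not self._covered(parts.hostname, now):
            return url
        netloc = parts.netloc
        if parts.port == 80:
            netloc = netloc.rsplit(":", 1)[0]  # explicit :80 becomes the https default, 443
        return urlunsplit(("https", netloc, parts.path, parts.query, parts.fragment))
```

Note that nothing in this flow ever touches the plain-HTTP site, which is exactly why a site with no HTTPS listener looks "broken" to the user rather than merely unencrypted.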

That was Tuesday. Wednesday night, I was wandering through the desert on a sand-seal with no name, and out of the corner of my eye I saw my phone sync up about a dozen emails, all complaining about this new HSTS thingie (aka “SSL Bondage”).

Someone urgently needed access to a site that was rejecting SSL connections, so he CC’d a half-dozen people along with the helpdesk email address. Several of them responded to all, creating additional tickets. Several people responded to the responses.

When I’d finished merging the 10 duplicate tickets, my one-line response was “correct. we haven’t added HTTPS to that site yet”.

Configuring a static IP in %pre

When building out a new kickstart server for CentOS 7.x/8, I vigorously ignored the cruft that had built up in the old one and started over. One useful improvement is handling the basic network configuration properly:

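%pre --log=/tmp/pre.log --interpreter=/usr/bin/bash
# Site DNS servers, comma-separated as the kickstart network command expects
DNS=10.201.0.2,10.201.0.3
# Interface and CIDR address (e.g. 10.201.4.57/24) that DHCP handed the installer
IFACE=$(ip -4 -o a | awk '/scope global/{print $2}')
IPADDR=$(ip -4 -o a | awk '/scope global/{print $4}')
# ipcalc prints HOSTNAME=, NETMASK=, and NETWORK= assignments; declare turns them into variables
declare $(ipcalc "$IPADDR" -h -m -n)
# Gateway is the first host on the subnet (network address + 1); unlike a plain ".1"
# suffix, this also works for networks whose address doesn't end in .0
GATEWAY=${NETWORK%.*}.$(( ${NETWORK##*.} + 1 ))
cat << EOF > /tmp/network.cfg
network --onboot=yes --device=$IFACE --bootproto=static --noipv6 --activate --nameserver=$DNS --hostname=$HOSTNAME --ip=${IPADDR%%/*} --netmask=$NETMASK --gateway=$GATEWAY
EOF
%end

%include /tmp/network.cfg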
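For anyone who wants to sanity-check what that `%pre` block will write before booting a real box, here is the same derivation as a small Python function. It assumes, as the snippet's `.1` gateway does for networks ending in `.0`, that the gateway is the first host address on the subnet; the interface name and hostname in any test input are hypothetical.

```python
import ipaddress


def kickstart_network_line(iface, cidr, nameservers, hostname):
    """Build the kickstart 'network' line for a static config derived from a DHCP address."""
    addr = ipaddress.ip_interface(cidr)         # e.g. 10.201.4.57/24
    gateway = addr.network.network_address + 1  # assumption: gateway is the first host
    return (
        f"network --onboot=yes --device={iface} --bootproto=static --noipv6 --activate "
        f"--nameserver={','.join(nameservers)} --hostname={hostname} "
        f"--ip={addr.ip} --netmask={addr.netmask} --gateway={gateway}"
    )
```

Feeding it a /25 that starts at .128 is a quick way to see why deriving the gateway arithmetically is safer than string-trimming a trailing `.0`.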

We use TXT records in DNS to automagically set static DHCP leases and PXE config files, so that all we need for an unattended install of a new server is the MAC address of the first NIC. This little snippet tells Anaconda to hardcode that IP config so the server doesn’t depend on DHCP after the install is done. This has saved our bacon many times after power outages, because if your DHCP server doesn’t come back quickly (or at all), dhclient eventually gives up, and then you have to touch everything by hand to get them back online.
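The post doesn't describe the TXT record layout or name the DHCP server and PXE loader, so this sketch assumes ISC dhcpd and PXELINUX and takes the hostname, MAC, and IP as already pulled out of DNS. PXELINUX looks for a per-machine config named `01-` (ARP hardware type 1, Ethernet) followed by the MAC in lowercase with dashes; dhcpd pins an address with a `host` declaration.

```python
import re

# Six hex octets separated by ':' or '-'.
MAC_RE = re.compile(r"^[0-9a-f]{2}([:-][0-9a-f]{2}){5}$")


def normalize_mac(mac):
    """Lowercase, colon-separated MAC, or ValueError if it doesn't look like one."""
    mac = mac.strip().lower()
    if not MAC_RE.match(mac):
        raise ValueError(f"not a MAC address: {mac!r}")
    return mac.replace("-", ":")


def pxelinux_config_name(mac):
    """File name PXELINUX tries under pxelinux.cfg/ for this NIC."""
    return "01-" + normalize_mac(mac).replace(":", "-")


def dhcpd_host_stanza(fqdn, mac, ip):
    """ISC dhcpd host declaration pinning this MAC to a fixed address."""
    short = fqdn.split(".")[0]
    return (
        f"host {short} {{\n"
        f"  hardware ethernet {normalize_mac(mac)};\n"
        f"  fixed-address {ip};\n"
        f"}}\n"
    )
```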

To get the base install down to something simple, quick, and easy to secure (~380 RPMs, minimal network services), I dusted off the Perl script I wrote back in 2009 that does dependency analysis based on the repodata files comps.xml and primary.sqlite. Still works pretty well, actually.
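The Perl script itself isn't shown, so this is only a Python sketch of the core idea: start from a seed list of package names (standing in for what you'd pull out of comps.xml groups) and walk requires → provides until nothing new turns up. It assumes yum's primary.sqlite layout, where the `packages`, `requires`, and `provides` tables join on `pkgKey`. Versions, arches, and competing providers are ignored (lowest `pkgKey` wins), and file requires only resolve if they appear in `provides`.

```python
import sqlite3
from collections import deque


def install_closure(db, seeds):
    """Return (needed package names, unresolved names/capabilities) for the seed list."""
    cur = db.cursor()
    needed, unresolved = set(), set()
    queue = deque(seeds)
    while queue:
        name = queue.popleft()
        if name in needed:
            continue
        row = cur.execute(
            "SELECT pkgKey FROM packages WHERE name = ? ORDER BY pkgKey LIMIT 1", (name,)
        ).fetchone()
        if row is None:
            unresolved.add(name)
            continue
        needed.add(name)
        reqs = cur.execute("SELECT name FROM requires WHERE pkgKey = ?", (row[0],)).fetchall()
        for (req,) in reqs:
            if req.startswith("rpmlib("):
                continue  # satisfied by rpm itself, not by a package
            provider = cur.execute(
                "SELECT p.name FROM provides AS v JOIN packages AS p ON p.pkgKey = v.pkgKey "
                "WHERE v.name = ? ORDER BY p.pkgKey LIMIT 1",
                (req,),
            ).fetchone()
            if provider is None:
                unresolved.add(req)
            elif provider[0] not in needed:
                queue.append(provider[0])
    return needed, unresolved
```

Usage would be `install_closure(sqlite3.connect("primary.sqlite"), ["bash", "openssh-server"])`, with the unresolved set telling you which capabilities need a human decision.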

I deeply regret that we had to bite the bullet and start using a systemd-based release. CentOS 6.x was just getting too long in the tooth for functionality, and then one day we suddenly needed to ship a new DNS/DHCP/NTP/mail server to a new office, and the only available hardware was a NUC too new to run 6.10. My feelings about systemd can be summed up with this Ace Ventura clip.

"War. War Never Changes..."

And by that I mean the war on users filling up file systems.

When I got into this business, it was whack-a-meg, as undergrads discovered Usenet binary newsgroups and the “zurich” ftp site at MIT, and tried to be clever about hiding their stash.

Over time, it became whack-a-gig, and largely moved from porn to work-related stupidity, such as tarball-based version control (bonus for having multiple sets of tarball, compressed tarball, and unpacked source tree), two-year-old copies of Production logs (often with an accompanying set of tarballs…), laptop backups including music and video libraries, etc., etc.

This week it’s whack-a-terabyte, as I try to figure if there’s actually a legitimate reason for the accounting share to have multiple folders that are each larger than the entire company’s 13-year source-control history…
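Every round of whack-a-whatever starts with finding where the bytes actually are. A small Python stand-in for running `du -s --apparent-size` on each top-level folder of a share; the share path is whatever you pass in, and symlinks are skipped rather than followed.

```python
import os


def folder_sizes(root):
    """Apparent size in bytes of each immediate subdirectory of root, largest first."""
    totals = {}
    with os.scandir(root) as entries:
        for entry in entries:
            if not entry.is_dir(follow_symlinks=False):
                continue  # loose files at the top level aren't counted
            total = 0
            for dirpath, _dirnames, filenames in os.walk(entry.path):
                for name in filenames:
                    path = os.path.join(dirpath, name)
                    if not os.path.islink(path):
                        total += os.path.getsize(path)
            totals[entry.name] = total
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)
```

Apparent size is what users think they stored; actual disk usage can differ with sparse files, compression, or dedup on the filer.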

A few small repairs...

Early yesterday morning, I got email from a server whose RAID 10 array was rebuilding. As far as I could tell, one of the SSDs had briefly gone offline, just long enough to force the controller to resync it.

Mildly disturbing. I told the team to make sure we had a cold spare ready to go, and to prep to swap it in if we saw anything else unusual before we had a chance to schedule a maintenance window.

Early this morning, two SSDs failed in that server, and neither of them was the one that blipped yesterday. That reeks of RAID controller failure, and since we didn’t have an identical controller with identical firmware on hand, the best bet was moving the whole damn thing to completely different hardware (more precisely, our shiny new VMware/Tegile cluster).

Fortunately it’s only half a terabyte, backed up at least three different ways, and everything’s on a 10G network, but pretty much all of engineering is twiddling their thumbs until it’s back, so “no pressure”.

Update

Start to finish, it took about 7 hours from the time we pulled the trigger on the move. A good chunk of that was spent checksumming the data and copying back the dozen or so files that were corrupted.

Now I’m just watching the rsync backup run with “-c” to make sure the corrupted data didn’t propagate. Honestly, it would be faster to blow away the destination and do a regular rsync, but then I’d have one less mostly-valid backup for N hours. I don’t really care how long it takes to run, and doing it this way reassures any management types who ask questions later.
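Checksumming a migrated copy against a known-good one is what separates "copied" from "copied correctly". A minimal Python sketch of that comparison, assuming both trees are mounted locally: hash every file under the known-good tree with SHA-256 and report relative paths that are missing or different in the copy. It illustrates the idea only; it is not how `rsync -c` works internally, and it ignores extra files that exist only in the copy.

```python
import hashlib
from pathlib import Path


def file_digest(path, chunk_size=1 << 20):
    """SHA-256 of a file, read in 1 MiB chunks so large files don't fill memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def mismatched_files(known_good, copy):
    """Relative paths under known_good that are missing from, or differ in, copy."""
    known_good, copy = Path(known_good), Path(copy)
    bad = []
    for src in known_good.rglob("*"):
        if not src.is_file():
            continue
        rel = src.relative_to(known_good)
        dst = copy / rel
        if not dst.is_file() or file_digest(src) != file_digest(dst):
            bad.append(rel.as_posix())
    return sorted(bad)
```

The output is exactly the "dozen or so files" list: each entry is a candidate to copy back from backup.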

Well, that was special...

I use Amazon’s Red Hat-based Linux distribution to run this site in their cloud, with Nginx as the main web server, and Lighttpd for CGI-ish things that are reverse-proxied by Nginx.

Amazon’s been pretty good at maintaining the RPMs, to the point that I don’t worry much about running “yum update” and rebooting at frequent intervals, although I do update my test instance before the real one.

So it was not pleasant to go through a typical update, surf to my site, and find the Lighttpd default page instead of my blog. Whoever packaged up the latest release had it overwrite /etc/rc.d/init.d/lighttpd, blowing away my configuration and replacing it with the default one. And it started up before Nginx, so it claimed the ports.

(and before you ask, I would have put my customizations in /etc/sysconfig/lighttpd if the script had been written in a way that allowed those particular changes; the workaround is going to be to copy it to a new name and disable the original)

Fortunately I keep all configs under source control, so I simply reverted that file and restarted everything, but it’s still annoying.
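The real damage there came from ordering: lighttpd came up first and grabbed the ports Nginx wanted. One cheap pre-flight check (a hypothetical Python sketch, not part of the setup described here) is to try binding the port yourself before starting the server that should own it; if the bind fails with EADDRINUSE, something else got there first. Ports below 1024 need root to bind, and the check is inherently racy, so treat it as a diagnostic rather than a lock.

```python
import errno
import socket


def port_in_use(port, host="0.0.0.0"):
    """Return True if some other socket already holds TCP `port` on `host`."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        try:
            s.bind((host, port))
        except OSError as e:
            if e.errno == errno.EADDRINUSE:
                return True
            raise  # e.g. EACCES for a privileged port when not running as root
    return False
```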

Unrelated, tomorrow there’ll be a double feature: terrible parody song lyrics with matching cheesecake!

My secret fetish is...

…laptop backups. I have four independent backups of my current laptop (2x Time Machine, 2x SuperDuper!), one of which is stored on a RAID 6 NAS that backs up to a second RAID 6 volume. Additionally, all source code and blog entries are under source control (Mercurial) and get pushed to a remote server; the blog also gets rsynced to two virtuals in different parts of the country. Which get backed up to the NAS.

I mention this because I just found two external drives containing full backups of my great-grand-previous laptop, plus the actual SSD pulled from it when the board failed. And then I checked the NAS, and found a disk image made from that SSD and a VMware virtual made from it.

For some reason, it doesn’t go over well at work when my first response to someone with a dead computer is, “how many hours ago was your last full backup?”

The good news is that the requirement that we encrypt laptops for people in sensitive positions provided the leverage we needed to get a PO signed for a centralized laptop backup service. Which proved itself pretty darn quickly.

Pity there’s still no cure for people who think it’s smart to conspicuously put their laptop bag into the trunk of their car when they arrive at a restaurant in the middle of Silicon Valley…

Vacation, all I ever wanted...

“Happiness is a warm server room.”

No, wait, that’s not right.

“Happiness is rooftop building maintenance that interrupts your server-room cooling, with portable chillers that just aren’t cutting it, followed by a surprise UPS failure that takes down all your servers. While you’re out of the country.”

Could be worse. Instead of canceling our visit to a temple flea market and a shrine festival this morning, it could have happened on Friday afternoon…

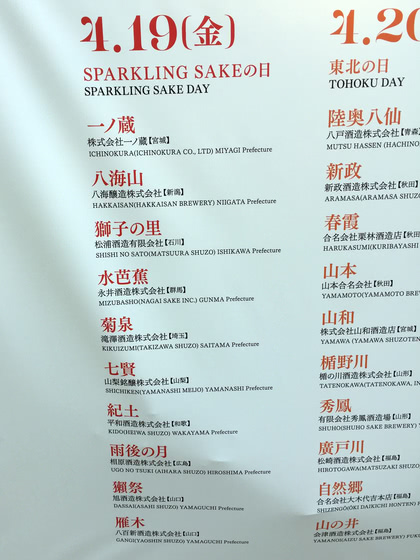

Craft Sake Week at Roppongi Hills is a brand-new event. We walked over from our hotel in Shiba, got there just before it opened at noon, chatted with the woman running the show (a charming New Zealander who wanted to make sure the limited foreign-language support she could offer was enough for people), and then spent the next four hours drinking glass after glass of really terrific saké.

Thanks to my sister’s well-honed talent for hitting it off with strangers anywhere in the world, we hooked up with a mother and daughter who were on the latter’s final Spring Break vacation before starting her Master’s program in Economics in London. After working our way through the available offerings, the four of us wandered over to the Kit-Kat Pairing Bar, which used “AI” (no actual AI were harmed by this marketing stunt) to pick the right combination of seasonal Kit-Kat and saké for each of us. Ally, the daughter, promptly cheated and ran through the questions again when she didn’t like the result. We approved.

All told, we spent over four hours drinking and chatting, leaving me drunk enough to feel it, and my far-less-massive sister well into wheeeee! territory. The walk back to the hotel involved much greeting of random pedestrians, a bit of stumbling and weaving, and some cat-herding on my part. The day ended early.

Next up, Saturday with penguins, gyoza, a shinkansen ride, and more gyoza (because our neighborhood tonkatsu curry udon joint was closed for the day).

Meanwhile, I watch our group’s Slack channel for news that the UPS is fixed and the folks on-site can bring up enough infrastructure for me to VPN in and do some sadly-necessary work.

Update

Surprisingly clean recovery, although the UPS required a visit from an electrician to get it back online again, delaying things enough that we got to the flea market a bit later in the day, which made for a somewhat sweatier shopping experience (highs up to 78°F this week in Kyoto, with humidity to match).

My knees, shins, calves, and right ankle are vigorously expressing their disapproval of all the walking, while my feet are just in a “we’ll get you for this later, dude” mood. All are responding nicely to a felbinac/menthol lotion I discovered on an earlier trip and picked up as soon as we got here.

Foodwise, we’ve struck out twice trying to visit the katsu curry udon place (pro tip: if you’re going to be closed for multiple days, don’t just put 本日 (“today”) on the sign apologizing for it and leave it up for several days).

Finding Tiger Gyoza Hall more than made up for it. The Pukkuri Gyoza in particular were so good that we were tempted to say “mata ashita” (“see you tomorrow”) on the way out. And, yes, my sister hit it off with two charming older men who spoke decent English and drank heavily, and it turned out one of them had lived in both Chicago and San Jose. Despite being named “thousand winters”, he confessed to preferring Silicon Valley’s weather to Chicago’s.

(but we’re still going to try for the katsu curry udon again…)