Sysadmin

30 characters is enough for anybody...

I’m pretty sure now that the root cause for my recent Atlassian clusterfail, which support had us work around by replacing hostnames with IP addresses in both setenv.sh and cluster.properties, was that the hostnames were too long.

Not the fully-qualified domain name, just the hostname component. We have a fairly verbose naming convention, where you can search for things like “jira-appserver” and see all environments, or “sandbox-jira-app” and see just the ones in a single cluster (both of these are useful for clustershell incantations). Since we’re doing an A/B cutover for this upgrade, the new sandbox machines also got the application version added to their hostnames, pushing them to 31 characters.

The official limit is 63, so either someone wasn’t reading the standard, or they’re internally using a double-byte encoding like UTF-16, possibly for Windows compatibility reasons.
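
If you want to audit a host list before the next cutover, here’s a minimal sketch. The 30-character threshold is only what I observed, not a documented Atlassian limit, and the hostnames and example.com domain are made up for illustration. It also reports the UTF-16 size of the hostname component, since that’s my pet theory: 31 ASCII characters come to 62 bytes, so the theory only works if something like a terminator pushes it past 63.

```python
# Sketch: flag hostnames whose first label exceeds a length threshold.
# OBSERVED_LIMIT is an assumption based on one failure, not a documented limit.

RFC_LABEL_LIMIT = 63   # per-label limit from the DNS standard
OBSERVED_LIMIT = 30    # assumption: longest hostname component that worked


def short_name(host):
    """Return the hostname component (first label) of a possibly fully-qualified name."""
    return host.split(".", 1)[0]


def too_long(hosts, limit=OBSERVED_LIMIT):
    """Return the hosts whose hostname component is longer than `limit` characters."""
    return [h for h in hosts if len(short_name(h)) > limit]


def utf16_bytes(host):
    """Size in bytes of the hostname component encoded as UTF-16 (no BOM, no terminator)."""
    return len(short_name(host).encode("utf-16-le"))


# Hypothetical hostnames following a verbose naming convention.
hosts = [
    "sandbox-jira-appserver-01.example.com",
    "sandbox-jira-appserver-v8-13-01.example.com",
]

for h in too_long(hosts):
    print(h, len(short_name(h)), "chars,", utf16_bytes(h), "bytes as UTF-16")
```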

(Komi-san wants a second opinion)

The annoying symptom for this failure is that a bunch of the core add-ons simply refuse to load, taking a full 5 minutes to time out during startup. This is also the way it fails if you leave out the allegedly-optional ehcache.* options in cluster.properties, even when upgrading the first node.

By the way, when upgrading a Jira cluster, the node that comes up first (which does the heavy lifting for the upgrade) must be active in the load-balancer that answers to the official base URL. When the other nodes come up, that’s the hostname they’ll contact to ask about the upgrade status, even though the cluster members are stored in the database, so they know how to reach the first node directly. If you don’t want users to try to log in during the upgrade, you have to tinker with your load-balancer config so that only the other nodes get directed to the upgraded one, while everyone else still gets a “go away” page.

(pictures are related, in the sense of “every time I shoot down one problem with this upgrade, three more appear out of nowhere”)

Jira Jungle

Current employer blessed us with a Friday off, extending the 3-day holiday weekend to 4. Naturally, both Jira and Confluence ran their log-scanners this morning and reported thousands of instances of never-before-seen potentially serious errors, and at the same time one of our contractors was horrified to discover what looked an awful lot like a potential admin-level hack against Jira. The hack wasn’t, and the log-scanner seems to have gone completely insane, for instance reporting hundreds of instances of three completely different Confluence errors on the same server, on the same log line, which matched precisely zero of the errors.

So that’s 2.5 hours of life I want back. Also, for the entire time, the Tanya The Evil OP song Jingo Jungle was running through my head, so I had to buy it on iTunes so that I can inflict it on the rest of the team the next time we have a surprise emergency Zoom debug session.

Fun fact...

When guaranteed-non-disruptive data-center power maintenance takes down your Confluence database server, application servers that survive and reconnect may end up speaking in tongues. Specifically, the “Other Macros” screen in edit mode ended up in a mix of English and Polish. A rolling restart fixed it.

FFS

How many words in this headline are not stupid?

Introducing Crowdsec: A Modernized, Collaborative Massively Multiplayer Firewall for Linux

Yeah, get back to me when you have data...

User: “Hey, my SQL queries are timing out on the replica!”

J: “Hmmm, that error says they’re so slow that the master is trying to clean up all the rows that have changed since the query started running.”

U: “Can we try it on the primary?”

J: “No, because 1) Production, and 2) error is specific to replicas.”

U: (CCs Partner)

Partner: “Our service that queries your DB, sends the results over a VPN tunnel, and ingests them into our system is working fine, and doesn’t show any delays or network issues.”

J: “That’s what you said last time, and the problem ‘just went away’ the next night.”

P: “Try running this specific query locally and tell me how it works.”

J: (examines 450-line SQL query, shrugs, runs it) “2.5 minutes.”

P: “Hmm, works here with ‘limit 10000’ and fails with ‘limit 50000’. Well, nothing we can do on our end! Shall I set up a call with our Engineering team?”

J: “Wait, what was the runtime for that query on your end before it started timing out this week? For that matter, what was the runtime when it worked with ‘limit 10000’?”

[update]

P: “Here’s a chart showing it bang-on at almost that exact same runtime for weeks, until it started timing out every single time on Friday night.”

J: “Okay, let us know when you’ve fixed that. Just for fun, try changing the query to just return the count of rows instead of the ~36 MB of data. I, um, have a hunch.”

[update]

U: “9.6 seconds.”

J: “So, you can successfully submit obnoxious queries through Partner’s interface, as long as they don’t return any significant quantity of data. Hmmm, what does that sound like?”

[update]

(long meeting full of fingerpointing with no indication of how it started failing 100% of the time like throwing a switch)

J: “I have finally managed to reproduce the failure locally, which means I’m willing to try a small config-file change to work around the problem. Reminder: we still have no hint as to the actual cause.”

…

[you are here]

Random Thoughts, Time Machine edition

Dear Amazon,

You know the drill:

Hmmm, have they done an isekai series about ending up in another world as a monster-girl samurai psychologist yet?

Fun Mac Fact

If a Time Machine backup is interrupted for any reason, it may leave behind an unkillable backupd process. If this happens, even automatic local snapshots will stop working until you reboot. And by “reboot” I mean power-cycle, because MacOS doesn’t know what to do about an unkillable system process; it kills off everything it can and then just sits there, helpless.

Part of the problem is that the menubar indicator that’s supposed to show when a backup is active does not include the “preparing” or “stopping” stages, so if you were to, say, close your laptop lid during those stages, or change your network configuration by starting a VPN connection or switching from wired to wireless, you could trigger the problem.

For more fun, if your Time Machine backups are on a NAS, they’re stored in a disk image, which needs to be fscked periodically (part of the lengthy “verifying” stage), and must be fscked after any error. And that can take hours. And if it fails, the only solution Apple offers is to destroy your entire backup history and start over, potentially leaving you with no backups at all until the first new one completes, which, again, takes hours, especially with the default “run really slow in the background” setting enabled.

Pro tip:

sudo sysctl debug.lowpri_throttle_enabled=0

There are instructions (1, 2, but none from Apple) for how to manually fsck a TM image (possibly multiple times) and correctly mark it as usable again, a process that has the potential to take days.

And that’s why I keep two separate SuperDuper backups of my laptop in addition to the two separate TM backup drives (the “belt, suspenders, bungee cords, and super-glue” approach). Time Machine is far too fragile to rely on for anything but quick single-file restores, although it can be useful for migrating to replacement hardware that won’t boot a cloned disk.

In the standard “you’re holding it wrong” Apple way, you can’t just turn on automatic local snapshots; you have to have at least one external volume configured for automatic TM backups. In fact, the manpage seems to claim that you can’t make local snapshots at all unless you’ve got at least one external TM backup. This suggests that the optimum strategy is to use SuperDuper every day to have bootable full backups, set up TM without automatic backups, and then set up a cron job to create and manage local snapshots. And manually kick off TM backups every week or so when you’re sure you won’t need to use your computer for a few hours.
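
For the cron-job part, here’s a rough sketch of what I have in mind, not something I’ve battle-tested. It assumes a recent macOS where tmutil has the localsnapshot, listlocalsnapshotdates, and deletelocalsnapshots verbs, that the job has whatever privileges tmutil wants, and that local snapshots work at all in your setup (see the manpage caveat above). The function names and the keep-the-newest-24 policy are my own invention.

```python
# Sketch: create a local Time Machine snapshot and prune old ones.
# Assumes macOS tmutil with the localsnapshot / listlocalsnapshotdates /
# deletelocalsnapshots verbs; the retention count is an arbitrary choice.
import re
import subprocess

# Snapshot dates look like 2020-05-20-101500 (YYYY-MM-DD-HHMMSS).
SNAPSHOT_DATE = re.compile(r"\d{4}-\d{2}-\d{2}-\d{6}")


def parse_snapshot_dates(tmutil_output):
    """Extract the unique snapshot date strings from tmutil output, oldest first."""
    return sorted(set(SNAPSHOT_DATE.findall(tmutil_output)))


def dates_to_delete(dates, keep=24):
    """Return the snapshot dates to prune, keeping only the newest `keep`."""
    ordered = sorted(dates)
    return ordered[:-keep] if keep > 0 else ordered


def rotate_snapshots(keep=24):
    """Take a new local snapshot, then delete all but the newest `keep`."""
    subprocess.run(["tmutil", "localsnapshot"], check=True)
    listing = subprocess.run(
        ["tmutil", "listlocalsnapshotdates"],
        check=True, capture_output=True, text=True,
    ).stdout
    for date in dates_to_delete(parse_snapshot_dates(listing), keep):
        subprocess.run(["tmutil", "deletelocalsnapshots", date], check=True)
```

Calling rotate_snapshots() from an hourly cron entry would approximate what the automatic snapshots do, minus the dependency on backupd being in a good mood. The date format sorts correctly as plain text, which is why the pruning logic gets away with sorted().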

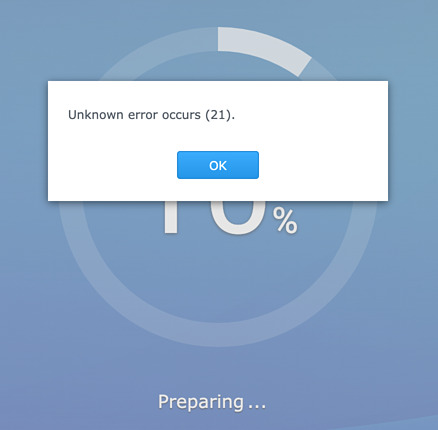

Dear Synology,

When installing a system update on one of your NAS products, having it fail with the following message is “less than encouraging”:

Re-downloading the DSM update (6.2.3-25426) and trying again didn’t help.

On the bright side, it’s still up and running.

Unrelated,

Somehow I missed an episode of Good Eats: Reloaded, so I got to watch two back-to-back yesterday: pot roast and oatmeal. I never tried the much-reviled pot roast recipe from the original episode, and Alton would be horrified to discover that I actually like single-serving instant oatmeal.

What stuck out for me was that the reloaded oatmeal recipes (1, 2) are tarted up with those trendiest of grains, quinoa and chia. The reloaded granola recipe just sounds unpleasant. The new pot roast looks decent, but I’m not going to run out and try it when it’s hot out.

Perhaps when the rainy season starts again in the fall (although I’m actually still getting some light rain occasionally, mostly at night).

Metaphor Alert!

I went to check on the status of Corona-chan restrictions in Monterey County, only to discover that none of the authoritative DNS servers for www.co.monterey.ca.us are responding, and the records have timed out everywhere. Sounds like a virus to me!

Stage 2.2 update

Yesterday, the county agreed to beg the governor for permission to enter phase 2 of stage 2. The state has “acknowledged receipt of the Form”.

Should they approve it, dine-in restaurants, full-service car washes, shopping malls, and pet grooming will once again be legal. Not sure about haircuts, since there’s industry-specific advice that suggests yes, but they fall into a category that’s still listed as “phase 3”.

On the bright side, residential cleaning services will be permitted to reopen, which means I can have my already-pretty-damn-clean house thoroughly scrubbed.

Hopefully my dentist had the financial resources to ride this out and can reopen. Soon.

By golly, haircuts are in stage 2.2 now

…assuming salons and barbershops meet the detailed requirements to reopen, some of which assume the owner/operator has plenty of extra money to upgrade the facilities and purchase a large stock of disposable everythings. And that they can get stylists to come back to work despite making more on unemployment thanks to the extra-goodies laws.

(in many cases, stylists are basically independent operators who rent their stations, making them ineligible for unemployment benefits, but California mostly outlawed freelancers this year, so I’m not actually sure what their status is any more)

Creeping featurism, Jira plugin edition...

So there’s a third-party plugin for Jira called “Git Integration for Jira”, whose description claims that all it does is query your GitLab server and display links to the commits that reference the current Jira issue.

Nowhere does it mention that it also defaults to sending warning emails to addresses mentioned in the commits, even if they don’t map to any of your Jira users.

Like, say, the FreeSWITCH mailing list.

The initial helpdesk ticket wasn’t terribly useful in figuring this out, since it consisted of a screenshot of someone’s inbox that didn’t include their email address or even a full subject line, much less something useful like, say, complete email headers. It took three rounds with one of our local devs to elicit the keywords “git integration plugin”, “project key FS”, and “smart commits”.

That last bit is what let me know I was looking at the correct configuration screen, since it’s right above the feature to randomly send email to addresses scraped from the git commit.