Fun

GenAI Girl Trouble

This week’s SwarmUI Discord theme is food. Z Image Turbo nailed the concept on the first try, but I made a few more until I had one where she was carrying the pepperoni in both hands.

(the first time I tried to upscale this, I got a bunch of extra extra-tiny people scattered around the image. Turned out that the “refiner do tiling” option was on (legacy of a more memory-intensive model), and it was generating additional people on each tile, at the appropriate scale; first time I’ve seen it do something like that)

Captions, on the other hand…

The “New Yorker” look was easy, given the artist name and a style description, but getting every word right remains a challenge for diffusion models, even when you avoid rare words. Part of the problem is that for all its virtues, the turbo part of Z Image Turbo means that it does 90% of the work in the first pass, which doesn’t leave as much room for variation and refinement. It took 10 tries to get 2 correct captions, one of which was rendered even smaller than this one.

The WWWA Central Computer says “get off my lawn!”

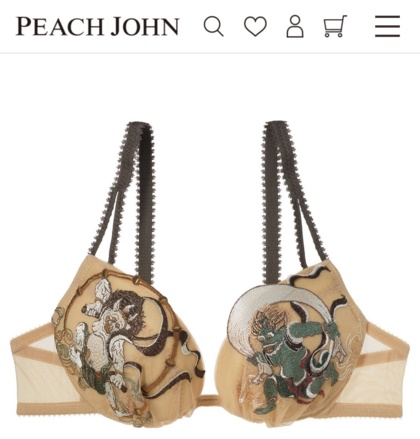

Dirty Pair 40th anniversary merchandise.

Global warming coming down

We were promised hours of snowfall starting at 1PM, so I made an early-morning run down to Jungle Jim’s for some holiday food shopping. I got out of there around 10:45AM, at which point it was just starting to sleet.

Getting home took twice as long as the trip out, because there were already several accidents on the highway, despite the fact that the roads were still clear and there was no ice.

I made it home as it started to snow just enough to require my windshield wipers, and soon after I got the car unloaded, the sky unloaded.

Boy, bet those Amazon drivers wish they’d delivered my packages yesterday as promised, rather than leaving them on the truck and taking them back to the depot for the night…

Peternal

EXIF is such a mess of a standard that the best way to work with it in Python is a thin wrapper around the exiftool command.

Which is a Perl script.
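For the curious, the "thin wrapper" idea fits in a dozen lines. This is a minimal sketch, not any particular published wrapper: it assumes exiftool is on the PATH, and the function names and the injectable `run` parameter are made up for illustration. It relies on exiftool's `-json` option, which prints one JSON object per file with a `SourceFile` key.

```python
import json
import subprocess


def build_exiftool_cmd(paths, tags=()):
    """Build the exiftool command line; each tag becomes a -TAG argument."""
    cmd = ["exiftool", "-json"]
    cmd += [f"-{tag}" for tag in tags]
    cmd += list(paths)
    return cmd


def read_metadata(paths, tags=(), run=subprocess.run):
    """Return {source_file: metadata_dict} for the given files.

    `run` is a hypothetical hook so the parsing can be exercised
    without exiftool installed; by default it is subprocess.run.
    """
    proc = run(
        build_exiftool_cmd(paths, tags),
        capture_output=True,
        text=True,
        check=True,
    )
    return {entry["SourceFile"]: entry for entry in json.loads(proc.stdout)}
```

Calling `read_metadata(["photo.jpg"], tags=["ImageDescription"])` would then return whatever exiftool reports for that tag, keyed by filename.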

Coffee Dump

James Hoffmann explores the depths.

The Complete Winter Season Preview!

- Frieren 2: Yes.

(anything else, I’d first have to watch a previous season that I skipped, and I don’t know if the hot adorableness of Torture Tortura is enough to compensate for Shouty Princess Shouting)

Dear PILs,

Whoever thought it was a good idea for Pillow to silently strip metadata should be pixelated with extreme prejudice.

Also, could ya maybe flesh out the documentation a bit? I would not know that you could add exif=somedict or pnginfo=somedict arguments to Image.save() if I hadn’t googled examples. Just knowing about the existence of those arguments would have helped explain why one of the two save paths I was using was metadata-free.
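A minimal sketch of what carrying metadata through a save looks like, assuming a reasonably recent Pillow. The helper names are invented for this example. One detail worth hedging: as far as the Pillow docs go, `pnginfo=` wants a `PngInfo` object rather than a plain dict, so the dict gets copied into one here, while `exif=` accepts an `Image.Exif` object or raw bytes.

```python
import io

from PIL import Image
from PIL.PngImagePlugin import PngInfo


def save_png_with_text(img, text):
    """Return PNG bytes with each key/value written as a text chunk."""
    info = PngInfo()
    for key, value in text.items():
        info.add_text(key, str(value))
    buf = io.BytesIO()
    img.save(buf, format="PNG", pnginfo=info)
    return buf.getvalue()


def save_jpeg_with_exif(img, exif):
    """Return JPEG bytes with the given EXIF data passed explicitly."""
    buf = io.BytesIO()
    img.save(buf, format="JPEG", exif=exif)
    return buf.getvalue()
```

When re-saving an existing file, `img.getexif()` and (for PNGs) `img.text` are the usual places to pick up the metadata that would otherwise be dropped.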

“I don’t see you in our system…”

Called the home-security company to have them come out and install new sensors on the replacement doors. After suffering through a really annoying hold system, the woman who answered had no record of my account. Until I mentioned the name of the company, at which point it turned out they’d been acquired, and their phone number was transferred over to a new central dispatch system on Friday. After she logged into the correct system, she… copied down all my information by hand, because that didn’t work either. I’m supposed to get a callback, eventually.

Really doesn’t make me optimistic about their responsiveness to alarm calls.

“I don’t see you in our system…” 2

Due to a recent acquisition that should be all over the news for the business we’re in, my team is being added to their legacy IT systems; email, VPN, Jira/Confluence, etc. The Belfast team got all their invites Monday, but I got nothing. I figured they were going by region, and I pretty much am my region, so I didn’t worry about it.

The good news is that I finally got my first notification about access.

The bad news is that it was for Teams.

“so much glitter”

My Mac suddenly decided that I couldn’t open certain images and PDF files, claiming a permission problem. The well-written error message even told me how to fix it, which of course didn’t work, because it had misdiagnosed the problem. I could open the files, just not with Preview.app.

It would open 90+% of files just fine, but consistently fail on others. TL/DR: Preview.app leaks resources over time, and I’d been keeping it open because otherwise it loses my place in the PDF file I’ve been reading.

It’s not supposed to do that, either.

(in fairness, I’ve opened and closed thousands of images over the past week, often a few hundred at a time; why they wrote Preview to open all of them simultaneously, I can’t imagine, but they did. This is one of the reasons I “vibe coded” deathmatch)

Unrelated,

I am convinced that every air-fryer manufacturer staffs their cooking-guide team with people who have never seen a pizza roll. One of my Black Cyber Fortnight purchases was a Ninja Crispi Pro, and the recommended time and temperature for a bag of pizza rolls is 10-15 minutes at 450°F.

I have never seen a pizza roll survive more than 8 minutes at 375°F without bursting open and spilling out its precious lava. 10-15 at 450 is crispy-critter territory.

(okay, this was not the direction I expected Z to go with this, but I find myself unable to complain)

(discussing the previous pic on the Discord eventually led to a non-serious idea about an in-person gathering to make late-night drunk snacks)

Finally!

The key to unlocking proper pizza rolls in Z was to avoid any word that could be mistaken for Asian cuisine. “roll” means egg roll, “dumpling” means gyoza or shumai, etc; “deep-fried pillow-shaped miniature pastry” dodged the over-training bullet.

(yes, “chicken and dumpling soup” produces a thin broth with gyoza and sliced chicken floating in it; harrumph)

Feel the Uravity

(not only does she defy gravity, but also Internet Wisdom about the plausibility of the boobs-and-butt pose)

Sick weekend

Literally, in a “let’s not ask genai to illustrate that” way. Feeling much better now, but I lost nine pounds in a day and a half, and I would not recommend this weight-loss plan.

Streams: Crossed

The Kpocalypse Kometh!

KPop Demon Hunters to Ring in Dick Clark’s New Year’s Rockin’ Eve

No, I will not be watching. Not because I bounced hard on the obnoxious trailer for KDH, which I did, but because I’d rather scrub the toilets than “ring in the new year” with a live TV broadcast. My traditional New Year’s festivities include ordering delivery pizza, tipping heavily, and avoiding all human contact. Admittedly, it’s the same as my plan for most holidays.

(no kpop artists or undead pop-culture icons were harmed in the faprication of this genai image)

Dear Amazon,

What’s the customer-support code for “driver too lazy or stupid to walk 20 feet to the front porch”? Trick question, I know, because there’s no button for reporting on the quality of a delivery. If I navigate through “customer support” I eventually get redirected to a 20-minute-wait “chat” screen, but the odds that an English-speaking human being will respond are… “less than promising”.

Thesis

“90% of improved coffee-making technology is just baristas pretending they’re Tom Cruise in the movie Cocktail”

How to reduce memory use in Discord

I never knew that Discord was such a memory hog, to the point that it now auto-restarts when it gets above 4GB. I just got in the habit of exiting it after every session because writing a desktop application in JavaScript produces a terrible user experience and is guaranteed to come with giant gaping security holes.

And you don’t want giant gaping holes exposed on the Internet.

Related,

Z-Image Turbo is so popular that even the amputee fetishists are rushing to make LoRAs catering to their specific sub-kinks. Kinda wish they weren’t, actually.

(people are raving about how easy it is to make LoRAs for ZIT, but most of the SFW styles seem to just be adding keywords to styles the model can already produce. I didn’t use any LoRAs for the above images, just long style descriptions followed by relatively short prompts (see mouseover comments))

I can haz doors

On Thursday, they finished the last door. On Friday, I wrote a check to pay off the doors. In the spring, I’ll have them come back and start on the windows. I’m very happy with the quality of work done by Wayne Overhead Doors, and the quality of the Provia doors.

Wild Last Boss: not bad so far

I figured the “reborn as my OP RPG character in the distant future of the game world” genre was played out, so I didn’t give this a chance. Fortunately someone recommended it recently, and as soon as Our Hero became Our Heroine, her voice won me over. While she’s quite versatile, for this role she’s using her Dog Days Princess Leon voice, which I’m quite fond of.

Her expository sidekick is actually the first named role for that actress.

Catgirl Output

In Z Image Turbo, short prompts tend to produce very similar images, to the point that something like “pretty young woman with slender figure” will produce 100 images of basically the same 19-year-old Chinese fashion model with obvious plastic surgery on her face.

At the same time, long detailed physical descriptions may produce too much diversity, to the point where a request for young, healthy, attractive women will occasionally produce fat ugly broads in their fifties. The elaborate dynamicprompts set that I built to shove Qwen Image out of its comfort zone is too strong for ZIT; in my batch of 5,000 SFnal cheesecakes, nearly 3% were unflatteringly heavyset. Another 3% were thicc and tasty, so it wasn’t all bad.

When I was illustrating the previous post, though, I started with “highly-detailed cartoon. six sexy half-naked catgirls on the bridge of a starship” and they came out completely nude with Barbie-doll genitals and breasts tipped with pink hearts. Adding “they are wearing cyberpunk outfits” shifted to a 3D-rendered realistic style and replaced real cat-ears with cat-ear headphones.

I went back to the original prompt and tried to dress them a little, adding “They are wearing lace bras, lace-topped stockings, lace panties, and long lace gloves”; this kept the real ears, but switched to photographic style. So I changed it to “They are wearing sexy lingerie” and it not only shifted back to 2D, it went with flat-shaded anime style.

I expect better style consistency when they release the full Z Image base model; Turbo is ridiculously fast, but there’s a clear tradeoff. In the meantime, I’ve converted the data from this repo to YAML so I can use its lengthy descriptions of many artist styles:

# flat list of every style description
(echo "styles:";\
sed -e '1 s/^.*=//' artist_prompts.js | jq -r '.[]|.[]' |
sed -e 's/^/ - /' ) > artist-styles.yaml

# one key per artist (spaces turned into underscores), each with its list of styles
sed -e '1 s/^.*=//' artist_prompts.js |
jq -r '. | to_entries[] | "\(.key): \(.value)"' | sed -e \
's/^/\n/' -e 's/: /:\n - /' -e 's/\["//' -e 's/"]//' \
-e 's/","/\n - /g' | sed -e '/:/s/ /_/g' > artists.yaml
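The second pipeline can also be sketched in Python, which sidesteps the quoting gymnastics. This is an illustration under assumptions read off the sed/jq commands above: that artist_prompts.js is a single assignment whose right-hand side is a JSON object mapping artist names to lists of style strings. The function names are made up, and unlike the shell version it quotes keys and values (JSON strings are valid YAML double-quoted scalars) and strips a trailing semicolon if one is present.

```python
import json
import re


def parse_artist_js(text):
    """Drop everything through the last '=' on line 1, then parse as JSON."""
    first, sep, rest = text.partition("\n")
    first = re.sub(r"^.*=", "", first)
    return json.loads((first + sep + rest).strip().rstrip(";"))


def to_yaml(data):
    """Emit one YAML key per artist (spaces -> underscores) with a style list."""
    lines = []
    for artist, styles in data.items():
        lines.append(f"{json.dumps(artist.replace(' ', '_'), ensure_ascii=False)}:")
        lines.extend(f"  - {json.dumps(s, ensure_ascii=False)}" for s in styles)
        lines.append("")
    return "\n".join(lines)
```

Usage would be along the lines of `to_yaml(parse_artist_js(open("artist_prompts.js").read()))`, written out to artists.yaml.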

Doordash knows

I was quite sick on Friday, so I didn’t have any energy to cook on Saturday, and ordered dinner via Doordash. The “top dasher” had a clearly female name, but the driver who showed up at my door was a man in his mid-thirties who spoke about ten words of broken English.

Checking the site, “she” has over 13,000 successful deliveries. You can’t convince me that the company doesn’t know how many illegals are sharing accounts.

A little rusty at this snow thing...

Cleats don’t fail me now!

Went out to shovel 4 inches of snow off the driveway. Made it halfway down the hill before realizing my cleats had rusted out and snapped, and I had to walk carefully as I cleared a path with the Snowcaster and salted the ground behind me. I finished clearing the driveway after the new cleats arrived this morning, because they’ve moved the door work from Friday to Thursday.

Fortunately the sun and wind combined to mostly clear the rest of the driveway, so it went quick, but I had to at least clear off the pavers going around the house, so there’s no risk of ice ruining their day. (ICE won’t ruin their day because all the workers are Americans)

(Z don’t know grawlix, and didn’t use the emoji I included in the prompt, but it still managed to use relevant emoji)

(also, TIL grawlix is the word for pre-emoji cartoon swearing)

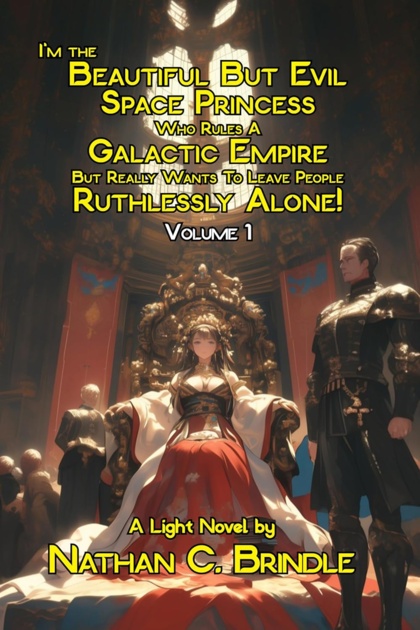

Catering to a certain audience

I’m The Beautiful But Evil Space Princess Who Rules A Galactic Empire But Really Wants To Leave People Ruthlessly Alone!

AT&T knows their audience, too

Their fiber Internet service is now ready for installs, so they sent a pair of pretty girls around the neighborhood to make the offer. Unfortunately, I’d just finished clearing the snow and ice for tomorrow, so I had no energy for them, and was much more interested in a hot shower.

Which I’d have gotten arrested for inviting them to join. Maybe they were 18.

Dear Apple,

Take advantage of the departure of your “UI Design Chief” by releasing OS updates that revert Liquid Ass and Make Text Readable Again.

Spoiler alert

As expected, the “revised edition” of Freelancers of Neptune does not improve the story in any way. I’d much rather see Jacob Holo work on book 2, and the nicest thing I can say about this book is that he clearly didn’t spend much time on it, letting the co-author airdrop in her tab-a-into-slot-b erotica. I didn’t expect to finish it, and I did not, in fact, finish it.

Speaking of cheesy porn…

Does it count if you lose your virginity to an android? is next season’s lesbian-slave-waifu show. Sadly, the trailer shows that even women in their late twenties can freak out like a teenage anime boy when confronted with a nekkid chick in her bed. From the studio that brought us Yandere Dark Elf and Chuhai Lips, which is not the flex they might think it is.

Every dog eventually gets his Waymo

(although I’m reminded of the story about the Texas researcher who was testing how people responded to animals on the road, and when he put out a realistic snake, the first person to drive by was a state trooper who not only swerved to hit the snake, but backed up over it again, then got out of his car and drew his sidearm to put a bullet in it)

Snow day!

Last door not installed Monday, because half the crew was still recovering from Thanksgiving. That’s my conclusion, anyway, based on the fact that only one of the three-man team was available, and there was no way he was going to move a pre-assembled sliding door to the back of my house and hold it in place.

Now there’s snow on the ground and a cold front moving in, so by the time they show up on Friday, it will be about 15°F, and the only thing that’s going to get rid of that snow is, sadly, me.

swarmctl

I’ve sandblasted the rough edges off of my Python script for driving SwarmUI via its REST API. It’s now up on GitHub in my catchall repo as sui.py (name shortened because I got tired of typing it). I used it to generate 5,000 vertical 1080p sfnal pinups with the new Z Image Turbo model, as well as upscale some of the better ones. It also slices, dices, and makes julienne fries, or it will when I’ve worked through the TODO list.
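For anyone wondering what "driving SwarmUI via its REST API" boils down to, here is a rough sketch. It is not sui.py, and it is built on assumptions that should be checked against the SwarmUI API docs: that the server listens on localhost:7801, that `/API/GetNewSession` returns a `session_id`, and that `/API/GenerateText2Image` accepts `prompt`, `model`, `images`, and size parameters and returns a list under `images`. The `post` parameter is a hypothetical hook for swapping in a fake transport.

```python
import json
import urllib.request


def post_json(url, payload):
    """POST a JSON body and decode the JSON response."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def generate(prompt, model, base_url="http://localhost:7801",
             post=post_json, **params):
    """Open a session, request one image, and return the reported image paths.

    Endpoint and field names are assumptions about the SwarmUI API.
    """
    session = post(f"{base_url}/API/GetNewSession", {})["session_id"]
    payload = {
        "session_id": session,
        "images": 1,
        "prompt": prompt,
        "model": model,
        **params,
    }
    return post(f"{base_url}/API/GenerateText2Image", payload).get("images", [])
```

A vertical 1080p request would look something like `generate(prompt, model_name, width=1080, height=1920)`, with the model name being whatever the local install calls it.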

(without a style LoRA, these don’t necessarily hit the “40s retro-sf” look, but Z is really good at detailed backgrounds and pulling things out of complicated prompts; this set averaged 195 words/prompt)

Dear Amazon,

According to you, the things I most wanted Black Friday and Cyber Monday deals on were coffee grinders (26, with prices ranging from “burger and fries” to “car payment”) and phone/watch/earphone multi-chargers (49, ranging from “small delivery pizza” to “dinner date at nice restaurant”). Also about half a dozen fermenting jugs in different sizes, and four replacement USB boards for repairing Android devices I’ve never owned.

Dear Amazon Marketing Droid Soon To Be Replaced By Generic GenAI,

Cyber Monday is perhaps not the best day to sign someone up for marketing text messages without their permission, requiring them to opt-out. Please take the person responsible for this decision out behind the barn, insert an electric cattle prod to its full wide-base-to-prevent-loss depth, and set the power to full.

I mean, come on:

2025/12/1 18:40 EST:

Amazon: Starting today, you’ll now get exclusive Amazon offers from 48092, just in time for Cyber Monday deals. Reply STOP to 48092 to opt out.

(yeah, send out that “Cyber Monday special” when there are only five hours left in the day…)

Dear Apple,

Fuck your “News” feed. I use the Stocks widget to track stocks, not headlines from Mashable (whatever that is), that open in your subscription “News” app. Guess that’s one more piece of functionality you’ve buggered up completely on the Mac in your quest to monetize every pixel.

Dear Japan,

Never change. This time I mean it.

That feeling when…

…you realize that phone is older than her mom.

🎶 Thunder and lightning… 🎶

You won’t live through the first date, but it will be worth it.

(so, how has your X “for you” feed been today…)

Reasons Z-Image Turbo is better than Qwen Image

- speed; like, by a lot

- thumbs and big toes reliably on correct side

- wider variety of faces and body types

- extra, missing, and melty limbs quite rare

- fewer EXTREME facial expressions

- less weird shit in general

- less censored (better than Barbie but not specifically trained on genitals)

- apparently quite easy to make LoRAs for

- lean, running on older hardware with less VRAM

- Even Better Models On The Way

To clarify that last one, “turbo” models are generally very fast but less capable, converging in a small number of steps but lacking the detail and breadth of the corresponding full model. ZI’s turbo model is better than most full models, so if the relationship holds true, ZI-full should be unbelievably awesome.

So far, the only negative I’ve run into is that if you don’t specify the image style clearly, it will default to “photograph with terrible skin tones”. I actually get better “photographic” images by just asking for “illustration”, although that tag will also generate flat animation, 3D animation, anime, cartoons, and the occasional painting.

Tanya, catgirls, and more fun with Z

Tanya 2, finally announced

For sometime in 2026.

Freelancers of Neptune, “revised”

Jacob Holo’s Freelancers of Neptune put a sexy catgirl on the cover (previously), which I appreciated, and rather emphatically made her not a haremette, which was also refreshing. Not only did she drive the hero crazy with her antics, she already had a boyfriend.

So he just released a “second edition” with a co-author who “added the spice” and turned it into something different:

Just remembered why I stopped reading Louie

TL/DR: in book 3 (my original notes), Merrill comes up with an incredibly stupid, guaranteed-to-backfire plan. Sure, it sets up Wacky Hijinks(TM), but the outcome was entirely predictable.

To get Rescued Runaway Young Heiress out of an arranged marriage, Louie pretends to her father that he “claimed his reward” after rescuing her from pimps/slavers. Merrill’s idea was that the threat of scandal would risk her reputation and call off the marriage, but she didn’t think it through, and nobody else spotted the flaw: her Daddy was marrying her off to advance his own position, and Louie is one of the most eligible bachelors in the kingdom.

He is, after all, the adopted son of one of the richest and most powerful wizards in the world, who’s also a personal friend and old adventuring companion of the king and the high priestess. Hell, the entire Thieves Guild is trying to figure out who his birth parents are, because he’s potentially that important. Whoopsie!

Destination: international waters

If we deport a convicted felon, and their home country won’t take them, I’m fine with just dropping them off near that country. Sink or swim, not our problem. If NGOs actually care, they can buy a fleet of patrol boats to fish them out of the water. If their home country has no seaports, or their crimes involved children, airdrop them.

More fun with Z

I fed a fresh batch of dynamic SF cheesecake prompts into my almost-ready-for-GitHub SwarmUI CLI to see what Z Image Turbo would do, and since it’s so much faster than Qwen, I’ve got just under a thousand images to play with already. Deathmatching them down to a reasonable number will be difficult, because the hit rate is so much better than Qwen.

I’ll limit myself to just a few for now:

(fingers are pretty good, but oversized heads are a risk…)