Fun

Amazon nickel-and-diming for peanuts

Remember that three-week-old order that was never shipped? Yeah, USPS reports that it still hasn’t shipped, and while Amazon’s fully automated, AI-degraded customer support “chat” system believes whatever data is in the system, it was finally willing to process a refund Friday… over the next 24 hours or so. So they’ve had my money for most of a month, which, multiplied by the number of unhappy customers who were fucked over for the holidays, adds up to a tidy sum.

Perhaps it’s just more profitable than “shipping goods to customers”.

(and, yes, literal peanuts were involved; from the other recent negative reviews of the marketplace dealer, it looks like “Virginia Diner” took December off)

(as a special bonus, I had to manually search for it on the order page, because the pretense that it had “shipped” (which is an obvious misinterpretation of the USPS status code) kept it from appearing in the convenient “Not Yet Shipped” tab, and absent any action on my part, it would eventually just end up at the end of my order history…)

Chinese fabrication

Speaking of Amazon, I bought an espresso tamping station, and it arrived Friday. Take a good look at the pictures, and you’ll see that there are no photographs of the product. They’re all 3D renders with composited accessories. The wood frame was fine, but even with rubber feet on the bottom it didn’t sit quite level, and more importantly, the black acrylic insert was CNC’d ever-so-slightly oversized for the CNC’d wood.

So the worker simply slathered the bottom with glue and pounded it into place, which lasted long enough to get it into the box and ship it. Looking at the reviews, I’m not the only one who discovered this quality workmanship when the glue failed and the center of the insert bent up. I sanded down the edges until it fit, then used gap-filling superglue and a bunch of woodworking clamps to reattach it.

So, good design (swiped from another company), shoddy assembly, and zero QA. I’d have bought a better one for twice the price, but this was the only one that would arrive by this weekend…

(it was surprisingly difficult to get ZIT to grasp the concept of “pounding a square peg into a round hole”; if it made the peg at all, it was almost always undersized, and at least half the time the hammer was held upright, pounding with the base of the grip)

Random test image

As I’ve added functionality to my SwarmUI CLI, it’s gotten kind of crufty. In particular, I had two different methods of creating images in JPG format: server-side during generation, and client-side batch conversion. The problem with the first one is that I’d also added client-side cropping and unsharp-masking, which meant re-encoding an already-compressed JPG and adding another round of compression artifacts.

So I gathered up the code for cropping, resizing, sharpening, and format conversion, abstracted them into a “process” class that applied them in a well-defined order, and set the server to always generate PNG. Took a while to get everything working, but it makes it possible to clean up the code and make all the processing options available to multiple sub-commands.
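The CLI’s own “process” class isn’t shown here, so this is only a hypothetical shell analogue of the idea, assuming ImageMagick 7’s `magick` command does the pixel work. Options can arrive in any order, but they are always emitted as crop, then resize, then unsharp mask, and the lossless PNG from the server is encoded to JPG exactly once at the end. The function names, the center-gravity crop, and the quality setting are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical sketch, not the actual SwarmUI CLI code: gather processing
# options in any order, then emit ImageMagick arguments in one fixed order.

# Print one ImageMagick argument per line, always in the order
# crop -> resize -> sharpen, regardless of the order the options were given.
build_process_args() {
  local crop="" resize="" sharpen=""
  while [ "$#" -gt 0 ]; do
    # Every option takes exactly one value.
    if [ "$#" -lt 2 ]; then
      echo "missing value for $1" >&2
      return 1
    fi
    case "$1" in
      --crop)    crop="$2" ;;
      --resize)  resize="$2" ;;
      --sharpen) sharpen="$2" ;;
      *) echo "unknown option: $1" >&2; return 1 ;;
    esac
    shift 2
  done
  if [ -n "$crop" ]; then
    # Assumption: crop geometry is taken relative to the image center.
    printf '%s\n' -gravity center -crop "$crop" +repage
  fi
  if [ -n "$resize" ]; then
    printf '%s\n' -resize "$resize"
  fi
  if [ -n "$sharpen" ]; then
    printf '%s\n' -unsharp "$sharpen"
  fi
}

# Apply the ordered steps to a lossless PNG and encode to JPG only once,
# so cropping and sharpening never operate on already-compressed pixels.
process_image() {
  local in="$1" out="$2" arglist line
  shift 2
  arglist="$(build_process_args "$@")" || return 1
  local args=()
  if [ -n "$arglist" ]; then
    while IFS= read -r line; do
      args+=("$line")
    done <<< "$arglist"
  fi
  # Assumption: quality 92 is an arbitrary illustrative JPG setting.
  magick "$in" "${args[@]}" -quality 92 "$out"
}
```

So `process_image raw.png out.jpg --sharpen 0x1 --crop 1024x1024+0+0` and the same call with the options swapped produce identical output, because the order is decided in one place rather than by whichever sub-command happens to be running.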

(I needed a quick regression-test image to confirm that everything worked, so I used the very simple prompt “a catgirl”. Most of the time this produces a dull photograph of a girl with cat-ears, but this one time the model hit it out of the park)

Wintering again

First it rained heavily yesterday, then the temperature dropped 30°F last night, and then I looked out the front door this morning to find that high winds (40+ MPH) had blown my empty trash bins sixty feet down the street, and had also filled my yard with a mix of trash and recyclables from neighboring houses.

As I went out for what I thought would be a quick pickup, snow started blowing around. I got the bins back up to the house, but had to retreat and gear up before finishing the cleanup, because my fingers were starting to hurt from the bitter icy wind.

So, yeah, staying in for the rest of the day.

(style courtesy of one of the few useful ZIT LoRAs, Cute Future, which really shifts the mood of my holiday cheesecake)

Teh Anime Warz

Lots of back and forth on the xitter in the never-ending battle between “people who like Japanese pop culture” and “people who insist on ‘fixing’ it in translation”. My take:

Localizers believe that they’re better writers in English than the original authors are in Japanese. If this were true, they wouldn’t be working as localizers.

Random study-buddy

(I’m really liking this particular cartoon style; it reliably comes out strong and consistent and cute; style section of prompt is “Drawing in the style of Jon Burgerman, chaotic compositions of cartoon characters, free-form doodling with bold, looping linework, flat graphic lighting, a vibrant, candy-colored palette, playful and energetic atmosphere.”)

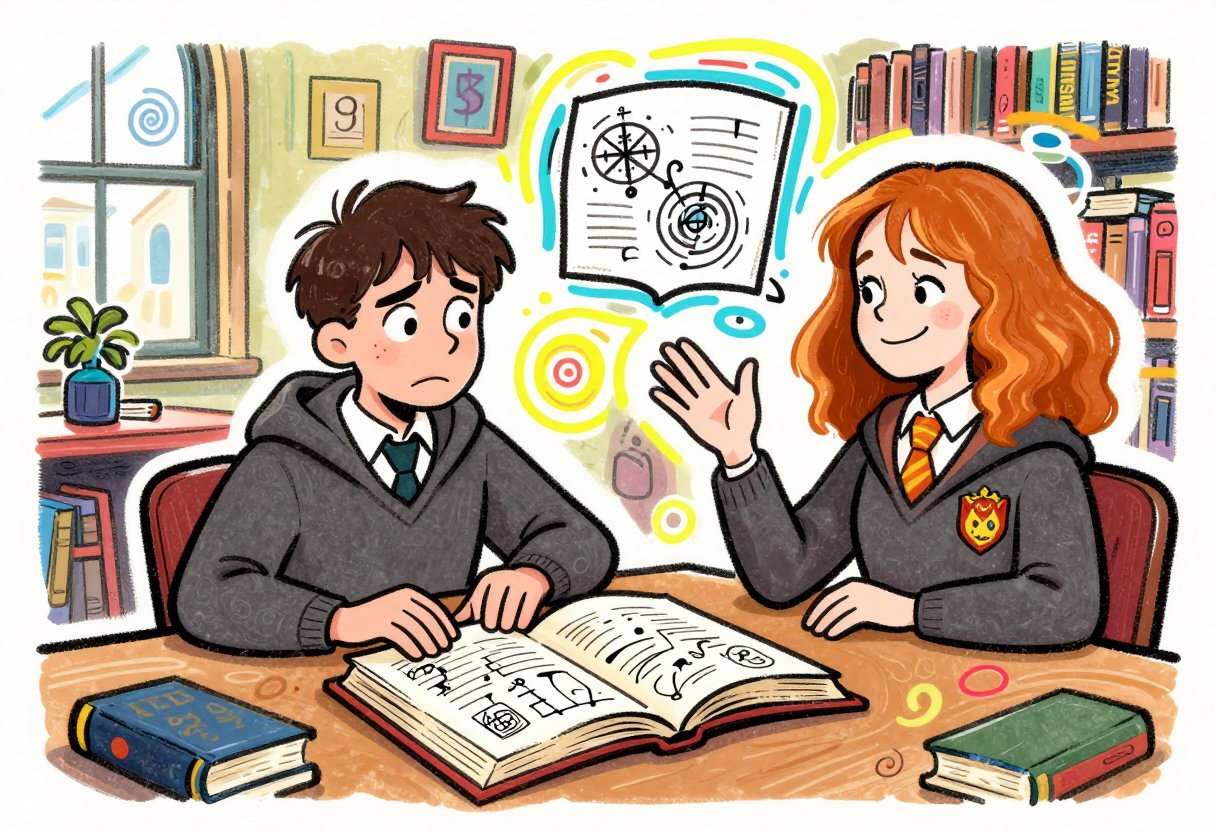

Paradoxes

So, AI is consuming all memory production in the world while simultaneously being unable to remember which commands destroy data. I’m sure it’ll all work out fine.

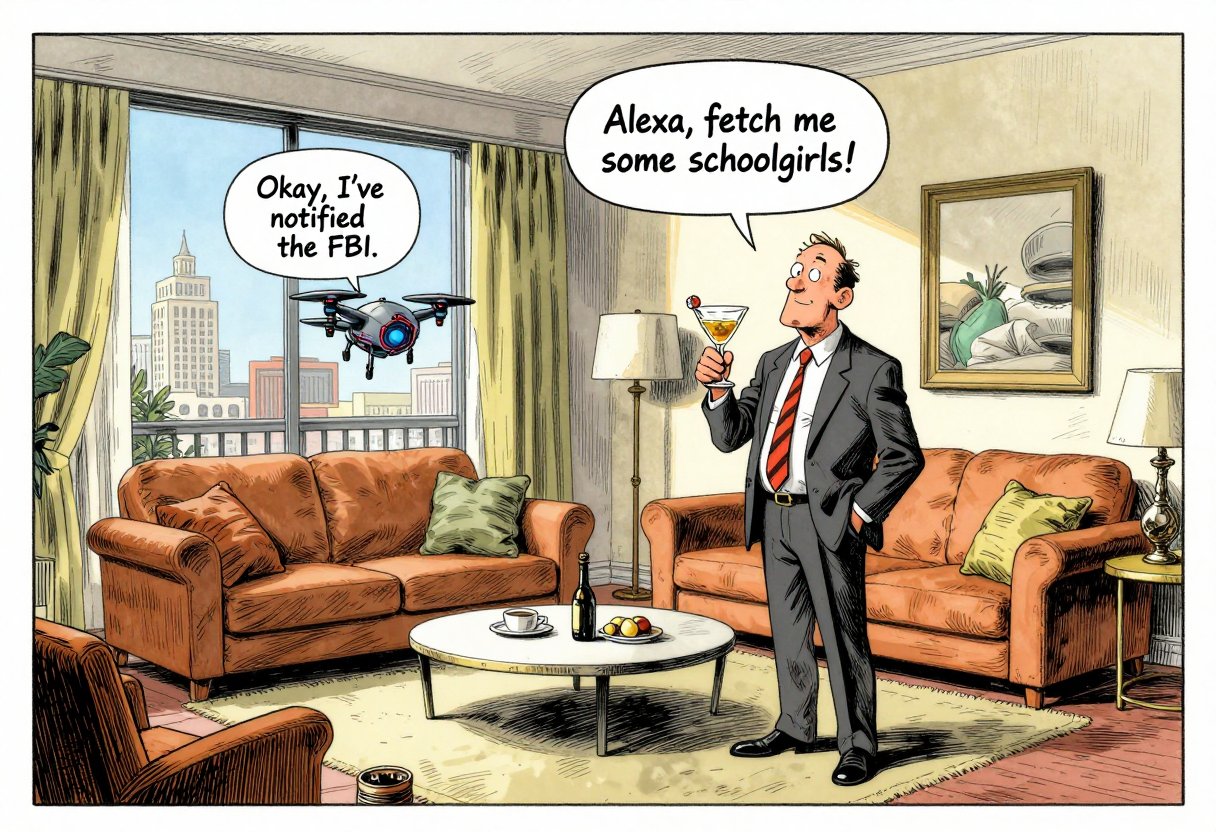

“Alexa, exit Alexa+”

How much do we want AI everywhere? Instead of the original plan to make it an add-on paid service, Amazon is rolling out the new “AI” “enhanced” Echo “experience” to Prime customers by default. To opt out and restore the old dumb “by the way, did you know that I’ll keep talking until you swear at me to shut up?” behavior, use the above command. Which will probably work as reliably as switching away from the “for you” feed on X…

(took about 20 tries to get the speech bubble to come from the drone, sigh; fortunately it only took 4 seconds per try)

“Package delayed in transit”

Oh, Amazon, you and your bullshit.

- Dec 10: marketplace order placed, “may arrive after Christmas”.

- Dec 11: “Package left the shipper facility”

- …

- Dec 26: “Package delayed in transit”

- Actual USPS site, Dec 11-27: “Shipping label created, USPS awaiting item”

- Amazon status: “Estimated to arrive by January 1”

The best part is how Amazon now adds text insisting that the information on the order status page is the same as what’s available to their customer service agents, (implied) “so don’t bother contacting them”.

(fortunately it was just a present for myself…)

Skeleton Knight 2 announced in January

No, not announced for January, just that there will be an announcement for the second season in January.

(I’ve already used almost all of the decent non-porn fan-art from the first season…)

Santa’s School For Naughty Girls

Just helping them get their start…

(ZIT’s having some scaling issues today; must have had too much ice cream and pudding for Christmas (classical reference))

Post-Christmas Clearance Sale

Everything must go!

Glass Bottom Boat

She has my sword…

GenAI Gals after the jump

Unexpected presents...

Amazon Interstellar

Childhood’s End

No, not that one. This one. Of the many pop-culture references from the Seventies that have been converted to LoRAs, this is not one I intend to pursue.

Blackout

My patience is rewarded, as the 15-year-old overpriced Connie Willis novel finally drops below $10 on Kindle.

Merry Christmas from The Cheesecake Faptory

Tear off the wrapping paper and enjoy!

[Update: just realized I made the "-small" versions full-4K as well, which was silly. Page should load a lot faster now...]

Note that my new workflow is built around my SwarmUI CLI, which now correctly preserves metadata even when generating to JPG, so the large images have the full parameters embedded in the EXIF User Comment field, making it possible to drag them back into SwarmUI or just view the prompt and other settings with exiftool:

exiftool -b -usercomment cheesecake.jpg | jq .
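To pull out just the prompt for a whole directory of images, something like the following should work. It’s a sketch that assumes exiftool and jq are installed and that the embedded JSON has a key literally named `prompt` somewhere inside it; rather than hard-coding where SwarmUI nests it, the jq filter takes the first `prompt` key found at any depth. The function names are made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: print the prompt stored in SwarmUI-style JSON metadata.
# Assumption: the JSON contains a key named "prompt" at some depth;
# the filter returns the first one found, wherever it is nested.

# Read JSON on stdin and print the first "prompt" value, if any.
extract_prompt() {
  jq -r 'first(.. | objects | .prompt // empty)'
}

# For each image given on the command line, print "filename: prompt".
list_prompts() {
  local f
  for f in "$@"; do
    printf '%s: %s\n' "$f" "$(exiftool -b -usercomment "$f" | extract_prompt)"
  done
}
```

Usage would be `list_prompts *.jpg`; anything without a `prompt` key just prints an empty value after the filename.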

Lazy meme day

Same vibe

The M in M1911 is for…

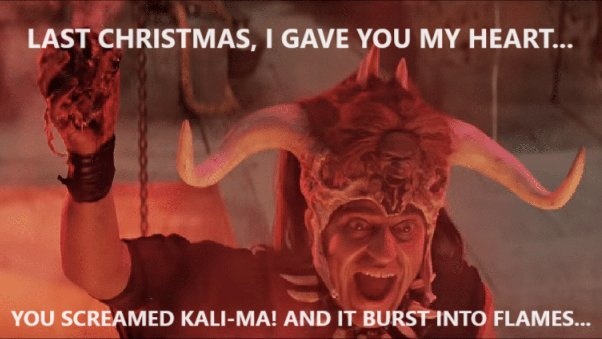

Last Christmas

Peak Japan

Related,

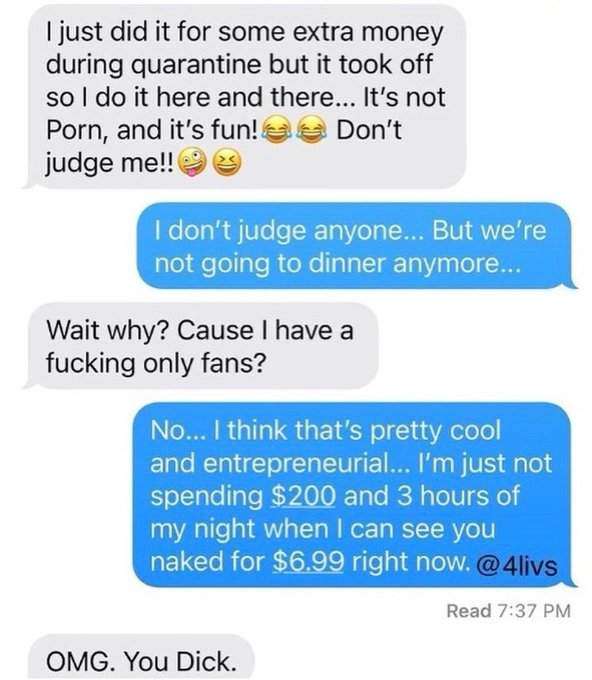

Modern dating

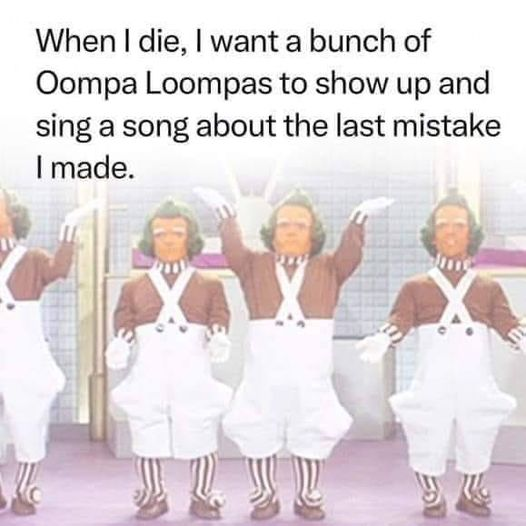

Life goals

Life lessons

“Lucy, you ignorant LUT!”

Fallout Season 2, episode 1

“Let’s set up an entire season of convoluted plotting all at once!”

IMHO, Walton Goggins is the only thing holding this together, and spacing it out so you have to watch one episode per week like our primitive ancestors did does not enhance the experience. I’d prefer to just rip off the bandaid and get it over with, but that won’t be possible until February.

Maybe I’ll watch the rest then.

(I did not use the word “alien” to describe the creature, because that word is strongly associated with a very specific overused image of a pop-culture alien, just like asking for “alien symbols” gets you an Alienware logo; creature, monster, pretty much any other word is a better choice with most models)

(also, this is probably the best-looking pistol I’ve gotten out of ZIT, and it’s even in a decent “holstered” position)

LUT-shaming

Random image is random; I’m experimenting with adding a LUT post-processing pass to my SwarmUI CLI, to fix Z Image Turbo’s slightly flat colors. The output looks fine on its own, but when I mixed some of its cheesecake into the wallpaper rotation, you could really see the difference.

If anything, the pics from Qwen Image were too saturated, but for some of them that was part of the “vintage airbrush pinup” look I was going for with them. My first pass at cleaning up the ZITs was doing a basic auto-level-ish command with ImageMagick:

magick "$file" -contrast-stretch 0.15x0.05% "new/$file"
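Roughly, `-contrast-stretch 0.15x0.05%` is a percentile-based linear remap: it finds the level below which the darkest 0.15% of pixels fall and the level above which the brightest 0.05% fall, maps those to black and white, and stretches everything in between. As a simplified model (ignoring ImageMagick’s per-channel details), with $\ell$ and $h$ those two levels and $v$ a pixel value normalized to $[0,1]$:

$$v' = \min\!\left(1,\ \max\!\left(0,\ \frac{v - \ell}{h - \ell}\right)\right)$$

Clipping that tiny fraction of outliers is what lets it stretch at all; a plain min/max stretch would do almost nothing if even one pixel were already pure black or pure white.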

Worked great, but it would be nice to have it run server-side, before JPG compression, and the recommended method is to apply a LUT. There’s a whole suite of post-processing tools available as a SwarmUI extension, and plenty of free LUTs online (1, 2, 3, etc.). You can also copy .cube files from any professional imaging software you happen to have a license for, such as Photoshop, where they’re usually named by the film/camera look they apply.
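For a sense of what’s in those files: a 3D LUT in .cube format is a short text header (including `LUT_3D_SIZE N`) followed by N³ output RGB triples, one per line, with the red input varying fastest, then green, then blue. Here’s a small sketch (the function name is made up) that writes an identity LUT, which maps every color to itself and so makes a handy do-nothing baseline when checking a post-processing pipeline:

```shell
#!/usr/bin/env bash
# Sketch: write an identity 3D LUT in .cube text format to stdout.
# Layout: header lines, then N^3 "R G B" output triples in [0,1], with the
# red input index varying fastest, then green, then blue.
make_identity_cube() {
  local n="${1:-17}"
  case "$n" in
    ''|*[!0-9]*) echo "size must be a number" >&2; return 1 ;;
  esac
  if [ "$n" -lt 2 ]; then
    echo "size must be at least 2" >&2
    return 1
  fi
  awk -v n="$n" 'BEGIN {
    n += 0
    printf "TITLE \"Identity %d\"\n", n
    printf "LUT_3D_SIZE %d\n", n
    # Identity: each output color equals its input grid coordinate.
    for (b = 0; b < n; b++)
      for (g = 0; g < n; g++)
        for (r = 0; r < n; r++)
          printf "%.6f %.6f %.6f\n", r / (n - 1), g / (n - 1), b / (n - 1)
  }'
}
```

`make_identity_cube 33 > identity.cube` writes a 33-point cube (35,937 data lines). Outside SwarmUI, ffmpeg’s `lut3d` filter can also apply any .cube file, e.g. `ffmpeg -i raw.png -vf lut3d=file=identity.cube out.png`, which should leave the image essentially unchanged.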

Raw from ZIT:

I think the Fuji Sensia LUT pops the colors a bit without going overboard:

(best part: applying a LUT adds basically nothing to rendering time, and generating a comparison grid of every installed LUT at different strengths takes seconds, since the server can reuse the rendered image and just apply each transform in turn)
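The comparison grid is cheap for a simple reason. Assuming the extension’s strength setting is the usual linear blend between the original and the LUT output (an assumption; I haven’t checked its source), each grid cell for LUT $L$ at strength $s$ is computed per pixel from the same rendered image $I$:

$$I_{L,s} = (1 - s)\,I + s\,L(I), \qquad 0 \le s \le 1$$

So $L(I)$ only needs to be computed once per LUT, every strength after that is a weighted average of two images that already exist, and none of it goes back through the image model.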

Another pair of cats dancing…

Okay, they were both pretty cool cats, but not quite what I had in mind.

It's beginning to look a lot like Shipmas

…although with Amazon having forgotten most of what they ever knew about logistics, Shipmas is a bit like Schrödinger’s Cat Box, with things never showing up as trackable until two days after they were promised for delivery, which is always fun to explain to kids.

Fun(?) with Liquid Ass

iOS 26.2 is out, so one could hope that other customers have done the basic release QA for Apple by now. I wanted to check the legibility before deciding whether it’s safe to upgrade my mom’s phone yet, so I installed it on my old California phone, which doesn’t tie into any online services (do not upgrade a device that uses iClown until you’re ready to upgrade everything; they test cross-version compatibility even less).

TL/DR, no, doesn’t look like they’ve finished tuning the UI to restore readability. It’s better than the initial “release” (beta), but still not good for anyone with less-perfect vision than an Apple design team.

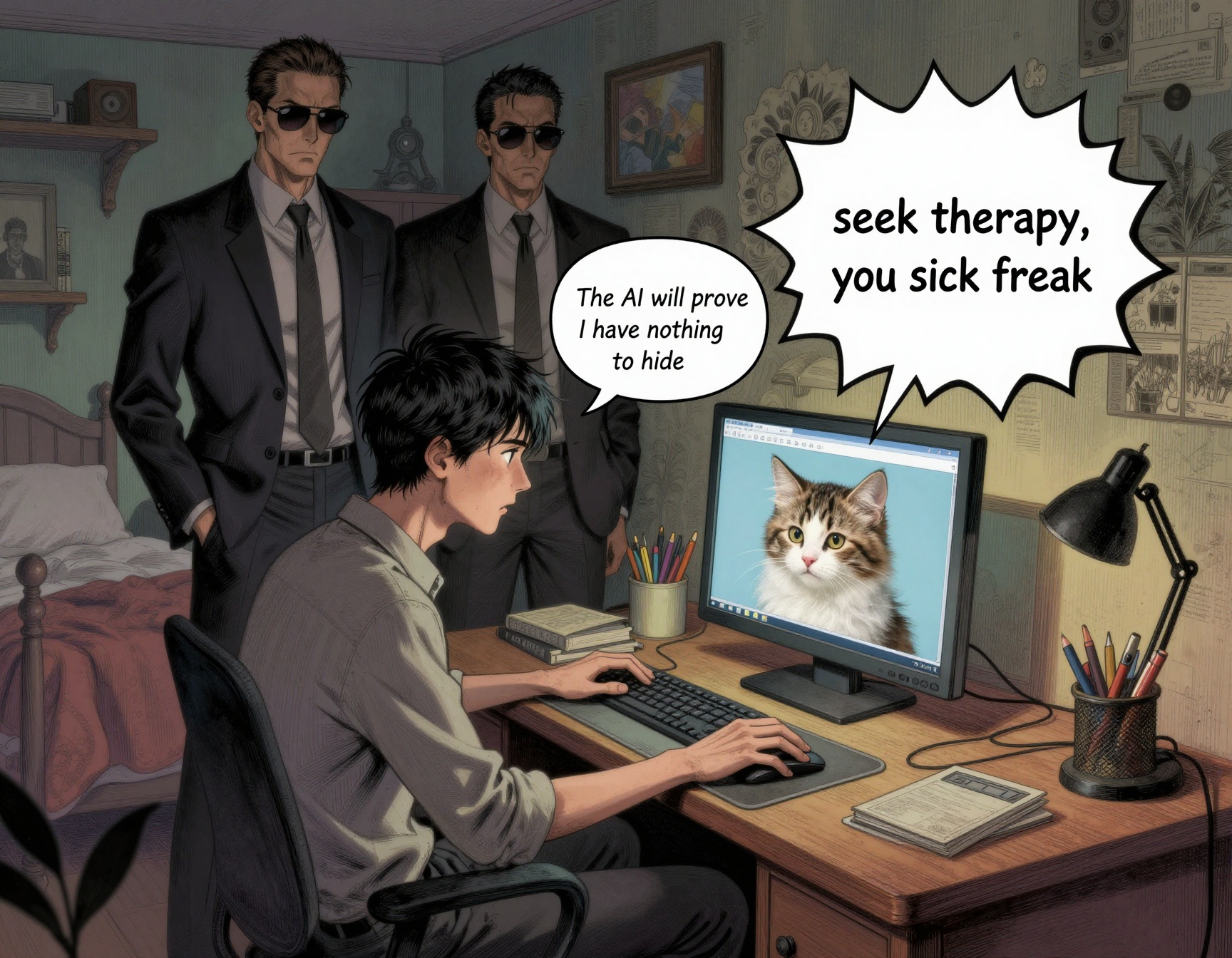

Synology pivots to “AI”

Their latest device is BeeDrive, an “AI-powered Portable SSD”. Not to be confused with their BeeStation “private cloud”, which is not to be confused with their NAS products. As far as I can tell, none of the Bee-thingies has any data protection, although the Station offers a free trial of “storing bits of your private cloud in their public cloud”.

TL/DR, both product lines appear to be external drives that come with backup software, which is a pretty crowded market. On the plus side, they didn’t roll their own, and licensed Acronis True Image (“formerly Acronis Cyber Protect Home Office”, signaling that they’ve (partially) recovered from their childish phase of labeling everything “cyber”).

On the minus side, they’re bundling an offline LLM to do text-scanning, image-scanning, and inference on everything you store, for Deep Search(TM). So when the local authorities decide they don’t like your memes, they can seize your backup drive and quickly find additional double-plus-ungood thoughts to charge you with.

I mention this for the benefit of those living in Australia, the UK, Canada, the EU, and other regimes hostile to free expression of disapproved thoughts.

I also suspect their LLM is going to produce hilarious results when fed a typical Internet user’s hard drive. Most common search result:

(primary difficulty on this one: getting separate speech bubbles with one of them coming from the computer; maybe six tries total)

Definitely not a “1-minute walk”…

A Kyoto “tourism site” describes access to the Sannenzaka shopping district with the words “A 1-minute walk from Keihan Railway’s Gion-Shijo Station”.

Google’s walking instructions suggest they left off the first digit, and it’s not a “1”…

Given how sparse, generic, and monolingual the content of the site is, I suspect it’s AI slop. Which is why I’m not linking to “kyoto-to-do dot com”.

Speaking of slop…

My phone is now getting computer-filtered 50% Medicare/Medicaid fraud and 50% home-improvement fraud. I need to answer the phone for unknown numbers while I’m expecting service providers to show up, so I can’t just let them go to voicemail (and even legit numbers often don’t leave messages any more).

I say “yes” to get to whatever operations center in India is taking the calls today, and then ask them a simple question: “what’s my name?”. If they have even the cheapest possible call list, then they have some name associated with my phone number.

But none of them can answer, and they stop talking immediately and hang up.