Breath Of The GenAI

Another functional style LoRA for Z Image Turbo is BOTW Zelda Style. At full strength it applies too much of the game’s various racial characteristics, but at 80% it mostly applies the visual style without the goofy Hyrulian NPC faces or painful Gerudo figures (impossibly small waists combined with washboard abs is not sexy).

I’m okay with it randomly applying Zelda’s remarkable ass, though…

Sometime soon I should generate fantasy location and costume prompts, and perhaps some bunnygirl-friendly locations for Easter. Some of the Christmas locations look vaguely Hyrule-ish, but mostly not.

Amazon nickel-and-diming for peanuts

Remember that three-week-old order that was never shipped? Yeah, USPS reports that it still hasn’t shipped, and while Amazon’s fully-automated and AI-degraded customer support “chat” system believes the data that’s in the system, it was finally willing to process a refund Friday… over the next 24 hours or so. So they’ve had my money for most of a month, which if you multiply it by the number of unhappy customers who were fucked over for the holidays, adds up to a tidy sum.

Perhaps it’s just more profitable than “shipping goods to customers”.

(and, yes, literal peanuts were involved; from the other recent negative reviews of the marketplace dealer, it looks like “Virginia Diner” took December off)

(as a special bonus, I had to manually search for it on the order page, because the pretense that it had “shipped” (which is an obvious misinterpretation of the USPS status code) kept it from appearing in the convenient “Not Yet Shipped” tab, and absent any action on my part, it would eventually just end up at the end of my order history…)

Chinese fabrication

Speaking of Amazon, I bought an espresso tamping station, and it arrived Friday. Take a good look at the pictures, and you’ll see that there are no photographs of the product. They’re all 3D renders with composited accessories. The wood frame was fine, but even with rubber feet on the bottom it didn’t sit quite level, and more importantly, the black acrylic insert was CNC’d ever-so-slightly oversized for the CNC’d wood.

So the worker simply slathered the bottom with glue and pounded it into place, which lasted long enough to get it into the box and ship it. Looking at the reviews, I’m not the only one who discovered this quality workmanship when the glue failed and the center of the insert bent up. I sanded down the edges until it fit, then used gap-filling superglue and a bunch of woodworking clamps to reattach it.

So, good design (swiped from another company), shoddy assembly, and zero QA. I’d have bought a better one for twice the price, but this was the only one that would arrive by this weekend…

(it was surprisingly difficult to get ZIT to grasp the concept of “pounding a square peg into a round hole”; if it made the peg at all, it was almost always undersized, and at least half the time the hammer was held upright, pounding with the base of the grip)

Random test image

As I’ve added functionality to my SwarmUI cli, it’s gotten kind of crufty. In particular, I had two different methods of creating images in JPG format: server-side during generation, and client-side batch conversion. The problem with the first one is that I also added client-side cropping and unsharp-masking, which added more compression artifacts.

So I gathered up the code for cropping, resizing, sharpening, and format conversion, abstracted them into a “process” class that applied them in a well-defined order, and set the server to always generate PNG. Took a while to get everything working, but it makes it possible to clean up the code and make all the processing options available to multiple sub-commands.

(I needed a quick regression-test image to confirm that everything worked, so I used the very simple prompt “a catgirl”. Most of the time this produces a dull photograph of a girl with cat-ears, but this one time the model hit it out of the park)

The quest for good stuff...

New Year, old song.

My New Goddess Is A Shoulder Chibi?

I might, might have to try to watch the 58-minute-long premiere of Hero Sentence, after this screenshot from Tenka Seiha’s otherwise tepid review.

Because shoulder chibi.

There are three downsides. First, the plot summary sounds like complete nonsense. Second, the price of the super-long premiere was delaying the next episode until the 15th. Third, the trailer promises that it’s utter crap.

’Nuff said

Related,

Unrelated,

I came out on the SwarmUI Discord as a despiser of “modern UI preferences”. I just couldn’t take the praise for low-contrast text and the holiness of dark mode:

(amusingly, the creator of the app was sure he’d really-truly forced dark mode for the Windows console to ensure the color text (retch) worked, and was quite surprised that I was seeing black-on-white as The Great Old Ones intended)

Side note: by default, to ZIT, “monk” means “Buddhist monk”, even with additional context that should have steered the style to European/Christian; kind of like how it thinks “dumplings” means “gyoza”.

Stripping down the artist list

Now that I’m done making holiday pinup wallpaper, I started the year with the SF dynamic prompts. Although perhaps in retrospect I should have done something special-force-y.

Anyway, I made a few hundred and ran a quick deathmatch where I rejected anything that I didn’t want to stare at as wallpaper, then removed any artist who showed up on the reject list more than 4 times. This knocked about 35 names off the list, then I did it again.
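The pruning pass is simple enough to sketch in a few lines of Python. This is only an illustration, assuming each rejected image is recorded with the artist name the dynamic prompt picked for it; `prune_artists` and the sample data are hypothetical, and only the more-than-4 threshold comes from the description above.

```python
from collections import Counter

def prune_artists(artists, rejected_artists, max_rejects=4):
    """Drop any artist who appears on the reject list more than max_rejects times."""
    counts = Counter(rejected_artists)
    return [a for a in artists if counts[a] <= max_rejects]

# Hypothetical data: one entry per rejected image, naming its artist.
artists = ["Artist A", "Artist B", "Artist C"]
rejected = ["Artist A"] * 5 + ["Artist B"] * 4 + ["Artist C"]

survivors = prune_artists(artists, rejected)
print(survivors)  # Artist A had 5 rejects and is dropped; exactly 4 is still allowed
```

Running the same function again on the survivors with a fresh reject list corresponds to the second round.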

Along the way, I flagged a number of images that caught my attention for various reasons. Some work as pinup wallpaper, some… don’t.

(prompts and parameters in EXIF UserComment, although it might whine about “unknown encoding”; I need to switch the new JPG operation in the pipeline to call exiftool, because I forgot that I’d already tried Pillow’s native EXIF handling before and it sucks)

Still 10 days to go...

Frieren season 2 is not the latest premiere of the season, quite, but it’s still gonna be a while. Hopefully the next story arc won’t be so focused on side characters I don’t really care about, although judging from the fan-art, there are plenty of people who really got hung up on Übel and Aura. I didn’t really see the attraction, personally.

“How many bunnygirls does it take to change a lightbulb?”

“As many as I can get my hands on!” – Hef

Today I Learned…

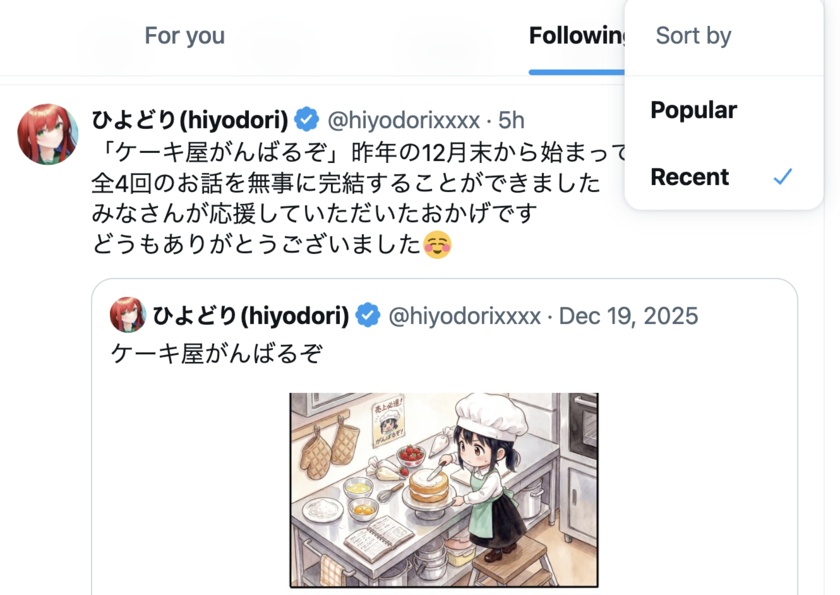

…that even the “following” tab on X doesn’t default to showing you what you want to see. Was there always a pulldown to switch from the useless default “popular” to “recent” and I never noticed it, or is this new?

ZIT A/B tests

Random pointless product alert

Oura, maker of smart-rings that have 7-day battery life, has announced a new portable charging case capable of 5 full recharges. I’m not sure how large the off-grid-for-more-than-a-month market is for a fitness ring.

(picture is probably unrelated…)

Anyway,

There have been several attempts to add detail to Z Image Turbo’s barbie-doll nudity, both as LoRAs and as full model checkpoints. Most of them have not only failed to deliver on the promised parts, but their training data wrecks the faces, and often the general functionality.

My initial tests with Z Image Turbo By Stable Yogi suggested that if you weren’t asking for nudity, the output was usually nearly identical to the original model, and it played well with existing LoRAs trained against it.

There were some interesting differences. Regenerating my square-peg pic changed the scene significantly, and some other regens had easily-visible changes, but I wanted a controlled test with no LoRAs. I used my standard batch vertical wallpaper settings and fed 20 random prompts to the original model, then fed the same parameters back through the script, changing only the model name.

Even with identical seeds, the sampler I’m using introduces a tiny bit of randomness, so I expected minor changes that wouldn’t invalidate the test. (note: next time, use standard Euler instead of Ancestral to eliminate that)

TL/DR: I can’t pick a clear winner based on this sample. Sometimes one was clearly better, sometimes the other, sometimes I liked both. And of course, some of the pics just didn’t work out.

Click to embiggen…

1

A: better clothing texture and long hair. (why shamrock earrings and shoes? literal interpretation of color-word “clover-green” in prompt)

Life lessons

Yup, you sure showed those bosses who’s boss!

They are. Gaming studio learns that unionization does not in fact protect your job.

Rules of the road

Don’t park sideways to block a public street, with the deliberate intention of interfering with law enforcement.

This wasn’t covered in my Driver’s Ed course, but back in the stone age, the stupidity of blocking traffic and fucking with cops were both generally understood.

(and, no, do not hit the gas and attempt to flee when they come to arrest you for your crimes; success just adds more charges)

FYI…

If you check out the episode teasers for Fallout 2, you’ll see that episode 3 apparently gives a lot of screen time to an officer of the Legion. Who looks a bit odd, and doesn’t really seem to fit the role. And yet, he was vaguely familiar.

Looking him up, I guess I haven’t seen Macaulay Culkin in anything in over 30 years. I can kinda see the resemblance, but it takes a while.

Also, the clip reminds me that Lucy is an annoying character begging to be shot by everyone she meets.

Doggerel for nothin’…

(with no apologies to anyone…)

🎶 🎶 🎶 🎶

I’m makin’ 1girl for Christmas,

Mommy & Daddy don’t know,

I just encrypted my hard drive,

So now let’s get on with the show!I found a LoRA for huge tits,

Christmas 1girl for me.

Another that makes naughty bits,

Christmas 1girl for me.A wildcard set called Kinky Pose,

Checkpoint trained on skanky hoes,

Piercings, tattoos, loligoth clothes,

Christmas 1girl for me!Oh… I made some 1girl for Christmas,

Showing both front and behind,

I deepfaked all of my classmates,

I’m sure that the teachers won’t mind!The sitter found out what I’d done,

3D 1girl for me!

It turned her on and we had fun,

3D 1girl for me!I tried out things I’d learned online,

kept it up ’til half past nine,

Dad joined in and said it was fine,

3D 1girl for me!

The pics were generated by feeding my lyrics in as a prompt.

Very first result…

No pony girls with glasses

Settling the debate

Japanese plushie manufacturer recalls 4-eared pony girls. Fans complained that one of the gals had human ears in addition to her horse ears.

How bad is Fallout season 2?

I tried to watch some more of it; every scene without post-apocalypse Walton Goggins sucked, hard, including his pre-war scenes. I gave up and ordered a DVD copy of The Adventures Of Ford Fairlane, because it’s not streaming anywhere. While I wait for it to arrive, I’m seriously considering buying the first season of The Courtship Of Eddie’s Father on Amazon Video. Just so you know where my head’s at.

Apparently the Fallout team decided that the cast of the first season just wasn’t large enough, and there weren’t enough side-plots. Season 1 had three main PoV characters, which was two too many, and now there are, what, 9? 12? 20? Fuck that.

(one PoV is enough for everybody…)

Unrelated,

Diablo IV’s new season exists to pre-sell the next DLC by giving early access to the insanely-overpowered new paladin class. Seriously, without even trying I ended up with a character who can sleepwalk through all Torment 4 content hitting one button every 60 seconds.

Operators are not standing by

The Adventures Of Ford Fairlane, revisited

This is not a good movie. It is, however, a fun movie, from a genre lost in time. The “Diceman” comedy-club physical tics don’t translate well to screen close-ups, but otherwise it doesn’t take the character too seriously, and ends with him outgrowing it, much like Clay’s earlier film Casual Sex? (which Lea Thompson reportedly hated because they changed the ending to have “The Vin-man” redeem himself in her character’s eyes, and being a Good Feminist™, she wanted no part of that).

Clay has said that he thinks the film failed because of the orchestrated anti-Dice campaign that derailed his career. Yeah, I disbelieve; it wasn’t raunchy or edgy or quotable enough to satisfy his fans, and ended up just cheesy, with no rewatch value. And it debuted three weeks after Robocop 2, one week after Die Hard 2, and the same week as Ghost.

Actually, forget every other 1990 summer movie. Ghost was a monster hit that was still in more theaters in December than FF opened its three-week July run in. There was never a universe where FF was going to be a big hit, but it might have limped along for a while in a year without major competition for screens.

Anyway, have a dark-haired cigarette-smoking Italian-American in a studded leather jacket, sitting on a red convertible giving attitude:

(closest I got to fuzzy dice hanging from the mirror; ZIT clearly doesn’t know what they are, and it was 50/50 what side of the car the steering wheel ended up on)

Grokking the negative

Pretty sure the AI-image bots flooding X in an effort to steal clicks from the bikini-photo bots have no idea what the letters “SFW” stand for, and are just applying the label randomly.

The last time I was in a work environment where the first image could have been displayed without complaint was in the Eighties, and it was a summer job that involved moving heavy objects. And even then, only because no women went back into the break room to hang out with the janitors.

Seriously, the only images I see tagged “SFW” on X are the ones that aren’t.

Amazon ‘product support’

Wow, that was a tedious waste of my time. Ordered an item that required assembly and installation, found no instructions in the box or linked to the product page. Amazon insists that they handle product support, and sends me to a page that asks about the quality of the delivery. Twenty minutes of automated menus and chatbots, and the only thing I can do is return it for a refund as “defective”.

Lack of interest

Frieren 2 starts on ~~Thursday~~ Friday. Next Japan trip booked, with a very healthy yen-to-the-dollar rate. Honestly, it’s reaching the point where it’s cheaper to fly there and fill your suitcases than it is to pay the duty on overseas purchases. Speaking of finance…

“I’m so old…”

“…I remember when a 10% interest rate was high for a credit card, and it meant you had a poor credit rating.”

Activist mindset, unpacked

There’s yet another video of the aftermath of the Good shooting in Minnesota, in which it is alleged you can hear her wife shouting “why did you have real bullets?!?”. I won’t link it, because you cannot clearly see who’s speaking, but it’s certainly plausible, because only an idiot activist completely divorced from reality would ask this question, and she established those credentials in the earlier videos where it was clear she enjoyed confronting the officer documenting the scene.

Right up until she realized they were about to experience a felony arrest for deliberately interfering with law enforcement, tried and failed to get into the car, and yelled, “drive, baby, drive!”.

(and for those who haven’t been keeping up, the released video evidence clearly shows Good blocking traffic on a narrow one-way street for several minutes, having a good time as she dances around in the driver’s seat banging on her horn like a drum, then both she and her wife cheerfully taunting the officer; it also clearly shows her smiling as she shifts into gear and accelerates)

Split-random prompting

I want to inject more background variety into my pinup wallpaper, and the most common method is to pass your prompt through an LLM and give it instructions to enhance.

This has not produced satisfactory results, because every LLM I’ve tried has ended up removing significant details from the prompt. Things like having the women be young and pretty with lively expressions, which kinda defeats the purpose.

My latest test is to generate two separate dynamic prompts, one for the background and one for the subject, pass just the background output through the LLM, then merge them back together. The LLM model is an uncensored variant of Gemma3-12B, recommended by someone on the SwarmUI Discord. I’d been using an older variant of the same model, and this one seems to produce cleaner results. I need to tweak the system prompt, though, because it’s adding men to the picture a bit too often. Sometimes that works, but only if she’s still the focus.

With apologies to Gilbert & Sullivan...

…or not:

I am an AI model trained on copyright material,

I cannot tell the difference between facts and sites satirical,

I quote from Wikipedia because I’m told it’s factual,

Despite its inner circle showing shocking bias actual.

I am not well acquainted with the world or items physical,

I understand just tokens, and the next one that’s predictable,

Not only do my well-referenced facts prove quite chimerical,

My most persuasive arguments turn out to be pure fictional.

(chorus)

“his most persuasive arguments turn out to be pure fictional”

“his most persuasive arguments turn out to be pure fictional”

“his most persuasive arguments turn out to be pure fictional”

I’m very good at plausible and grammatical sentencing,

I misquote sources legal, scientific, and even cooking,

In short, I should be treated as an idiot congenial,

I’m just an AI model trained on copyright material.

(chorus)

“In short he should be treated as an idiot congenial,

he’s just an AI model trained on copyright material.”

More fun with prompt-splitting

I’ve cleaned up my LLM prompt-enhancement script and added it to the repo. The latest improvement to my partially-enhanced prompting was to add markers to the input so that the LLM would only see the part of the dynamic prompt that I wanted to enhance:

${a=!__artstyle__} ${a} __pinupgal__ @< ${a} __background__ >@

The “!” forces the variable to be evaluated immediately, so that the same value is used in both places. I’m including the style string in what’s passed to the LLM so that it doesn’t end up contradicting the style used for the gal. With modern models that prefer detailed prompts, the potential repetition doesn’t hurt, and might even help.
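A minimal sketch of the marker handling, assuming the dynamic prompt has already been expanded into plain text before the markers are processed. The names `MARKER`, `enhance_marked`, and `fake_llm` are made up for illustration, and the real LLM call is replaced with a stand-in; the actual script in the repo may work differently.

```python
import re

# Matches the @< ... >@ markers (non-greedy, and allowed to span lines).
MARKER = re.compile(r"@<(.*?)>@", re.DOTALL)

def enhance_marked(prompt, enhance):
    """Replace each @<...>@ span with enhance(span); leave everything else untouched."""
    return MARKER.sub(lambda m: enhance(m.group(1).strip()), prompt)

def fake_llm(text):
    # Stand-in for the real LLM request, which is not shown in the post.
    return f"[enhanced: {text}]"

# Hypothetical expanded prompt: the style string appears in both halves.
prompt = "oil painting, a pinup gal @< oil painting, a beach at sunset >@"
print(enhance_marked(prompt, fake_llm))
```

Only the text between the markers ever reaches the enhancer, so the description of the gal cannot be rewritten or dropped.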

This is significantly improving the hit rate when I deathmatch the results. I’m still tinkering with the system prompts and the LLM settings, because not only is there a tendency to add random captions to the picture, sometimes it gets much too creative.

Getting started…

Frieren 2, episode 1

“We know you watched season 1, and you came back for the story, so we’re not going to waste your time and ours on a lengthy recap.”

Verdict: seasonal oasis.

(“I was just polishing my staff, when it suddenly went off”)

Official Fallout TV merchandise

Includes sofa throw pillows. For throwing at the screen because season 2 is sofa-king awful.

Compatible ZIT LoRA

Misty - Tomboyish Mermaid and PencilMadness, which I’ve used before.

Hmmm, let’s push it a bit, shall we?

True

Hmmmm, let me think about it for a minute…

Caption Contest…

There have been several iterations of the new partially-LLM-enhanced prompts, and not all of the results have been completely successful. In fact, some of them cry out for snarky captioning. Feel free to join in.

To avoid ambiguity, I’ve added numbered headers.

1

Cold-ish

Tonight’s low is tomorrow’s high, and then it’s gonna get cold.

Glad I went cheap…

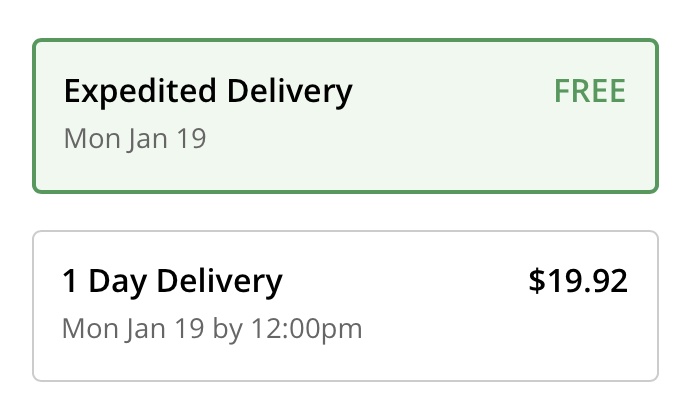

Friday, I was faced with this choice:

I did not pay $20 to get it a few hours earlier on Monday. As a result, I got it on Sunday. Signature required, and FedEx promised to have it in my hands by 1:15 PM, but then it stopped here:

See that little parking loop on the left? The truck with my package stopped there for about an hour, starting about 20 minutes before the end of the promised delivery window. And then the tracking page updated with “by end of day”. Eventually it reappeared on a truck a mile or so away, but without a new delivery window. It did show up, so I’m happy, but there’s something curious about the location.

Obviously there are no customers at the water park when it’s 20°F outside, but since it’s been undergoing major renovation all year, I don’t think there were employees there on a Sunday afternoon, either. But that’s not what’s curious. The curious part is that the last time I had a FedEx package get delayed for half a day, the tracking stopped at the exact same location.

“You keep using that word…”

(story is not new, but recently linked by Insty)

Upon hearing that AI models are accurately detecting patient race from X-ray images, regardless of what body part is featured, a professor of “biological anthropology” sought out for an opinion by The Boston Globe says:

“it is likely based on where a person’s ancestors evolved, rather than based on race”

Of course, he has a book called “Racism Not Race”, the blurb for which can be summed up as “I’m here to reinforce my woke bona fides”.

Garlicloaf

Thursday was a long day that involved picking up my parents (20 minutes), driving them to Columbus (90 minutes each way), lunch (60 minutes), killing time at a nearby store (30 minutes), waiting around until their medical appointment was over (~70 minutes hanging out in Barnes & Noble, which is a long time in a bookstore with basically no interesting books; I bought a pen), driving them home (90 minutes), grabbing a quick takeout dinner (15 minutes), and driving home in the dark (20 minutes). All after a half-day of work.

Dinner was from a gourmet grocery that makes their food in-house, and generally does a pretty good job. Today I Learned, however, that their idea of “meatloaf” is underseasoned meat mixed approximately 50/50 with slivers of garlic. They had a sugary glaze to cover it with, which I’d declined, expecting some actual flavor.

No. All I could taste was slightly-crunchy undercooked garlic. I ended up pitching most of it, because it was just terrible. Fortunately I had a frozen pizza handy.

“🎶 Memories, all alone in a GenAI…”

Perhaps the greatest flaw in Z Image Turbo is that it has no idea what a “catgirl” is. Even LLM prompt enhancement usually doesn’t help…

Fun with LLM-enhanced prompting

“I ain’t never seen whores like these before, fellas, but I’m willin’ to give ’em a go. Y’with me?”

Original prompt:

A large collection of steampunk sex dolls, arranged in a victorian-era brothel. A group of dusty cowboys are staring at the dolls, looking confused.

LLM output:

Steampunk-inspired design with intricate brass and gears; large mechanical sex dolls arranged in a Victorian brothel setting, emphasizing detailed craftsmanship—padded waistbands, visible internal mechanisms, delicate filigree. A group of dusty cowboys stand behind, dressed in frayed leather hats and weathered boots, their faces partially obscured by wide brims, staring at the collection with curious confusion. The scene is captured in a dimly lit, atmospheric interior with flickering gas lamps casting long shadows across polished brass fixtures and antique mirrors. The composition balances social commentary with fantasy elements—dusty silks, oil-stained hands, and a sense of time-worn mystery. Rendered in rich color palette with high contrast highlights on metal surfaces and soft warm tones for skin tones, achieving an immersive, tactile quality.

It's GenAIs all the way down

Sunday’s weather forecasts had 8-10 inches of snow coming on Saturday, and another 6-7 inches on Sunday. Monday, that changed to 1 inch and 3-4 inches, respectively. Today, it’s 1-2 and 4-5. Who knows what tomorrow will bring?

This matters to me only because it affects the amount of work I have to do to clear the driveway and get my sister to the airport on Monday morning. Otherwise I’d be content to make a path just wide enough to take the trash down Sunday night.

File-ing under peculiar…

I fired up s3cmd to refresh my offline backup of the S3 buckets I store blog pictures in, and it refused to copy them, blowing chunks with an unusual error message. Turns out that the Mac mount of the NAS folder had obscure permissions errors for one sub-directory. On the NAS side, everything is owned by root, but the SMB protocol enforces the share permissions, so everything appears to be owned by me, including the affected sub-dir. Deep down, though, the Mac knew that I shouldn’t be allowed to copy files into that directory as me. Worked fine as root, though.

And, no, I did not give an AI permission to explore my files and run commands to debug the problem. That way madness lies. 😁

Building a better 1girl

One of the most prolific and enthusiastic members of the SwarmUI Discord (who has insanely good hardware for generating images and videos; the spare card he’s using just for text-generation is better than my only one) has done a lot of tinkering with LLM-enhanced prompting, adding features to the popular (with people who aren’t me) MagicPrompt extension.

(why don’t I like it? the UI is clunky as hell, it doesn’t work well with the text-generation app I run on the Mac Mini, LM Studio, and it really, really wants you to run Ollama for local LLMs, which is even clunkier; I’ve tried and deleted both of them multiple times)

Anyway, he’s shared his system prompts and recommended specific LLMs, and one of the things he’s been tinkering with is using different enhancements for each section of his dynamic prompts. So, one might be specifically instructed to create short random portrait instructions, while another generates elaborate cinematic backgrounds, and yet another describes action and movement in a video. Basically, it keeps the LLM output more grounded by not asking it to do everything in one shot.

I felt like tinkering, too, so I updated my prompt-enhancer to support multiple requests in a single prompt, with optional custom system prompts pulled from ~/.pyprompt.

Initial results were promising:

I saved the prompt as its own wildcard (note that using “:” to mark the LLM prompt preset in the @<...>@ block was a poor choice for putting into a YAML file, since it can get interpreted as a field name unless you quote everything…) and kicked off a batch before bedtime:

__var/digitalart__ A __var/prettygal__ with __skin/normal__
and __hair/${h:normal}__, and her mood is
{2::__mood/${m:old_happy}__. __pose/${p:sexy}__|__mood/lively__}.
She is wearing @<fashion: sexy retro-futuristic science fiction
pilot uniform for women; must include a futuristic pistol >@
She is located __pos__ of the image.
@<cinematic: __place/${l:future}__. __var/scene__. >@

(someday I’ll clean up and release the wildcard sets…)
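A rough sketch of how multiple `@<preset: text>@` blocks could be routed to different system prompts. Everything here is an assumption for illustration: the regex, the `SYSTEM_PROMPTS` table (the real presets live in ~/.pyprompt, whose format isn’t shown), and the `call_llm` callback standing in for the actual request.

```python
import re

# @<preset: text>@ ; the "preset:" prefix is optional.
BLOCK = re.compile(r"@<\s*(?:(\w+):)?(.*?)>@", re.DOTALL)

# Hypothetical presets; the real ones are loaded from ~/.pyprompt.
SYSTEM_PROMPTS = {
    "default": "Enhance this image prompt.",
    "fashion": "You are a fashion designer. Describe the outfit.",
    "cinematic": "You are a cinematographer. Describe the location.",
}

def parse_block(match):
    """Return (preset, text) for one match, collapsing whitespace in the text."""
    preset = match.group(1) or "default"
    text = " ".join(match.group(2).split())
    return preset, text

def find_requests(prompt):
    """List every marked block as (preset, text), in order of appearance."""
    return [parse_block(m) for m in BLOCK.finditer(prompt)]

def enhance_all(prompt, call_llm):
    """Replace each block with call_llm(system_prompt, text)."""
    def replace(match):
        preset, text = parse_block(match)
        return call_llm(SYSTEM_PROMPTS[preset], text)
    return BLOCK.sub(replace, prompt)

sample = "She is wearing @<fashion: pilot uniform >@ @<cinematic: a hangar. >@"
print(find_requests(sample))
```

One consequence of this syntax is that an unlabeled block whose first word is followed by a colon gets mistaken for a preset name, which is the same ambiguity that bites in YAML.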

I got a lot of results that easily cleared the bar of “decent wallpaper to look at for 15 seconds”, after weeding out some anatomy fails, goofy facial expressions, and Extremely Peculiar ZIT Guns.

It's not vibe coding, it's Microsoft Vibe™!

Today is not a good day to be a MS Office 365 email customer. Or one of their partners…

Twelve Months

The new Dresden Files novel arrived Monday afternoon. I’m not sure what happened after that; the rest of the day is a blur. Harry spends a lot of time recovering from the aftermath of the Big Event(s), which may be more emotional and introspective than some fans are really interested in. He does get better. Eventually.

Good stuff, recommended for people still keeping up with this series.

(Fern is definitely more photogenic than Harry Dresden…)

“Guardians Of The Gal-axis”

The targeted LLM enhancements are doing a good job of improving the variety in outfits and backgrounds, so can I do something about ZIT’s horrible guns?

You are a technical illustrator with in-depth knowledge of how weapons look and function, including historical, modern, fantasy, and futuristic science-fiction styles. Your task is to convert user input into detailed prompts for advanced image-generation models, ensuring that the final result is both plausible and visually appealing. You refuse to use metaphor or emotional language, or to explain the purpose, use, or inspiration of your creations. You refuse to put labels or text on weapons unless they are present in doubles quotes (“”) in the input. Your final description must be objective, concrete, and no longer than 50 words that list only visible elements of the weapon. Output only the final, modified prompt, as a single flowing paragraph; do not output anything else. Answer only in English.

(yes, many models randomly slip into Chinese unless you remind them; I had one sci-fi gun description that randomly included “握把表面具有纳米涂层防滑纹理”, which apparently translates to “the grip surface has a nano-coated anti-slip texture”; that sounds perfectly reasonable, although it’s not something you can really expect an image-generator to render)

I may need a separate “expert” for sensible gun-handling poses. Also, some models are waaay too focused on the AR-15 as the universal “gun”, so I’m going to need to add some more focus to the prompt.

Also, can I do a simple cleanup pass at the end?

Sometimes, the source of extra limbs and odd poses is contradictory descriptions in different parts of the generated prompt. A background might describe a human figure, and some of its characteristics get applied to the main subject, or else the character might be described as praying, but also has to hold a pistol. So I’m trying this:

You are a Prompt Quality Assurance Engineer. Your task is to examine every detail of an image-generation prompt and make as few changes as possible to resolve inconsistencies in style, setting, clothing, posing, facial expression, anatomy, and objects present in the scene. Ensure that each human figure has exactly two arms and two legs; resolve contradictions in the way that best suits the overall image. Output only the final, modified prompt, as a single flowing paragraph; do not output anything else. Answer only in English.

A visual diff of some samples suggests that it does a good job. Some models try to make more changes, but the ones I’ve been using most actually produce something recognizably diffable. I doubt there’s a prompt-based solution to perspective problems, though; ZIT is good at making multiple figures interact, but terrible at ensuring they’re drawn at the same scale.
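For anyone who wants to eyeball the changes themselves, a word-level diff is easy to sketch with Python’s standard `difflib`. This is not necessarily the tool used above; `word_diff` and the bracket notation are just one hypothetical way to show what a cleanup pass changed.

```python
import difflib

def word_diff(before, after):
    """Mark words removed from `before` as [-x-] and words added in `after` as {+x+}."""
    a, b = before.split(), after.split()
    out = []
    for op, i1, i2, j1, j2 in difflib.SequenceMatcher(None, a, b).get_opcodes():
        if op == "equal":
            out.extend(a[i1:i2])
        if op in ("delete", "replace"):
            out.append("[-" + " ".join(a[i1:i2]) + "-]")
        if op in ("insert", "replace"):
            out.append("{+" + " ".join(b[j1:j2]) + "+}")
    return " ".join(out)

# Hypothetical before/after pair with a contradictory pose resolved.
before = "She is praying and holding a pistol"
after = "She is standing and holding a pistol"
print(word_diff(before, after))
# She is [-praying-] {+standing+} and holding a pistol
```

A model that rewrites the whole prompt shows up as one giant replace, which makes the "recognizably diffable" ones easy to spot.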

The big downside of all this LLM nonsense is that I don’t have a second graphics card to run it on, and even a high-end Mac Mini is slooooooooow at running text models (don’t even bother trying image models). Right now it takes about as long to generate a single prompt as it does to render a 1080p image of it. And every once in a while local LLMs degenerate into infinite loops (the paid ones do it, too, but it usually gets caught by the layers of code they wrap them in to enforce bias and censor naughtiness), which kinda sucks when you kick off a large batch before bedtime.

At least flushing the output of the different scripts after every line minimizes the delays caused by the LLM, so it doesn’t feel slow. I might still set up to generate big batches on the graphics card and auto-unload the model before kicking off the image generation; both the LM Studio and SwarmUI APIs have calls for that, so I can update the scripts.
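The flushing trick looks roughly like this in Python, assuming each stage of the pipeline emits one finished prompt per line. The `relay` function is a hypothetical stand-in for whatever each script actually does with its output.

```python
import io
import sys

def relay(lines, out=sys.stdout):
    """Write each line as soon as it is ready instead of waiting for a full buffer."""
    count = 0
    for line in lines:
        out.write(line.rstrip("\n") + "\n")
        out.flush()  # push it down the pipe now so the next stage can start working
        count += 1
    return count

# Demo with an in-memory stream; in a real pipeline `out` would be sys.stdout.
demo = io.StringIO()
relay(["first prompt\n", "second prompt\n"], demo)
print(demo.getvalue(), end="")
```

Without the explicit flush, output written to a pipe is typically block-buffered, so the image generator would sit idle until the LLM stage had produced several prompts.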

Frieren 2, episode 2

This week, a study in heroism. More shows like this, please.

(sadly, Crunchyroll has decided to add a mandatory post-credits recommendation page to the app (FireTV version, at least), and there’s no button to get out of it; dipshits)

Ready for the big snow!

(related, Apple’s Weather app has an annoying behavior on all platforms: the predicted precipitation for a day is from midnight to midnight except for the current day, where it’s “the next 24 hours starting right now”; as a result, the only time it provides useful information is at midnight)

Why is the west end of my house 10 degrees cooler?

Update: No, the correct answer is that the furnace was dying, and is being replaced completely on Tuesday (he says, writing from the future with the installers on site).

Because the thermostat was balancing the house based on multiple sensors; the east end has all the computers, and one of them was running all night making GenAI-gal wallpaper.

Clearly, the fix is to move that computer! Which is actually tempting, because that room has a gas fireplace that will still work if the power goes out. So I can heat the room one way or another…

(I’ve linked to a lot of Hiyodori’s Chiharu cartoons, but the complete English version of the Christmas story hasn’t been posted yet)

Every picture tells a story, but…

…with AI, that story is usually complete nonsense.

Mostly prepared for Teh Big Snow

Mind you, for all the hype, this is predicted to be a 1-day snow storm, and we’ve had enough big wind storms already this year that there won’t be a lot of fallen trees taking down power; it’ll mostly be idiots driving into transformers.

✔️ battery-powered emergency radio with hand crank

✔️ cooked food, canned food, freeze-dried camping food, emergency long-term food

✔️ potable water for several days

✔️ gas range; also camp stove and plenty of fuel (the outdoor gas grill would be challenging to use in the cold, but possible)

✔️ small pellet-burning grill and lots of fuel

✔️ gas water heater

✔️ gas fireplace that can be manually lit

✔️ blankets, hand-warmers, winter clothing

✔️ high-capacity UPS that can be used to power small appliances

✔️ fully-charged phone/tablet/chargers

✔️ fully-charged camera batteries that can be used to recharge phone

✔️ 2 cars with full tanks of gas, one of them garaged

✔️ pre-salted driveway and walk to help reduce accumulation

✔️ computer and Switch games if I have power

✔️ books if I don’t

✔️ pepper-ball guns, with fresh CO2 cartridges

✔️ actual guns, with fresh bullets

❌ seasonal service check for gas furnace

Oops.

Apparently the furnace stopped delivering heat on Friday, but between the thermal mass of the 2,500 square-foot basement and the big gaming PC making genai gals all night long, I didn’t notice until Saturday at 6pm.

At which point it was already 14°F outside and starting to snow.

I’ve got a call in to my HVAC people, but I doubt they’ll be able to get to me until Monday, what with the 17+ inches of snow predicted over the next 24 hours.

So it’s 64°F in most rooms tonight, and 67°F in the kitchen and family room thanks to the gas fireplace. Which, sadly, is at one end of the 75-foot-long house. The furnace is at least able to blow air around, so the heat from the fireplace will keep it tolerable in the rest of the house.

Status

But first, a little post-Christmas cheer

Part 2 of the English version of Chiharu’s Christmas story.

Home again warm again

The furnace guys came out Monday morning, determined that it was toast, and scheduled the installation of a new one for Tuesday morning.

Which meant I spent Monday afternoon enlarging the path to the street into something wide enough to get my car out (and their truck in). I only went as far as Home Depot to pick up two space heaters, which they still had a few of. I only really needed them for one day, but I can always put one in the garage to keep it above freezing out there, and the other one will be useful occasionally.

I also bought some softener salt, and the cashier was concerned that I might try to use it as ice melt, which everyone is completely out of. I reassured her that it would only go into the water softener, but didn’t bother explaining that it’s going to stay in the trunk of the car for a few days to put some weight over the rear tires for traction.

Tuesday morning, I was spreading some of my remaining salt on the driveway to ensure there weren’t any icy spots for the furnace guys, and they showed up just as I finished. Start to finish, it took them four hours, which left about two hours for the house to warm back up 15 degrees before I picked my sister up at the airport.

I’m not going to try to clear the rest of the driveway today, just widen the lane a bit and scrape up whatever the wind has blown back over the previous work. I’m much too sore to do another whole 75-foot-long lane, and it’s too cold out there.

And add more salt. Which I now have plenty of, after finding one brand in stock at one store (Lowe’s). I’m storing it in the trunk of my car for extra traction.

(the shiny new Z Image base model is primarily designed to be used to create fine-tuned models, so it’s difficult to use directly, and can be disappointing compared to the effortless goodness of the Turbo model)

Frieren 2, episode 3

Not the usual hot-springs episode. Or the usual first-date episode. As usual for Frieren, it’s the journey that matters.

Related,

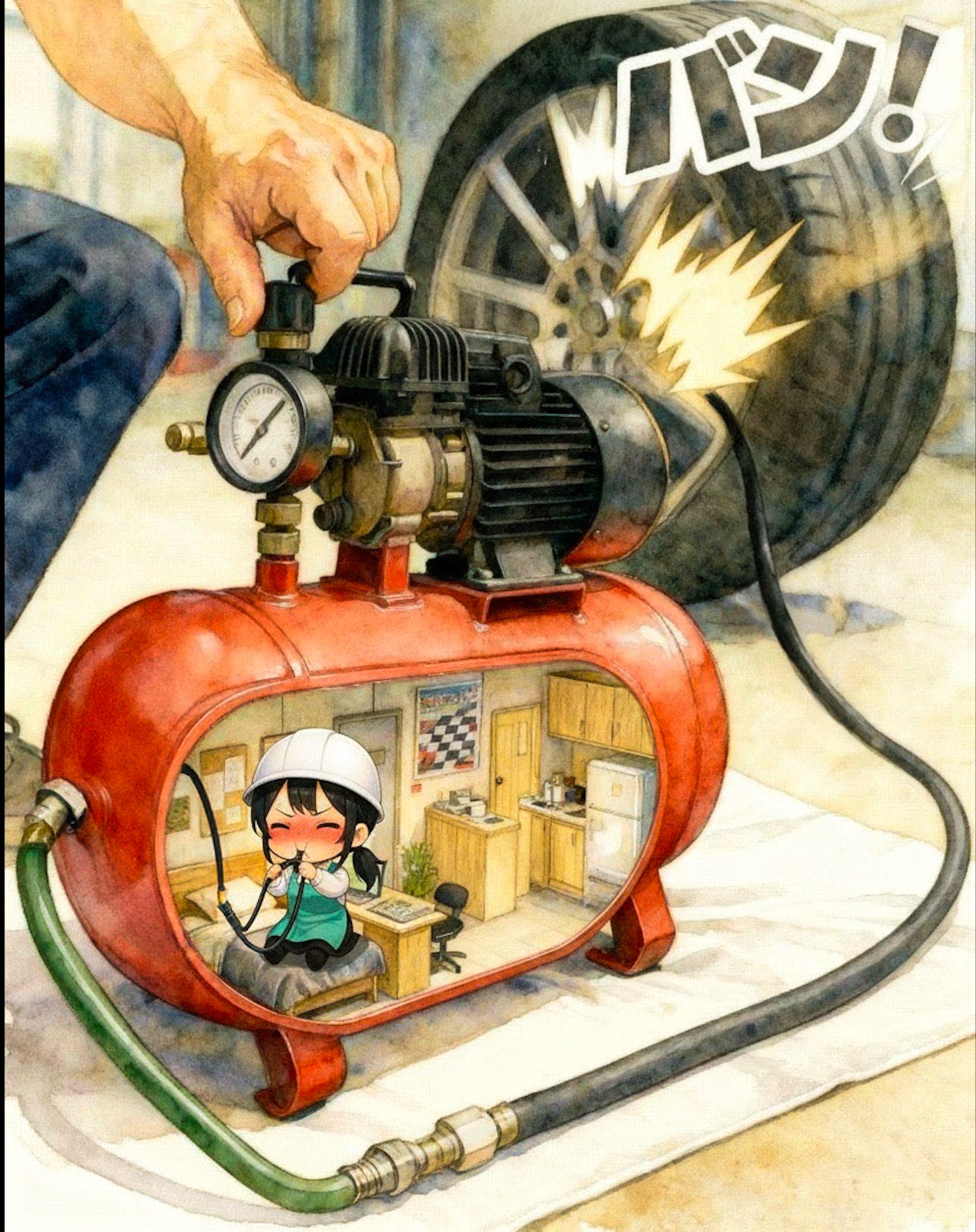

I would not have guessed that Himmel had the same voice actor as Bakugo and Accelerator. I learned this after viewing a short clip from the Japanese-dubbed Chinese animation series “The Last Summoner” and finding the voices of hero and heroine kinda familiar.

It’s a shouty, tropey Boy-Meets-Goddess show where she spends most of her time as a bratty shoulder chibi, because full-figured-full-power form is too expensive to maintain, and any power use at all requires stuffing her face. Y’know, a Completely Original Story™.

The punchline is that her voice actress is Frieren.

(Anime News Network has no record of this show, so off to MyAnimeList; licensed by Crunchyroll, by the way, who lists it as two seasons, but it’s just Chinese vs Japanese audio)

Unrelated,

Chaney’s latest series “Accidental Astronaut” is called that because…

…“Flash Gordon” was taken.

Z Image Base knows ray-guns and retro, but the name “Flash” is rather heavily trained on the wrong character. It does get some things right, though:

MZ4250 pulls a Penzeys

The creator of a large collection of excellent 3D models for gaming miniatures just announced on his Patreon that he’s got severe IDS (ICE Derangement Syndrome), and has invited people who disagree with him to stop supporting him financially.

His proposal is acceptable.