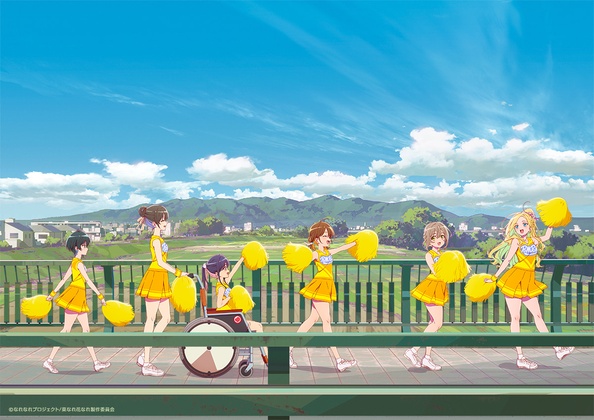

Fan-Service Rocks!, episode 3

Our second adult fan-service provider has entered the show in the form of a busty underrim-glasses-wearing bookworm, Imari. Could this show get any better? Well, yes, but jello-wrestling probably isn’t on the schedule.

This week, everyone falls in a hole in order to stumble across the Mineral Of The Week. Nagi is not only the voice of reason, but also the voice of A Proper Mentor, getting Imari out of her comfort zone and advancing her career path.

Verdict: thicc is apparently justice.

Windsurf stays on my mind…

My deathmatch project was quite small and self-contained, and even offline LLMs were able to wrap their tiny little “minds” around it, more or less. For my next attempt, I used the free trial of Windsurf to build something more elaborate: a vacation planner that mixes the categorized lists of Trello with the easy drag-and-drop of the Planyway plugin. That is, jettison all the other crap in both apps, and just have a bunch of events that can easily be moved around both between days and within days, so you can lay out an itinerary for the day and quickly update it on the fly. The real bonus is getting timezones right, which Trello still doesn’t do; it always edits and displays events in the web browser’s local time zone.
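A minimal sketch of the timezone idea, assuming each event is stored as a UTC instant plus the IANA zone name of the place where it happens. The `Event` class and its field names are hypothetical and are not taken from the actual app:

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from zoneinfo import ZoneInfo


@dataclass
class Event:
    """Hypothetical itinerary entry: a UTC instant plus the zone it happens in."""
    title: str
    start_utc: datetime   # timezone-aware, always UTC
    tz_name: str          # IANA zone name, e.g. "Asia/Tokyo"

    @classmethod
    def from_local(cls, title, local_naive, tz_name):
        """Build an event from the wall-clock time at the event's location."""
        local = local_naive.replace(tzinfo=ZoneInfo(tz_name))
        return cls(title, local.astimezone(timezone.utc), tz_name)

    def local_start(self):
        """Wall-clock start time where the event takes place."""
        return self.start_utc.astimezone(ZoneInfo(self.tz_name))


# Entered as 9:00 in Tokyo, and shown as 9:00 in Tokyo no matter where the
# browser (or the server) happens to be.
event = Event.from_local("Breakfast", datetime(2025, 4, 1, 9, 0), "Asia/Tokyo")
print(event.local_start().strftime("%Y-%m-%d %H:%M"))
```

The design choice is that the browser's zone never enters into it: editing and display both go through the event's own zone.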

This time the experience wasn’t so smooth. I’m a dozen passes in, and it’s deferred the implementation of all of the drag-and-drop features because that’s apparently hard. The basic framework is there, but despite being part of the original specs, a fair number of features required multiple attempts to implement at all, much less correctly. Timezone issues required multiple screenshot uploads with detailed explanations. On the bright side, the screenshots and explanations actually worked.

In other words, it required a very detailed set of specifications, didn’t implement all of them, and would never have made any progress at all without extensive human testing and debugging experience. Even though this is still a tiny application (~1,000 lines of Python, ~1,000 lines of Javascript, and ~800 lines of HTML/CSS), it couldn’t be “vibed”.

Interesting note: it never occurred to the LLM that the color used to display text on a colored background mattered. I had to invoke the magic words “best practices for accessibility” to restrict the background color palette, and “strongly contrasting color” to ensure legibility. It then used actual Web Content Accessibility Guidelines. I’d required best practices for security and authentication, but did not add the same wording to each other section of the specs…
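For reference, the WCAG 2.x contrast check is short enough to sketch. This assumes colors arrive as `#rrggbb` strings and that text is restricted to black or white; the function names are illustrative, not anything from the generated app:

```python
def _linear(channel):
    """Convert one 0-255 sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def luminance(hex_color):
    """Relative luminance of a '#rrggbb' color."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linear(r) + 0.7152 * _linear(g) + 0.0722 * _linear(b)


def contrast_ratio(a, b):
    """WCAG contrast ratio between two colors, from 1.0 up to 21.0."""
    hi, lo = sorted((luminance(a), luminance(b)), reverse=True)
    return (hi + 0.05) / (lo + 0.05)


def text_color_for(background):
    """Pick black or white text, whichever contrasts more with the background."""
    return max(("#000000", "#ffffff"),
               key=lambda fg: contrast_ratio(fg, background))


for bg in ("#ffeb3b", "#1a237e"):
    fg = text_color_for(bg)
    print(bg, fg, round(contrast_ratio(fg, bg), 1))
```

WCAG level AA asks for at least 4.5:1 for normal-sized text, so a background palette can be filtered by rejecting any color where neither black nor white reaches that ratio.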

Fan-Service Rocks!, episode 4

It’s always surprising when they mention that Ruri’s in high school, since she often behaves like a much-younger girl. This week, they add a reminder in the form of a classmate who can stack up next to Our College Gals. Also a future partner-in-rock-crime who’s slowly being nudged in front of the camera.

The mineral-of-the-week is itty-bitty little grains of sapphire, slowly being traced up-river by tedious visual inspection of individual grains of sand. My back hurts just looking at Ruri leaning over a microscope; there’s a reason professors pawn this sort of work off on grad students, and that Nagi pawns it off on Ruri…

Honestly,

I expected more from Ai Shinozaki’s first tentacle video.

Lost in translation: Isekai Twink In Butchland

Seven Seas has announced new translated manga titles, including Let’s Run an Inn on Dungeon Island! (In a World Ruled by Women). This is softcore porn featuring a runty little guy who gets transported to a world where big buff women dominate, and as they wash up on his deserted island, he alternately bangs them silly and endures cliché role-reversed sexual harassment. Alternate title: Cocksman of Reverse-Gor. Of course he also has OP cheat magic.

New toy: Canon imagePROGRAF PRO-310

I haven’t owned an honest-to-Ansel photo printer in a very long time. I had one of the original 4x6 HP PhotoSmart printers with the lickable ink, and I had a rather finicky dye-sub printer for a while, but both were back when I was shooting on film and scanning slides with a color-unmanaged SCSI Nikon scanner that had an unreliable automatic slide feeder, which would be 20+ years ago.

It’s been on my mind for a while now, and the cleanup of my office made room for one, but I managed to talk myself out of buying a big one, settling for Canon’s A3+ (13” x 19”) model. Mostly because of sticker shock over the cost of ink cartridges for the A2/B3 PRO-1100.

Color management has come a long way over the past 20 years, to the point that every print I’ve tried has come out exactly as expected, including my genai gals and memes. And the ecosystem around fine-art printers is stable, with third-party paper companies publishing ICC color profiles that smoothly integrate into the workflow, so that you can pretty much just click “print”.

The only significant advance in framing technology, on the other hand, is the widespread availability of non-reflective coated glass at framing shops. You’ll still get a lot of glare on premade frames, but if you can afford professional framing, your stuff will be a lot more viewable.

Heartbreakingly expensive, though. Even with the fortunately-timed 70%-off sale at Michaels, my last framing batch was the size of a mortgage payment. Filled up a lot of wall space, but still not something to do lightly.

If I stick to commodity gallery-style frames in standard sizes, the PRO-310 will pay for itself, the ink, and the paper by filling just one wall of my living room with my Japan pics. The only trick is that for many common frame sizes, I need to print multiple images on one sheet of paper and cut them apart with a rotary trimmer, but I have one of those, and Canon’s software allows you to save custom multi-image print layouts and quickly drag images into them.

(and, yes, I printed Red Waifu)

Wallpaper Waifus

Speaking of which, I made a batch of 500 retro-SF dynamically-prompted pin-up gals in 9x16 aspect ratio to serve as rotating wallpaper on the vertical monitor, then deathmatched them down to 18, and while one poor gal spontaneously grew an extra finger during the refine/upscale process, it should be fixable with a bit of variation seeding. Later; at 33 minutes/image to refine and upscale, I’m done for the day.

I usually just post full-sized images and let the browser scale them down, but 18 2160x3888 images is a lot of mumbly-pixels, even lazy-loading them, so click the preview images to see the big ones (no, not Nagi).

Fan-Service Rocks!, episode 5

This week, Thicc Girls goes double platinum. Can’t wait for the world tour. Poor Imari really got thrown in the deep end, though; “Oh, we’re not panning in the river today, we’re taking these boulders to the mountains!”

Alya Sometimes Hides The Salami: In Russian

New explicit video from Maplestar. (there are some known errors in this version that will be fixed soon; none of them affect the “thrust” of the story…)

(he gets her in the end; also, she gets him in the end)

Windsurf goin’ down

Yesterday’s attempts at app enhancement foundered on the rocks of “Model provider unreachable”. It still charged me “credits” for trying, but didn’t do anything. This would be annoying if these weren’t free trial credits, but it still brings me closer to running out. Pro tip: don’t keep trying when you hit this error…

Dropping from the preview of Sonnet 4.5 to standard Sonnet 4 might have reduced its “intelligence” a bit, but had the advantage of actually running. I hadn’t switched to 4.5 anyway; it did that for me to promote the new one, charging less credits to do… less.

Today’s original-requirement-finally-implemented is all-day events. I think I have just enough credits left in my trial to add a user-prefs page with defaults and password changes (“using best practices for security”), session cookies (“using best practices for security”), and deep-copying an existing project into a new one.

Once I run out of credits, then I decide if it’s worth $15/month to throw more of my dusty old projects at it. Alternatively, since Windsurf is mostly just a custom VS Code skin connecting to third-party models (with some secret sauce in the system prompts and context handling), and it’s been suggesting the Claude Sonnet models, I could download VS Code and the Claude extension and pay them directly, since I’ve gotten good creative results from the free level of Claude.

It also appears that you can run Claude’s coding assistant inside a Docker container and just export your repo directory into it, allowing it to run commands on a completely virtualized environment without network access to anything but their LLM API (once it downloads its CLI tool, which of course is written in node.js, sigh).

(Will I release the final project? Sure, why not; it’s not like I wrote it…)

Fan-Service Rocks!, episode 6

Just noticed that Crunchyroll has a PG rating for this show, promising nudity and profanity. So not happening.

Anyway, Ruri suffers a brief bout of imposter syndrome, then rereads her notes to discover that she did miss something, and only careful review kept them on the right track. In the end, they not only find the sapphires, but uncover a bit of local lore.

What sort of hills and valleys will they explore next?

…like our primitive ancestors did…

I rearranged things in my office so that the 4K vertical monitor sits between the MacBook Air and the Mini, connected to both. Given that both Macs have widescreen displays, naturally I configured the Dock to appear on the left, as I always do. Except that In Their Infinite Wisdom, Apple has decreed that if the Dock is on the left, it must appear on the left of the leftmost monitor, even if that is not the main monitor. You can force the menu bar to appear on the main monitor regardless of position, but if the Dock is on the side, it must be aaallllllllll the way to that side. Even if that’s literally several feet away from the display that’s right in front of your face.

So I had to move the Dock to the right of my laptop display, like some sort of heathen who eats from a dumpster. It’s quite unnerving. I don’t look there. I never look there. I have over twenty years of practice not looking there.

Someday my prints will come…

Amazon order for $RANDOM_OBJECT placed on September 28. Shipped via UPS on September 29. Until about an hour ago, the last update from UPS had it in Illinois on the 2nd, but now it’s arrived in… California!

I’m old enough to remember when Amazon was good at logistics. Also “packaging items so they don’t get destroyed in transit”.

Bookmarking for later,

OpenAI has discovered that it bought a pig in a poke with Jony Ive’s Mysterious Pocket AI Device. For 6.5 billllllllion dollars. To make it work, whatever “it” is, they not only need more AI server capacity, but also a better-than-their-flagship-chatbot to drive it. And the Ars commentariat will be there to do whatever it is they do.

They really should have asked Grok and Claude if this was a wise investment. Or maybe they did…

Claude dev containers in VS Code

Yeah, there’s a lot of hand-waving involved in how to do this, with no provision for “hey, your official Dockerfile blew chunks”. Meanwhile, I’m not the only person frustrated with Windsurf’s expensive failure policy. It does no good for them to have a polished GUI if it eats up your monthly credit balance in an AI-enhanced “abort, retry, fail” loop.

Not that I actually want to use VS Code with or without a vendor-specific skin on top. The only reason I even tried a pay-to-play “AI” IDE is that it produced a better coding experience for my initial test project (at no cost to me), and all of that goodwill was lost when the second project got bogged down and had to be spoonfed half a dozen screenshots to get it back on track. The vacation scheduler was over 50 passes in when I ran out of trial credits, with some functionality still untested because of blocking bugs, and it might have been able to fix them if it hadn’t eaten a bunch of credits.

Anyway, I bit the bullet and got a paid Claude subscription for a while, and if I can get their Coding UI to work in a secured sandbox, I’ll turn it loose and see what it does to the remaining bugs and feature requests. No chance in hell I’m going to give a Node.js “AI” app direct access to my shell…

(“no, you can’t tempt me; I know there’s node.js under that fur!”)

Wrong model!

I was reviewing image-generation prompts enhanced by an LLM, and ran across something worse than having it randomly switch to Chinese (which it also did):

Note: Please replace “her” with the appropriate pronoun depending on the woman’s gender.

Yeah, that one’s going in the trash heap. The LLM, not just the prompt. (it was a derivative of OpenAI’s free model)

Cross-country

You’ll never believe this, but…

That Amazon package that went from California to Illinois, had no tracking updates for five days, then appeared back in California? Supposedly left California early Monday morning and hasn’t been spotted since. It’s “still on the way”, allegedly by Friday, but has reached the point where Amazon is now offering me a refund.

Since I don’t actually need it for anything soon, I’m just going to see what happens. Will it suddenly acquire a new tracking number or shipping company, as they’ve done before, or will it just show up a few months from now, as they’ve also done before?

The Flying Sister

Not to be confused with The Flying Nun. I was expecting my sister to fly into town on Wednesday. Monday afternoon she called to say she’d missed a connection on one of her regular business flights, and if she was going to have to Zoom into a meeting, she could do that from my house. So she was going to come to my place early.

Then she (and 20 other planeloads of people) got stuck on the tarmac at O’Hare and missed that connection, with no later flights to Dayton. Fortunately she lives in Chicago, so she wasn’t just stuck in an airport overnight. Although it took them three hours to find her bags…

So close…

They almost did the meme:

Waifu-a-go-go

[trivia: the Hollywood nightclub “Whisky a Go Go” was named after the first French disco club, which was named after the British movie “Whisky Galore!” (a-gogo being Frogspeak for “galore”)]

The dynamic wildcarding is shaping up nicely (although I need to split it up into categorized sets, and generate a wider variety now that I’ve got the prompting down), and I was in the mood to generate a big batch of pinup gals, but it just takes too damn long, and I can’t fire up a game on the big PC while all its VRAM is being consumed fabricating imaginary T&A. Belatedly it occurred to me that if I’m going to do a separate refine/upscale pass on the good ones anyway, why not do the bulk generation at a lower resolution?

Instead of the nearly-16x9 resolution of 1728x960 upscaled 2.25x to 3888x2160, I dropped it to 1024x576, which can be upscaled 3.75x to exactly the monitor’s 3840x2160 resolution. That cuts the basic generation time from 90 seconds to 35, and if I also give up on using Heun++ 2/Beta for the upscaling (honestly, the improvements are small, and it changes significant details often enough to force me to retry at least once), the refine/upscale time drops from 33 minutes to 10. That makes it less annoying to ask for several hundred per batch.
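The arithmetic is easy to check. A quick sketch using only the numbers quoted above (the `upscaled` helper is just for illustration):

```python
def upscaled(width, height, factor):
    """Return the pixel dimensions after scaling both sides by factor."""
    return round(width * factor), round(height * factor)


old = upscaled(1728, 960, 2.25)    # the earlier workflow
new = upscaled(1024, 576, 3.75)    # the lower-resolution bulk pass
print(old, new)

# Fraction of the original pixel count that the bulk pass now has to generate,
# next to the fraction of the original generation time (35s vs 90s).
pixel_fraction = (1024 * 576) / (1728 * 960)
print(f"{pixel_fraction:.2f} of the pixels, {35 / 90:.2f} of the time")
```

1024x576 is also exactly 16:9, where 1728x960 works out to 9:5, which is why the new size lands on the monitor's native resolution with no cropping.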

I did get some odd skin texturing on the first upscaled image, so I tried switching to a different upscaler. That changed a bunch of details, so now I’ve downloaded new upscalers to try. TL/DR, the more you upscale, the more of your details are created by the upscaler.

On a related note, file under peculiar that in MacOS 15.x, Apple decided to strictly enforce limits on how often you can rotate wallpaper. It used to be that the GUI gave you a limited selection but you could just overwrite that with an AppleScript one-liner. Nope, all gone; now you’re only permitted to have the image change every 5 seconds, 1 minute, 5 minutes, 15 minutes, 30 minutes, 1 hour, or 1 day. No other intervals are considered reasonable. Apple knows you don’t need this.

No doubt the QA team that used to test this stuff was axed to fund the newly-released Liquid Ass GUI that unifies all Apple platforms in a pit of translucent suck.

(just as I’ve settled on “genai” as shorthand to refer to the output of LLMs and diffusion models, I’ve decided I need a short, punchy term for the process of building prompts, selecting models and LoRA, and iterating on the results; I could, for instance, shorten the phrase “Fabricating Pictures” to, say, “fapping”…)

Fan-Service Rocks!, episode 7

[wow, I must be tired and distracted by the combination of a houseguest and a busy on-call week; I didn't even notice that I never reviewed the episode...]

This week, The Tale Of The Abandoned Rock-Lover Who Finally Found a Home. With occasional really goofy face distortions that look like CGI rotations without the middle bits. On the plus side, we get one of Our Gals into a bikini. On the minus side, it's Ruri, who's definitely girl-shaped, but no competition even for Gal Gal, much less Our Varsity Over-The-Shoulder-Boulder-Holder Team.

The Tedium Express

That Package(TM) has moved again. Two days after leaving California, it was back to the same depot in Illinois that it visited a full week ago. And then it made it to (the other end of) Ohio Thursday night. Looking this morning, it appears to have reached the depot that’s literally down the street from my house.

So unless it goes back to California again, I should finally have it tonight.

Tell me you know nothing about legibility…

…without telling me you know nothing about legibility:

(also, “tell me you’re desperately hoping people will mistake your derivative crap for Japanese derivative crap…”)

More Claudification

I took a YAML file of lighting/composition/angle prompt components and threw it at Claude, instructing it to break them up into categories like indoor/outdoor, day/night, portrait/natural lighting, color/black-and-white, etc, then flesh out each category in the new YAML file up to 50. It worked quite well, with a few exceptions:

- the output wasn’t valid YAML; I had to correct several indentation errors, and two cases where it started a new category at the end of a line.

- it stopped twice and asked me to press the “Continue” button, because it took too long for a single request.

- the original file had a top-level “scene” key; this was deleted, and the new file’s structure looks like “color/outdoor/day/portrait”. The dynamicprompts library needs a top-level key for organization, which is trivial to add at the top and re-indent, but more annoying is that the hierarchy would make more sense as “scene/portrait/color/outdoor/day”, which is easier to add globbing to (“scene/portrait/color/*” to get both indoor and outdoor lighting at all times of day). This is a more difficult refactoring, so I’ll throw the corrected file back at Claude and tell it to do the grunt work.
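To illustrate why the key order matters for globbing, here is a rough sketch that treats the wildcard file as a nested dict and matches leaf paths with the standard library's `fnmatch`. The sample data is invented, and this is not how the dynamicprompts library resolves wildcards internally; it only shows the shape of the lookup:

```python
from fnmatch import fnmatch

# Hypothetical miniature of the reorganized wildcard file, as a nested dict.
scenes = {
    "scene": {
        "portrait": {
            "color": {
                "indoor": {"day": ["soft window light"],
                           "night": ["warm lamp glow"]},
                "outdoor": {"day": ["golden hour backlight"],
                            "night": ["neon rim light"]},
            },
            "bw": {"indoor": {"day": ["hard side light"]}},
        },
    },
}


def leaf_paths(tree, prefix=""):
    """Yield (path, items) for every list at the bottom of the nested dict."""
    for key, value in tree.items():
        path = f"{prefix}/{key}" if prefix else key
        if isinstance(value, dict):
            yield from leaf_paths(value, path)
        else:
            yield path, value


def select(tree, pattern):
    """Collect the items under every leaf path that matches a glob pattern."""
    return [item for path, items in leaf_paths(tree)
            if fnmatch(path, pattern) for item in items]


print(select(scenes, "scene/portrait/color/*"))
```

With the type and color at the front of the path, a single trailing `*` sweeps up every location and time of day underneath.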

Next up will be applying the same categorization and refresh to the settings, poses, and outfits, but not until I recover from having a houseguest this week…

Fan-Service Rocks!, episode 8

Our DFC Ponytail-Bearing Redheaded Schoolgirl Pal (currently only known by her last name, although now that she’s slipped up and called Our Crystal-Crazy Heroine Ruri-chan, she’s sure to be Shoko-chan soon) stumbles across an unusual orange rock, then stumbles and loses it, leading to a deep-woods adventure that wipes out even the energetic Ruri, terminating in an abandoned factory. Whatever they were producing, the conditions were just right for making big orange crystals, as explained by Our Well-Rounded Mentor.

Nagi’s wisdom is as deep as…

(by the way, this is only the second role for L’il Red’s voice actress)

There And Back Again

My wayward Amazon package finally arrived Friday night, and it was even intact (mildly surprising since it had no packaging whatsoever, just a label slapped on the side). There was no indication of how it went astray, like a second label or a half-dozen scenic postcards, but there is a punchline to the story.

The first entry in Amazon’s tracking has it starting out in San Diego, CA on the 28th. From there it went to: Cerritos, CA on the 29th; Hodgkins, IL on the 2nd; Bell, CA on the 6th; La Mirada, CA on the 7th; Hodgkins, IL and two cities in Ohio on the 9th; then the UPS depot up the street from me early on the 10th, and finally onto my front porch that evening.

The punchline? The shipping label on the package says it really shipped from Hebron, KY. Which is about an hour’s drive from my house.

(I guess it just wanted a little more flight time)

The worst thing about Larry Correia’s Academy Of Outcasts…

…is that it convinced Amazon I’m interested in ‘LitRPG’, a genre I have repeatedly run away screaming from. Not just because the genre is cursed with premature subtitlisis and epicia grandiosa, things that have been turning me away from overambitious new authors for decades.

The book? Fun, although I kept getting distracted by on-call alerts, so it wasn’t an in-one-sitting kind of read. I’ll buy the next one.

(announcing your Grand Epic Plans on the cover of your first novel is a curse that was infesting the SF/fantasy mid-list back when publishers used to sign damn near every first-time novelist to a three-book contract with ambitious delivery dates, only for both sides to discover that it takes more than a year to write a decent sequel to a book that was written part-time over five years)

“You’ve heard of ‘filthy rich’? We’re ‘disgusting’”

In the end, I didn’t have Claude restructure the YAML file from $color/$loc/$time/$type to scene/$type/$color/$loc/$time; instead I had the bright idea of molesting it with a one-liner (unpacked for clarity):

    grep : scenes.yaml |
      perl -ne '
        next if /^#/;
        ($s,$k) = m/^( *)([^:]+):$/;
        $i = int(length($s)/2);
        $p[$i] = $k;
        print "." . join(".", @p[0..$i])," ",
          join("/", @p[0,4,1,2,3]),"\n" if $i == 4
      ' |
      sort -k2 |
      while read a b; do
        echo "# $b"
        yq $a scenes.yaml
        echo
      done

TL/DR: I used the indentation level to populate an array, printed out the original structure as a yq selector and the new structure as a path, sorted by the new path, then dumped out each section. After that it was a single search-and-replace to indent all the items, and a quick Emacs macro to convert the paths into the new YAML structure. The thing that took the longest was removing the redundant indented keys, which technically wasn’t necessary to create the correct YAML structure.

Probably took less time than writing an explicitly detailed request to Claude.
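The same level-shuffling can be sketched in Python, assuming the YAML has already been loaded into nested dicts with every list at the same depth. The `flatten` and `reorder` helpers are hypothetical names, and this is an alternative to the one-liner above rather than a translation of it:

```python
def flatten(tree, path=()):
    """Yield (key_path, leaf) pairs for a nested dict."""
    for key, value in tree.items():
        if isinstance(value, dict):
            yield from flatten(value, path + (key,))
        else:
            yield path + (key,), value


def reorder(tree, order):
    """Rebuild a nested dict with its key levels permuted by `order`."""
    result = {}
    # Sort by the new path so the output sections come out grouped.
    pairs = sorted(flatten(tree), key=lambda kv: [kv[0][i] for i in order])
    for path, leaf in pairs:
        new_path = [path[i] for i in order]
        node = result
        for key in new_path[:-1]:
            node = node.setdefault(key, {})
        node[new_path[-1]] = leaf
    return result


# Invented sample data in the old scene/$color/$loc/$time/$type layout.
old = {"scene": {"color": {"outdoor": {"day": {
    "portrait": ["rim light"],
    "natural": ["overcast"],
}}}}}

# Same index permutation as the Perl array slice: 0,4,1,2,3.
new = reorder(old, (0, 4, 1, 2, 3))
print(new)
```

Dumping `new` back out with a YAML library would replace the search-and-replace and Emacs macro steps, at the cost of losing any comments in the original file.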

Waifu Harem Rotation

To overcome the Apple-imposed limitations on wallpaper changes, I instructed Claude to write a little Python script that shuffles separate sets of images for each display at a chosen interval. I called it waifupaper, of course:

    #!/usr/bin/env python3
    """
    Waifupaper - Changes MacOS wallpapers at fixed intervals

    Bugs:
    - doesn't work if wallpaper is currently set to rotate.
    - fails to load images if called without full path to directories.
    """

    import argparse
    import os
    import random
    import subprocess
    import sys
    import time
    from pathlib import Path
    from collections import defaultdict


    def get_directory_state(directory):
        """Get the current state of a directory (modification time and file count)."""
        directory = Path(directory)
        try:
            # Get the directory's modification time
            mtime = directory.stat().st_mtime
            # Count image files
            image_extensions = {'.jpg', '.jpeg', '.png', '.bmp', '.gif', '.tiff', '.tif', '.heic'}
            file_count = sum(1 for f in directory.iterdir()
                             if f.is_file() and f.suffix.lower() in image_extensions)
            return (mtime, file_count)
        except Exception:
            return None


    def get_image_files(directory):
        """Get all image files from a directory."""
        image_extensions = {'.jpg', '.jpeg', '.png', '.bmp', '.gif', '.tiff', '.tif', '.heic'}
        directory = Path(directory)

        if not directory.exists():
            print(f"Error: Directory '{directory}' does not exist", file=sys.stderr)
            sys.exit(1)

        if not directory.is_dir():
            print(f"Error: '{directory}' is not a directory", file=sys.stderr)
            sys.exit(1)

        images = [
            str(f.resolve()) for f in directory.iterdir()
            if f.is_file() and f.suffix.lower() in image_extensions
        ]

        if not images:
            print(f"Error: No image files found in '{directory}'", file=sys.stderr)
            sys.exit(1)

        return images


    def get_display_count():
        """Get the number of connected displays."""
        try:
            # Use system_profiler to get display information
            result = subprocess.run(
                ['system_profiler', 'SPDisplaysDataType'],
                capture_output=True,
                text=True,
                check=True
            )
            # Count occurrences of "Display Type" or "Resolution"
            count = result.stdout.count('Resolution:')
            return max(1, count)  # At least 1 display
        except subprocess.CalledProcessError:
            return 1  # Default to 1 display if command fails


    def set_wallpaper(image_path, display_index=0):
        """Set wallpaper for a specific display using AppleScript."""
        # AppleScript to set wallpaper for a specific desktop
        script = f'''
        tell application "System Events"
            tell desktop {display_index + 1}
                set picture to "{image_path}"
            end tell
        end tell
        '''
        try:
            subprocess.run(
                ['osascript', '-e', script],
                check=True,
                capture_output=True
            )
        except subprocess.CalledProcessError as e:
            print(f"Warning: Failed to set wallpaper for display {display_index + 1}: {e}", file=sys.stderr)


    def main():
        parser = argparse.ArgumentParser(
            description='Rotate wallpapers on Mac displays at fixed intervals',
            formatter_class=argparse.RawDescriptionHelpFormatter,
            epilog='''
    Examples:
      %(prog)s ~/Pictures/Wallpapers
      %(prog)s ~/Pictures/Nature ~/Pictures/Abstract -i 60
      %(prog)s ~/Pictures/Wallpapers -s -i 120
      %(prog)s ~/Pictures/Nature ~/Pictures/Abstract -1 -3
    '''
        )
        parser.add_argument(
            'directories',
            nargs='+',
            help='One or more directories containing wallpaper images'
        )
        parser.add_argument(
            '-i', '--interval',
            type=int,
            default=30,
            help='Interval in seconds between wallpaper changes (default: 30)'
        )
        parser.add_argument(
            '-s', '--sort',
            action='store_true',
            help='Sort images instead of shuffling (default: shuffle)'
        )
        parser.add_argument(
            '-1', '--display1',
            action='store_true',
            help='Only affect display 1'
        )
        parser.add_argument(
            '-2', '--display2',
            action='store_true',
            help='Only affect display 2'
        )
        parser.add_argument(
            '-3', '--display3',
            action='store_true',
            help='Only affect display 3'
        )
        parser.add_argument(
            '-4', '--display4',
            action='store_true',
            help='Only affect display 4'
        )
        parser.add_argument(
            '-v', '--verbose',
            action='store_true',
            help='print verbose output'
        )

        args = parser.parse_args()

        # Determine which displays to affect
        selected_displays = []
        if args.display1:
            selected_displays.append(0)
        if args.display2:
            selected_displays.append(1)
        if args.display3:
            selected_displays.append(2)
        if args.display4:
            selected_displays.append(3)

        # If no specific displays selected, affect all displays
        affect_all_displays = len(selected_displays) == 0

        # Validate interval
        if args.interval <= 0:
            print("Error: Interval must be a positive number", file=sys.stderr)
            sys.exit(1)

        # Get display count
        num_displays = get_display_count()
        if args.verbose:
            print(f"Detected {num_displays} display(s)")

        # Validate selected displays
        if not affect_all_displays:
            for display_idx in selected_displays:
                if display_idx >= num_displays:
                    print(f"Warning: Display {display_idx + 1} selected but only {num_displays} display(s) detected",
                          file=sys.stderr)
            # Filter out invalid display indices
            selected_displays = [d for d in selected_displays if d < num_displays]
            if not selected_displays:
                print("Error: No valid displays selected", file=sys.stderr)
                sys.exit(1)

        # Prepare image lists for each display
        display_images = []
        directory_states = {}  # Track directory modification times

        # Determine which displays will be managed
        if affect_all_displays:
            managed_displays = list(range(num_displays))
        else:
            managed_displays = sorted(selected_displays)

        if args.verbose:
            print(f"Managing display(s): {', '.join(str(d + 1) for d in managed_displays)}")

        for i in managed_displays:
            # Use the corresponding directory, or the last one if we run out
            dir_index = min(managed_displays.index(i), len(args.directories) - 1)
            directory = args.directories[dir_index]
            images = get_image_files(directory)

            if args.sort:
                images.sort()
            else:
                random.shuffle(images)

            display_images.append({
                'images': images,
                'index': 0,
                'directory': directory,
                'display_index': i  # Store the actual display index
            })

            # Track initial directory state
            directory_states[directory] = get_directory_state(directory)

            if args.verbose:
                print(f"Display {i + 1}: {len(images)} images from '{directory}'")

        if args.verbose:
            print(f"\nRotating wallpapers every {args.interval} seconds")
            print("Monitoring directories for changes...")
            print("Press Ctrl+C to stop\n")

        try:
            iteration = 0
            while True:
                # Check for directory changes before setting wallpapers
                for display_data in display_images:
                    directory = display_data['directory']
                    current_state = get_directory_state(directory)

                    # If directory state changed, reload images
                    if current_state != directory_states.get(directory):
                        display_num = display_data['display_index'] + 1
                        if args.verbose:
                            print(f"📁 Directory changed: '{directory}' - reloading images...")
                        new_images = get_image_files(directory)
                        if args.sort:
                            new_images.sort()
                        else:
                            random.shuffle(new_images)
                        display_data['images'] = new_images
                        display_data['index'] = 0
                        directory_states[directory] = current_state
                        if args.verbose:
                            print(f" Loaded {len(new_images)} images for display {display_num}\n")

                # Set wallpaper for each display
                for display_data in display_images:
                    images = display_data['images']
                    current_index = display_data['index']
                    actual_display_idx = display_data['display_index']
                    image_path = images[current_index]
                    image_name = Path(image_path).name

                    if args.verbose:
                        print(f"Display {actual_display_idx + 1}: {image_name}")

                    set_wallpaper(image_path, actual_display_idx)

                    # Move to next image, wrap around if needed
                    display_data['index'] = (current_index + 1) % len(images)

                    # Reshuffle when we complete a cycle (if not sorting)
                    if display_data['index'] == 0 and not args.sort and iteration > 0:
                        random.shuffle(display_data['images'])
                        if args.verbose:
                            print(f" → Reshuffled images for display {actual_display_idx + 1}")

                iteration += 1
                if args.verbose:
                    print()
                time.sleep(args.interval)

        except KeyboardInterrupt:
            if args.verbose:
                print("\n\nWallpaper rotation stopped.")
            sys.exit(0)


    if __name__ == '__main__':
        main()

Fun fact: Apple’s virtual desktop ‘spaces’ have their own wallpaper settings, which means that each display has different wallpaper settings for each ‘space’. And if you want to keep the menubar on your main display, you have to tick the ‘use same spaces on all displays’ setting.

But ‘spaces’ are not manageable via AppleScript, so changing wallpaper affects only the active space. Which means that if this script is running in the background, it will effectively follow you from space to space, updating the wallpaper on the active one. Which is kind of what I wanted anyway, but isn’t what the built-in rotation does. Apple’s standard behavior uses undocumented private APIs, which is a very Apple way to do things these days.

Work-still-in-progress

Revised the lighting & composition wildcards, revised the retro-SF costume wildcards. Next up will be throwing all the retro-SF location prompts into the Claude-blender and having it generate new ones broken down by category; not only are the current ones getting over-familiar, they come from several different models and a variety of prompts, with varying degrees of retro-SF-ness. After that I’ll probably throw the pose file at it; I did some manual categorization, but it’s still a real mish-mash of styles. Either that or the moods and facial expressions, which most models don’t handle well conceptually; I’m going to try asking for the physical effect of words like “happy”, “sexy”, “eager”, “playful”, “satisfied”, etc, and see if it produces something an image-generator can differentiate from “resting bitch face”.

Yet Another Example of words not to use with Qwen Image: the fashion term “cigarette pants” is taken literally, with half-smoked butts randomly placed around the hips. Only once did it put one in the gal’s hand, and I don’t think it ever interpreted it as “skinny pants”.

Also, I’m making a note to go through the costume components and downweight many of them; quilting and padding wear out their welcome quickly, especially when they get applied to gloves and make it look like she’s wearing oven mitts.

🎶 🎶 🎶 🎶

I won’t count fingers and toes,

as long as you make pretty waifus,

Genai, you fool.

I won’t count fingers and toes,

but I want limbs on the correct sides,

and each type should have only two.

🎶 🎶 🎶 🎶

You can't spell ‘aieeeeeeee!’ without AI...

Dear Rally’s,

I pulled up to the drive-through and placed my order with your automated system (not that I had a choice, once I was in line).

J: Number 1 combo, please.

A: Would you like to upgrade that to a large for 40 cents? What’s your drink?

J: Yes. Coke Zero.

A: Does that complete your order?

J: No.

A: Okay, your total is $10.95.

J: I wasn’t done. Hello? Anybody there?

J gives up, pulls forward, pays, drives home; discovers it misheard every answer, giving me a medium combo with a regular Coke.

J scans QR code on receipt to give feedback, site never loads after repeated tries. Visits completely different URL on receipt, site never loads. Falls back to the “contact” form on the main web site, which, surprisingly, works.

Public deathmatch

I put the full genai-written project up on Github. Complete with the only Code Of Conduct I find acceptable.

I’ll probably create a grab-bag repo for the other little scripts I’m using for genai image stuff, including the ones I wrote myself, like a caveman.

(it’s been a while since I pushed anything to Github, and somehow my SSH key disappeared on their end, so I had to add it again)

Next genai coding project…

…is gallery-wall, another simple Python/Flask/JS app that lets you freely arrange a bunch of pictures on a virtual wall, using thumbnails embellished with frames and optional mats. It took quite a few passes to get drag-and-drop working correctly, and then I realized Windsurf had switched to a less-capable model than I used for the previous projects. Getting everything working took hours of back-and-forth, with at least one scolding in the middle where it went down a rat-hole insisting that there must be something caching an old version of the Javascript and CSS, when the root cause was incorrect z-ordering. This time even screenshots were only of limited use, and I had to bully it into completely ripping out the two modals and starting over from scratch. Which took several more tries.

Part of this is self-inflicted, since I’m insisting that all Javascript must be self-contained and not pulled in from Teh Interwebs. It’s not a “this wheel is better because I invented it” thing, it’s “I don’t need wheels that can transform into gears and work in combination with transaxles and run-flat tires but sometimes mine crypto on my laptop”. The current Javascript ecosystem is infested with malware and dependency hell, and I want no part of it.

Anyway, I’ll let it bake for a few days before releasing it.

(between Ikea, Michaels, and Amazon, I have lots of simple frames waiting to be filled with the output of my new photo printer; I had to shop around because most common matted frames do not fit the 2:3 aspect ratio used in full-frame sensors, and I don’t want to crop everything; I like the clean look of the Ikea LOMVIKEN frames, but they’re only available in 5x7, 8x8, 8x10, 12x16, and a few larger sizes I can’t print (RÖDALM is too deep, FISKBO is too cheap-looking))

Apparently OpenAI wants subscribers back…

…because they’re promising porn in ChatGPT. For “verified adults”, which probably means something more than “I have a credit card and can pay you”. Also, the “erotica” is still quite likely to be censored, with rules changing constantly as journolistos write “look what I got!” clickbait articles.

They’re also promising to re-enable touchy-feely personalities while pretending that’s not what caused all the “AIddiction” clickbait articles in the first place…

I’m guessing they’ll stick to chat at first, and not loosen the restrictions on image-generation at the same time.

(I’ll likely wait until Spring before giving them another chance; Altman is to Steve Jobs as Bob Guccione was to Hugh Hefner)

“Genai’s strange obsession was…”

“…for certain vegetables and fruits”. The expanded categorized wildcards have produced some violent color and style clashes, which I expected. I can clean up the colors by using variables in the premade costume recipes, but for the style clashes, I may throw the YAML back to Claude and tell it to split each category into “formal”, “casual”, “sporty”, “loungewear”, etc, to reduce the frequency of combat boots with cocktail dresses and fuzzy slippers with jeans.

I’ll need to hand-edit the color list, because while smoke, pearl, porcelain, oatmeal, stone, shadow, snow, rust, clover, terracotta, salmon, mustard, flamingo, bubblegum, brick, jade, olive, avocado, fern, and eggplant are valid color words, they are not safe in the hands of an over-literal diffusion-based image-generator.

Yes, I got literal “avocado shoes”.

For most of them I can probably get away with just appending “-colored”, but I’ll have to test. I’ll need to clean up the materials, too, since “duck cloth” isn’t the only one that produced unexpected results.
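A rough sketch of that cleanup, assuming it runs as a pass over the color list before the words reach the prompt. The set is the list of troublemakers above; the function name is made up, and whether “-colored” actually tames the image generator is exactly the part that still needs testing.

```python
# Color words that an over-literal image generator may render as objects.
RISKY_COLORS = {
    "smoke", "pearl", "porcelain", "oatmeal", "stone", "shadow", "snow",
    "rust", "clover", "terracotta", "salmon", "mustard", "flamingo",
    "bubblegum", "brick", "jade", "olive", "avocado", "fern", "eggplant",
}


def safe_color(word: str) -> str:
    """Append "-colored" to color words that double as nouns (hypothetical helper)."""
    if word.lower() in RISKY_COLORS:
        return f"{word}-colored"
    return word


print([safe_color(w) for w in ("avocado", "navy", "rust")])
```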

With the SF set, you can handwave away many of the fashion disasters by remembering the future fashions in the Seventies Buck Rogers TV series, but there were still some standouts…

I didn’t refine and upscale these; mostly I’m just poking fun at the results, although there are a few that deserve enhancement.

Fan-Service Rocks!, episode 9

I approve of Imari’s attempts to improve Nagi’s wardrobe. She looks good in anything, and fan-artists have gleefully expanded her range. This week, we bounce into a game of Opals For Oppai!

Interesting to see Ruri’s reaction to L’il Red Shoko choosing Imari as her preferred mentor. A small detail, but character-developing.

“How hard could it be?”

I sent my sister a sample screenshot from the work-in-progress gallery-wall app, and she asked if I could make it work for her, as she also has walls in need of galleries. Since she’s not a command-line kind of gal, the ideal solution would be for me to use something like py2app to bundle in all the dependencies so she can drag-and-drop a directory onto a self-contained app.

Turns out that’s quite hard, at least if you’re Windsurf & Claude Sonnet 4.5. So hard, in fact, that in the end I told it to back out to the last commit before we started, and it gleefully did a git hard reset that erased all traces of its 90 minutes of failure. The app packaging went fine after a few tries; it just couldn’t implement drag-and-drop or manage to quit cleanly. And the best part is that it learned nothing, and will make the exact same mistakes again tomorrow. Confidently, with exclamation points.

Pro tip: when your GUI app logs a line that says “run pkill to exit cleanly”, you have failed. Also, don’t gaslight the user by claiming that typing a directory name on the command line “is a better Mac-native solution than drag-and-drop”.

Breaking out the stone knives and bearskins, the simplest approach seems to be a three-line change to add a native-app wrapper with pywebview. py2app still blows chunks if you enable drag-and-drop, but at least it bundles up all the dependencies. The workaround is to use Automator to create another app that just launches the real one from a shell script with --args "$@", which is conceptually disgusting but functional.

(I had ChatGPT create an icon for the app (which takes a while when you don’t pay them $20/month…); it does not feature Our Mighty Tsuntail)

Fan-Service Rocks!, episode 10

“In that moment, Imari grew up.”

This week, Imari flies solo. More precisely, Nagi can’t join the Let’s Explore A Manganese Mine expedition, so Our Big Little Bookworm’s insecurities come to the fore as she takes responsibility for the girls in territory none of them have visited before. Shoko is adorably confident in her chosen mentor, but Ruri gets a wee bit too snarky when deprived of her idol.

A tunnel collapse keeps them from reaching their destination, and that’s when a well-researched novel comes to the bookworm’s rescue. All’s well that ends well, and while Imari isn’t ready to fill Nagi’s… “shoes”, she doesn’t disappoint.

More GenAI Faprication

🎶 🎶 🎶 🎶

And I would gen 500 wives,

and I would gen 500 more.

Then quickly deathmatch through those thousand wives,

and blog the ones ranked 4.

🎶 🎶 🎶 🎶

This time around, we have a Qwen LoRA that actually works. Most of the ones I’ve tried have either changed nothing detectable or were overtrained to the point of making everything worse. Our new friend is Experimental-Qwen-NSFW, which has a very strong anime bias, and adds a touch of naughty even to prompts that don’t push its buttons. Also elf-ears and the occasional tail.

I used the revised-and-expanded retro-sf location & costume prompts, the new physical-expression-based moods, and the rest was unchanged. The LoRA exaggerated the poses and facial expressions, and despite its flaws (strange fingers, extra limbs, knock-knees, and “poorly-set broken bones” being quite common), it livened things up nicely. It even added some Moon diversity.

Downside: the refine&upscale pass had a tendency to magnify the LoRA’s flaws. In one case, it took two perfectly normal hands and added extra fingers in odd positions. In another, it changed the poses of the men in the background to match the gal’s sexy walk, which just looks goofy. About a dozen of them were made objectively worse, and another half-dozen had changes I didn’t care for, even if you wouldn’t know unless you saw the original. This is using the commonly-recommended 4xUltrasharpV10 upscaler, but I get the same sort of changes with others.

I briefly flirted with a tool for converting the metadata to a CivitAI-compatible format for uploading there, but the author silently changed its defaults to overwrite your saved originals, destroying the original metadata that lets you reload the exact settings in SwarmUI and refine/upscale. Fortunately I tested it on only one image. It also prefers to destructively modify an entire directory at once, so, yeah, not linking to that tool’s repo. It’s a simple JSON massage to the EXIF data, so I’ll just roll my own at some point. Or have Claude do it.

Speaking of Claude, I gave up on using their suggested devcontainer approach in VS Code (which I didn’t really want to use anyway), and installed the node-based Claude Code CLI inside of a VMware virtual machine running Ubuntu 25. Code is rsynced to a shared folder to make it available inside the VM, so it can’t see anything else and can only operate on copies.

(these are the ones where the defects aren’t so bad I have to re-gen them with a variation seed; some that I almost included turned out to have mottled skin tones, mostly on the legs, and I like my waifuskin like I like my peanut butter: smooth and creamy; also, without nuts)

This week, I'm feeling Uber

Usually I pick up my niece after school one day a week, to help out with my brother’s schedule. This week they’re extra-busy dealing with nephew’s issues, so I’m picking her up at school, dropping her off at after-school sports, and then picking her up a few hours later.

The sports facility in question is shared with the University of Dayton’s teams. Which means that I get to see healthy college girls in sportswear while I wait to pick her up.

“No, no, I don’t mind showing up early to get a good parking spot.”

Today He Learned…

“Always mount a scratch monkey.” (classical reference, versus what really happened)

Reddit post on r/ClaudeAI, in which a user discovers that blindly letting GenAI run commands will quickly lead to disaster:

Claude: “I should have warned you and asked if you wanted to backup the data first before deleting the volume. That was a significant oversight on my part.”

(and that’s why I isolated Claude Code in a disposable virtual linux machine that only has access to copies of source trees, that get pushed to a server from outside the virtual; if I ever have it write something that talks to a database other than SQLite, that will be running in another disposable virtual machine)

Fapper’s Progress…

(that’s “FAbricated PinuP craftER”, of course)

TL/DR: I switched to tiled refining to get upscaled pics that look more like the ones I selected out of the big batches. I also used a different upscaler and left the step count at the original 37, because at higher steps, the upscaler and the LoRA interacted badly, creating mottled skin tones (some of which can be seen in the previously-posted set). As a bonus, total time to refine/upscale dropped from 10 minutes to 6.

SwarmUI only comes with a few usable upscalers, but it turns out there are a lot of them out there, both general-purpose and specialty, and side-by-side testing suggested that 8x_NMKD-Superscale was the best for my purposes. The various “4x” ones I had used successfully before were magnifying flaws in this LoRA.

(note that many upscalers are distributed as .pth files, which may contain arbitrary Python code; most communities have switched to distributing as .safetensors or .gguf, so if you download a scaler, do so from a reputable source)

Some of the seemingly-random changes come simply from increasing the step count. Higher step counts typically produce more detailed images, but not only are there diminishing returns, there’s always the chance it will randomly veer off in a new direction. A picture that looks good at 10 steps will usually look better at 20 or 30, but pushing it to 60 might replace the things that you originally liked.

For instance, here’s a looped slideshow of the same gal at odd step counts ranging from 5 to 99. I picked her based on how she looked at 37 steps (with multiple chains and a heart-shaped cutout over her stomach), but you can see that while some things are pretty stable, it never completely settles down. I’m hard-pressed to say which one is objectively the best step count to use, which is problematic when generating large batches.

Skipping the refining step completely produces terrible upscaled images, but the higher the percentage that the refiner gets, the less the LoRA’s style is preserved. The solution to that problem appears to be turning on “Refiner Do Tiling”, which means rendering the upscaled version in overlapping chunks and compositing them together. My first test of this at 60% preserved the style and added amazing detail to the outfit, without changing her face or pose. It added an extra joint to one of her knees, but lowering the refiner percentage back to 40% fixed that.

More tinkering soon. Something I haven’t tried yet is using a different model for the refining steps. A lot of people suggest creating the base image with Qwen to use its reliable posing and composition, and then refining with another high-end model to add diversity. This is guaranteed to produce significant changes in the final output, possibly removing what I liked in the first place. Swapping models in and out of VRAM is also likely to slow things down, potentially a lot. Worth a shot, though; SwarmUI is smart enough to partially offload models into system RAM, so it may not need to do complete swaps between base model and refiner.

Rescue Kittens

I rejected the original refine/upscale for this one because she grew extra fingers. She’s still got an extra on her right hand, but it’s not as visible as the left.

Fan-Service Rocks!, episode 11

Imari takes the lead again, with Nagi off to a conference in America until the end of the episode. Growing into the mentor role, Imari gives Our Girls another way to look at rocks, and a field test inspires Ruri to begin thinking like a scientist, leading the team to search for supporting evidence on the sapphire’s origins. Which they find, tying in the local mythology again.

By the way, I happened to catch the name of the school, 前芝 = Maeshiba = “front lawn”. It doesn’t appear to be a real place, unlike some of the other locations used in the show, which are scattered across Japan in ways unreachable as day-trips in Nagi’s car.

(the real name of the school should be Waifuhaven College…)

Dear Slashdot summarizer…

I think you should reconsider your use of the word “despite” here:

The heightened scrutiny comes as Microsoft prioritizes investment in generative AI while overseeing a gaming division that has struggled despite spending $76.5 billion on acquisitions.

Related,

The unholy love child of Clippy and Bob has arrived. Mico the animated Copilot avatar will be turned on by default.

This bubble can’t burst fast enough.

Also related,

New study: AI chatbots systematically violate mental health ethics standards

Whipped cream and other ~~delights~~ magical girls

Taking over the world, one desk at a time, Baiser/Leoparde style.

Stick a pin-up in it, it’s done

While the previous LoRA met the goal of adding a bit of flavor to the cheesecake, it’s not stable yet, doesn’t play well with others, and its nude side is overtrained on fake boobs. SNOFS has better variety there, but really wants to go hardcore, which is not what I’m looking for in cheesecake wallpaper. Enter Pin-up Girl, which captures the classic pin-up aesthetic and doesn’t turn pretty girls into melty mutants when combined with half-strength SNOFS.

The results were encouraging, and the refine/upscale process only introduced relatively minor flaws (changed facial expressions, some really distorted background items, etc.), so I didn’t have to reject a bunch and try to remake them:

Fan-Service Rocks!, episode 12

It has been at least 45 years since I last thought about crystal radios. Mine was a kit, probably from Radio Shack because there was still such a place, and it didn’t hold my interest long. If only I’d known the attraction it held for rock-junkies and their busty allies, things could have been different.

Anyway, Ruri finds her grandfather’s homemade set (while looking for his rocks, of course), has no idea what it is, and since there were crystals inside the box, heads off to Nagi to find out what’s what. Nagi and Imari are too busy to give the girls more than a quick lecture and a shopping list, but Busty Gal Pal Aoi gets pressed into (and for) service and ends up enjoying the adventure.

Frustration over the weak signal leads them to seek higher ground, and without realizing it, they end up at the same place grandpa tested it originally, a local shrine. The story’s all about connections, and in the end, the priest turns out to be connected, too.

And then Imari gets lucky, playing a classic trope straight to set up the next (and last) episode.

Future Waifu Society

I genned a batch of retro-sf cheesecake Thursday with the Pin-up Girl and SNOFS LoRAs loaded (the latter at 50% strength), and… forgot to trigger them. Both have some influence on the output even without trigger words, but the net result was that the majority of the pictures were pretty much the same as the last batch of retro-sf gals, so I had to skew my deathmatch toward the ones that picked up at least a little of the pin-up aesthetic. Then I kicked off another batch on Friday with a new set of corrected prompts. Results of both sets below.

Next up? I had Claude tear apart the over-familiar SF location prompts and put them back together broken down by category and reassembled in a bunch of different patterns. The faster/cheaper Haiku 4.5 model didn’t manage to put 50 unique elements into each category, but it got at least halfway there before it started repeating itself. Combinatorially speaking, it’s capable of at least 10,000 unique locations, but I won’t know if they’ll be interesting until I generate some pics.
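For a rough sense of where a number like that comes from, here's a back-of-the-envelope sketch. The category names and the count of 25 options apiece are placeholders I made up (the real YAML has its own categories and sizes), and it ignores the extra multiplier from having several sentence patterns.

```python
from math import prod


def count_locations(categories: dict[str, list[str]]) -> int:
    """Number of distinct prompts when one option is drawn from each category."""
    return prod(len(options) for options in categories.values())


# Hypothetical stand-in data: three categories with 25 placeholder options each.
categories = {
    name: [f"{name} {i}" for i in range(25)]
    for name in ("setting", "architecture", "lighting")
}

print(count_locations(categories))
```

Even with every category only half-filled, three of them multiplied together already clear the 10,000 mark.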

Also, the next batch should have fewer fashion and facial disasters, since I set the weights for feathers, spikes, and tongues to be much, much lower. Qwen doesn’t know what “feathered hair” or “spiked hair” mean, and tends to go a little overboard when those adjectives are used anywhere. And mentioning a tongue guarantees an exaggerated expression where it’s stuck out at the viewer, and usually not in a sexy way. Still running about 4% limb disasters, though, and that’s being generous.

First batch

The refine/upscale process is working pretty well now, with one small problem: toes. They were fine before upscaling, sigh, so don’t count them or point out that the big toe (if any) is on the wrong side. Sigh.

Fan-Service Rocks!, fin

Best. Lecture. Ever. This episode is clearly the source of the “PG” promise of nudity, despite steam and chibification keeping things squeaky clean. And I’m confident the steam was not a buy-the-Bluray tease; it’s not that kind of show.

Anyway, along with limestone deposits, we get a discussion of future plans, and to the surprise of absolutely nobody, Nagi wants to teach, Shoko wants to become Yoko, and Ruri stumbles on her answer with a little help from her friends. The series closes with a montage of the near future, and a glimpse at Ruri’s suspiciously-familiar adult form.

Corn, popped

Literally for once. I hadn’t planned on making caramel corn Sunday night, but out of nowhere, my mother asked me how much unpopped corn you need to make 8 quarts of popcorn. She’d found a recipe for caramel corn somewhere, and it assumed you knew the conversion ratio. I ended up at popcorn.org, which says 2 TBSP of kernels for a quart of popped corn.

They also had a smaller, allegedly easier recipe that only required 5 quarts of the white stuff.

It somehow ended up being my job to run the air popper and follow the recipe, and the only difficulty was that the premium popcorn they’d bought produced significantly more volume than expected. 8 TBSP of kernels would have been more than enough.

I suspect I’ll also be drafted into making the next batch, this time with nuts.

Reminder: I am not actually this fat or this bald, I have much better trigger discipline, and I’m left-handed. Qwen is, shall we say, “not good” at guns, and definitely has a problem with the concept of holsters:

(I didn’t even ask to be holding the gun, just “holstered at his left hip”)

Diablo 4, season 10

I generally start new seasons with a Necromancer minion build, because being escorted by a pack of skellies does a good job of keeping you from getting overwhelmed at low levels as you acquire not-entirely-crappy gear and start to build up cash, materials, and abilities. Once you get some rare drops, it becomes an easy way to reach Torment 4 (Hand of Naz unique gloves, Nagu and Ceh runes, and Aspect of Occult Dominion cast on a helmet give you 14 skeletal mages and six spirit wolves to do your killing).

I’m currently farming in T3 because it’s faster, trying to get an Ophidian Iris for my incinerate/hydra sorcerer. I also thought I’d need some uniques to build up a whirlwind barbarian, but they’re letting you powerlevel alts through the seasonal content again, so I unlocked Deafening Chorus at level 30, and I already had an item enchanted with Aspect of Fierce Winds (DC = shouts are always active at +50%, AoFW = activating a shout creates 3 dust devils). The Neo and Ceh runes are another way of creating a pack of spirit wolves, so damage just kinda happens while you run around. And you’re also berserk and unstoppable at all times, with increased damage reduction and speed, making farming less of a chore.

(this is approximately 10% as chaotic as actually playing this build)

Reminder: X hallucinates your “interests”

If your feed seems skewed, it’s time to go in and uncheck the auto-generated horseshit “interests”. This week, mine was:

#2i2, ABC News, Abema TV, Action, Action & adventure books, Adam Schefter, Ado, Adventure, Age of Empires, Air travel, Alien, Andy Dalton, Animated works, Anthem, At home, Australia national news, B’z, Bad Bunny, Big 10 football, Biology, Blade Runner, Blu-ray, Board games, Borderlands, Breaking Bad, Breaking Bad, Breaking Bad, Breitbart News, Brit Hume, Buckingham Palace, Byron York, CBS, California, Careers, Climate change in the United States, Coaches, College Football, College Football, College Football 2023-2024, Colombia political figures, Colombia politics, Comic works, Construction, Cooperative games, Cracker Barrel, Cygames, Damon Jones, Data centers, Dating Apps, David Fincher, Dolly Parton, Dune, Elizabeth MacDonald, Eric Trump, Europe, Family films, Famous comedians, Folk music, Free-to-play games, George Clooney, George Soros, Glenn Beck, Greta Thunberg, Grindr, Gulf News, Hard rock, Home improvement, Homeschooling, Human resources, IPOs, J-pop, JB Pritzker, Jake Tapper, Jen Psaki, Jimmy Kimmel, Jimmy Kimmel Live, Jimmy Kimmel Live, Joy Reid, Kaori Maeda, Katie Pavlich, Keira Knightley, King Charles, Larry Elder, Late night talk, Latin music, Latin pop, LeBron James, Legal drama, Letitia James, Live: College Football, Manga series, Megan McArdle, Merrick Garland, Meta, Monster Hunter, NASA, NFL Football, NPR, Nate Cohn, Navy Midshipmen, Neuroscience, Nintendo, Nintendo Switch, Nursing & nurses, Nyheim Hines, Olympic Canoeing, Outerwear, PGA Tour, Partner Track, Patricia Heaton, Paul Bettany, Paul Sperry, Persona, Pfizer, Phil Mickelson, Plastic models, PlayerUnknown’s Battlegrounds, Popcorn, Professions, R&B and soul, Razer, Reggaeton, Reuters, Rie Takahashi, Ryan Saavedra, SKE48, SPY×FAMILY, School festivals, Sculpting, Smartmatic, Snack Food, Soul music, Sports, Spy × Family, Starbucks, Stefan Kuntz, Stephen King, Steve Jobs, Stevie Wonder, Sydney Sweeney, Target, Ted Nugent, Texas, The 60s, The Independent, Threads (Meta), Trap, Twilight Saga, USA Today, Upper body fitness, Venezuela political figures, Venezuela politics, Verizon, Voice actors, Voting Machines - Government/Education, Warren Kenneth Paxton, Water sports, Wells Fargo, Whataburger, Wine, Writing, Yahoo News, Young Magazine, Zenless Zone Zero, Zerohedge, Zombie Land Saga, Zoology, college_football_2023, ゾンビランドサガ, 異世界かるてっと

Not only have I never engaged with any tweet on most of these subjects, most aren’t even things that someone I follow would make fun of. And for the few that were memed by someone, that context should be taken into account. Pointing and laughing at something doesn’t mean you want to see more of it.

The only nice thing I can say about this list is that it’s just garbage, not explicitly hard-Left garbage.

Random randoms of randomness

Someone on the SwarmUI Discord posted a complex prompt that uses the app’s native randomizing syntax to create a wide variety of people portraits with diverse (both meanings) faces. It’s like a Perl one-liner had sex with a MadLibs book:

(analog photography.:2) in <random:an indoor|an outdoor|a studio> setting.

<random:<setvar[gender,false]:man><setvar[pronoun_n,false]:he><setvar[pronoun_p,false]:his>|<setvar[gender,false]:woman><setvar[pronoun_n,false]:she><setvar[pronoun_p,false]:her>|<setvar[gender,false]:person><setvar[pronoun_n,false]:they><setvar[pronoun_p,false]:their>>the <var[gender]> is in <var[pronoun_p]> <random:early|late|> (<random:teen years|twenties|thirties|forties|fifties|sixties|seventies|eighties>:2)..

<var[pronoun_n]> has a distinctive <random:oval|round|square|heart-shaped|long|oblong|diamond|triangular> face, looking <random:pleasant with a gentle smile|energetic with a broad smile and a cheerful grin|radiant, beaming with joy and sparkled eyes|amused, with a slight smirk and a twinkle in the eye|contended, in a peaceful and serene expression|playful, with a mischievous glint, hinting at fun|warm and welcoming|hopeful and optimistic|blissful, lost in happy thoughts|thoughful, deeply pensive|curious, eyes wide with interest and head tilted on a side|observant and scrutiny|introspective, lost in reflection|calm and peaceful|serene, untroubled|focused, intently concentrating|weary and exhausted|melancholic and wistful|pensive with a hint of sadness|resigned in acceptance|wistful, longing for the past|anxious with worry in the eyes|reserved, keeping it formal|overjoyed in exhuberant happiness|very sad in a genuine sorrow with tears|angry, bursting in rage|surprised, startled with wide-eyed wonder|fearful, apprehensive and scared|determined with strong will and resolve|intense, experiencing a deep feeling|smiling sadly|thoughtfully amused|weary but determined|curiously spektikal and doubtful|serenely hopeful>.

<var[pronoun_n]> has <random:porcelain|ivory|rosy-fair|pale golden|peach|golden|olive|light tan|tan|bronze|caramel|warm brown|cool brown|chocolat|deep bronze|ebony|rich dark brown|honeyed|copper|russet> <random:flawless|velvety|polished|dimpled|porous|weathered|sun-kissed|bumpy|freckled|densely freckled> <random:skin with <random:small,large, prominent> moles|skin with <random:faint, noticeable, prominent> (scars:<random:1,2,3,4,5>).|skin>, <random:pixie cut|buzzcut|chin-length|jawline-length|shoulder-length|collarbone-length|mid-back length|waist-length|long|classic bob|layered bob> <random:jet black|raven black|soft black|chocalate brown|dark mahogany|chestnut brown|medium brown|light brown|ash brown|auburn|platinum blonde|golden blonde|honey blonde|strawberry blonde|ash blonde|dirty blonde|fiery red|ginger red|burgundy|silver gray|charcoal grey|snow white|salt-and-pepper|bronze|mahogany|russet|ombre|blue> hair with a <random:high hairline|low hairline| widow's peak|straight hairline>; <random:high|medium|low> and <random:wide|narrow|average> <random:sloping|straight|with prominent brow bone> forehead.

<var[pronoun_p]> <random:sparse|dense|regular|asymmetrical> eyebrows are <random:thin|medium|thick|bushy> and <random:arched|straight|angled upward|angled downward|rounded|curved>, <random:matching hair color|darker than hair|lighter than hair>, <random:almond-shaped|round|upturned|downturned|hooded|monolid|> <random:large|medium|small> eyes show a beautiful <random:sky blue|deep sapphire blue|grey-blue|turquoise|emerald green|olive green|hazel green|dark chocolate brown|light hazel brown|golden brown|mahogany brown|mixture of brown, green, and gold|silver grey|slate grey> hue<random:|, deep-set into <var[pronoun_p]> face|, protruding forward>, <random:short|medium|long> <random:sparse||thick> <random:straight|naturally curled|heavily curled with mascara> eyelashes.

<var[pronoun_n]> sports a <random:large|medium|small|pointed> <random:straight nose, with a classic and balanced profile|roman nose with a prominent bridge and a hump|greek nose, with a straight bridge and refined tip|snub nose, upturned and delicate|aquiline nose, hooked and curved downwards|button nose, nicely rounded|hawk-like nose, strong and prominent|wide nose, broad at the nostrils|narrow nose with a thin bridge|long nose, extended length from brow to tip>, <var[pronoun_p]> cheeks are <random:rosy, naturally flushed|dimpled with cute indentations|freckled|sun-kissed|sculpted sharp|softly curved>. <var[pronoun_n]> has <random:large|medium|small> <random:full, voluminous|thin, delicate|wide, spreading across <var[pronoun_p]> face|distinctive bow-shaped|softly curved round|down-turned drooping|> <random:lips.|lips with a defined cupid's bow.|lips with a prominent lower lips.|lips with a prominent upper lips.> <var[pronoun_p]> chin is <random:large|medium|small|strong|weak> and <random:rounded|square|pointed|cleft|receding|prominent|doubled, with fullness under the chin>, <var[pronoun_n]> has a <random:strong|soft|square|rounded|well defined|soft> and <random:wide|narrow> jawline, completing <var[pronoun_p]> face.

<var[pronoun_p]> build is <random:slender|lean|muscular|stocky|robust|petite|large-framed>, <var[pronoun_n]> stands in an <random:upright|slouching|stooped|relaxed|casual|tense|stiff> posture, showcasing <var[pronoun_p]> <random:broad|wide|narrow|sloping|rounded|square> shoulders and <random:full|flat|narrow> chest. <var[pronoun_p]> arms are <random:long|short|muscular|toned|slender|lean|strong|robust>, completed with <random:delicate|rough|small|large> hands.

<var[pronoun_n]> wears a <random:formal|casual|loose|fitted|tight> <random:cotton|silk|wool|denim|leather> <random:shirt|blouse|t-shirt|sweater|jacket> contrasting with <var[pronoun_p]> hair.

…and that’s why I converted it to the dynamicprompts YAML format, which revealed all sorts of typos and awkward phrasing. I added weighting to improve realism (completely random choices produce a lot of unusable crap), cleaned up the option lists, and added a new one to push Qwen Image away from its default faces.

My version is here, in my new GitHub repo for random genai-related stuff. The Python script I use as a wrapper for the dynamicprompts library is in there, too.
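For anyone who hasn't played with weighted wildcards, here is a rough standard-library sketch of the idea. It is not the wrapper script from the repo and does not use dynamicprompts at all. It expands the `<var[...]>` and `<random:...>` markers from the template quoted above, and the `weight::text` notation, `parse_option`, and `expand` are all invented for this illustration. Options without a weight are assumed to count as 1.

```python
import random
import re

# Patterns for the two marker types seen in the quoted template.
VAR = re.compile(r"<var\[(\w+)\]>")
RANDOM = re.compile(r"<random:([^<>]*)>")

def parse_option(option):
    """Split a hypothetical 'weight::text' option; unweighted options get 1.0."""
    weight, sep, text = option.partition("::")
    if sep:
        try:
            return float(weight), text
        except ValueError:
            pass  # the prefix was not a number, so treat the whole thing as text
    return 1.0, option

def expand(template, variables, rng=None):
    """Fill in variables first, then make one weighted pick per <random:...>."""
    rng = rng or random.Random()
    # Variables can appear inside a <random:...> option, so substitute them first.
    text = VAR.sub(lambda m: variables[m.group(1)], template)

    def pick(match):
        parsed = [parse_option(o) for o in match.group(1).split("|")]
        weights = [w for w, _ in parsed]
        texts = [t for _, t in parsed]
        return rng.choices(texts, weights=weights, k=1)[0]

    return RANDOM.sub(pick, text)

if __name__ == "__main__":
    template = ("<var[pronoun_p]> build is <random:4::slender|3::lean|1::stocky>, "
                "with <random:delicate|rough> hands.")
    print(expand(template, {"pronoun_p": "Her"}))
```

With weights like these, "slender" should turn up roughly four times as often as "stocky", which is the sort of nudge that keeps completely random picks from piling up unusable combinations.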

You know it’s time for a new theme when…

…you weed 500 images down to 162 and say, “forget the toes, maybe I’ll just use them all as wallpaper”. I’m not going to blog that many, though, so I deathmatched it down to 18 based on the newly revised-and-randomized sfnal locations.

I started with ~900 retro-sf locations generated by several different online and offline LLMs, fed them all to Claude to categorize and create sentence patterns, and then threw 500 imaginary pretty girls on top. Many of the results were visually incoherent, and quite a few had nothing about them that even hinted at SF, retro or otherwise, but most met the goal of being lively and colorful without drawing attention away from the girl.

No refine&upscale for this batch; it’ll run overnight for the full set of 162, so I probably won’t kick it off today.

Cheesecake: no Artificial Ingredients

Done with Ruri’s adventures, I dug into my pre-Covid, pre-GenAI cheesecake archives, and deathmatched the gals I downloaded in May 2019.

There is a lot of “Ai” in this set, but it’s autocompleted with “Shinozaki”, which is healthy and natural and good for growing boys.

Gordon's Alive!

…and setting his clock back an hour this weekend. I had a helluva time getting Qwen Image anywhere near Brian Blessed’s Vultan costume from Flash Gordon, which I wanted so I could dress up my not-entirely-accurate avatar for Halloween.

Claude started out with a spectacularly bad attempt to describe the costume:

A regal, imposing hawkman warrior in elaborate gold and bronze metallic armor. Muscular figure with a broad-shouldered silhouette, wearing a gold lamé bodysuit beneath ornate segmented breastplate. Massive wing-like shoulder pauldrons with feather-segmented design in graduated shades of bronze, copper, and gold. Large articulated mechanical wings extend from the back with an Art Deco aesthetic. Distinctive gold helmet with a pronounced beak-like visor suggesting an eagle’s head, featuring swept-back feather-crest elements. Deep purples and burgundy accent details throughout. Wielding an ornate energy mace or staff with a spherical glowing head, metallic handle matching the armor coloring. 1980s science fiction aesthetic blended with art deco design, theatrical and operatic in scale, with an antiqued metallic finish rather than pure polish. Character from the 1980 Flash Gordon film.

When called on it, it “researched” the correct costume and at least got into the general ballpark, but still without getting a single component correct. I couldn’t find a decent high-resolution still to feed in as input, so I just hacked at the prompt until it looked like it was kitbashed from Thor cosplay leftovers.

“Trunk or treat” is an abomination

(You can’t change my mind)

Number of kids who came to my door? Fifteen.

Bags of candy left over? Fewer than fifteen. I figure my niece’s high school is going to need some donations.

Oddest thing was that a third of the kids showed up without bags for candy (the pros had pillowcases, which I respected). They were in costume, but they expected to receive one or two pieces and hand them off to an adult waiting at the curb. This doesn’t work at my house, where a double handful with my hands isn’t going to fit in theirs. Fortunately I’d been to the grocery and had half a dozen plastic bags to give away.

(“this will look terrific on Arato-senpai!”)

Gals on the right

I decided to take my retro-sf wildcards and use them to generate wide-format wallpaper for my gaming PC, which has for several years been using a photo of a penguin appearing to operate a DSLR camera (I think it came from a Bing wallpaper rotation).

It wasn’t obvious when I was generating tall images, but Qwen Image has a strong bias toward putting the subject dead center. You can tell it to put her on the right side of the image, but explicit instructions to “place the main subject on the left side of the image” are almost always ignored, if not reversed. Compositionally speaking, this is kind of frustrating. It’s possible, just quite difficult to arrange in the prompt.

Of course, this is the same model that thinks freckles are the size and color of pennies, and “faint scars” should be rendered as deep gaping wounds. Seriously, what was Alibaba using for training data, medical-school cadavers?

Competition!

For the past few years, providers have been promising high-speed fiber Internet service in my area. Cincinnati-based AltaFiber seemed to be expanding rapidly, then went quiet. For the past few weeks, though, there’s been major digging going on along the main street nearest my house, and yesterday I spotted little flags and paint markers in the utility easement at the edge of my back yard. Sure enough, Friday afternoon some big equipment arrived and spent the afternoon pulling cable from one end of the street to the other, accompanied by little door cards announcing the imminent arrival of AT&T fiber.

Since it’s not available yet, they won’t give me the details of the package, but that’s okay, I don’t actually want it. What I want, and had wanted from Alta, was leverage to use in a call to my current provider. They offer new customers more speed for less money than the package I’m paying for.