Nothing about current events

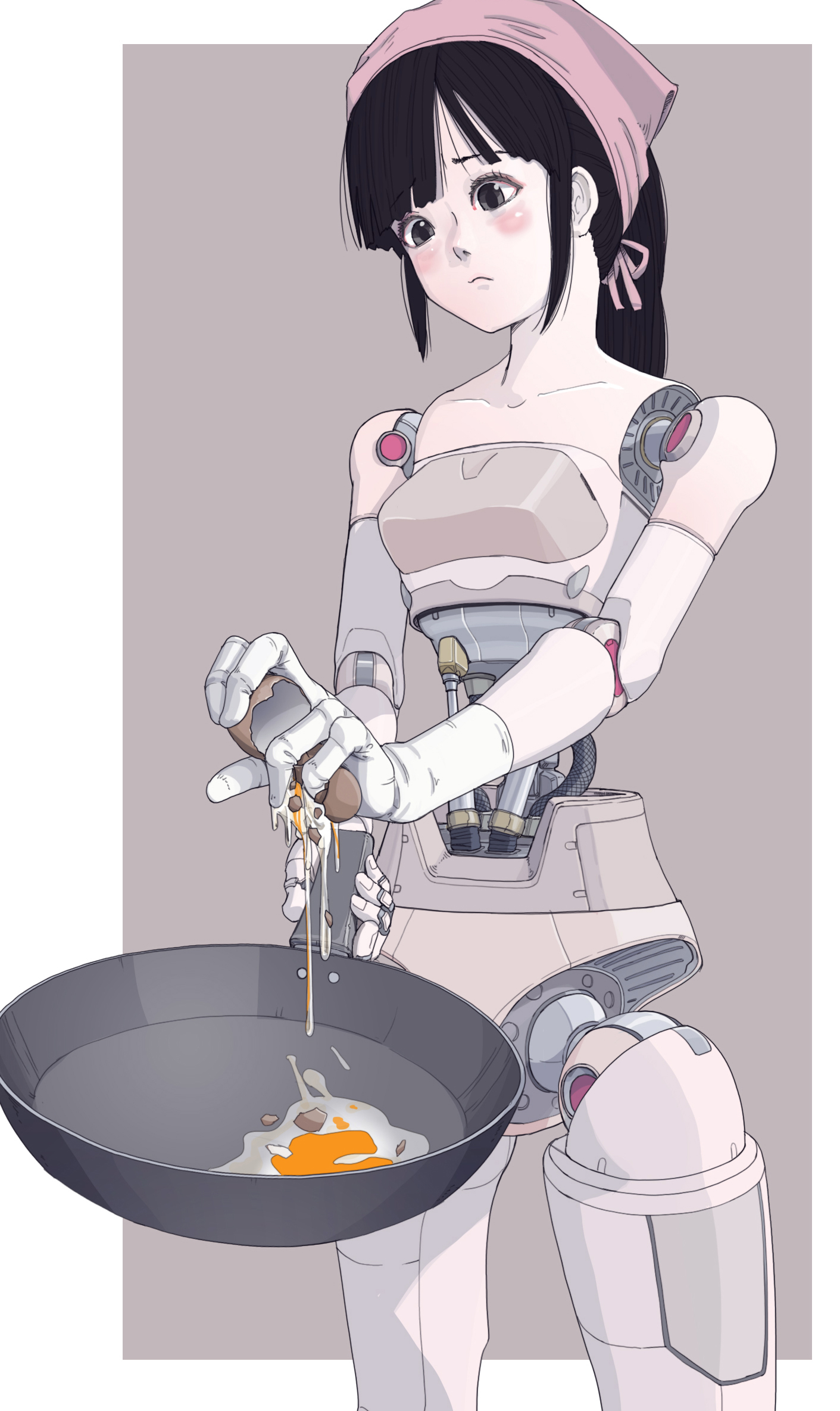

I’d rather find a second waifu than write about evil right now…

(I found her in a bulk-wildcard-testing run where I set it to use the

default sampler/scheduler, CFG=3.5, Steps=37, which took 1.5

minutes/image; cranking the steps up to 60 and using Heun++2/Beta

improved quality at the cost of taking 6.75 minutes; refining it at

40% and upscaling it by 2.25x with

4xNomosUniDAT_bokeh_jpg

eliminated the artifacts and significantly improved detail, but took

25.75

minutes.

Worth it?)

(trying to do a 4x upscale ran out of memory after 45 minutes…)

Fiverr lays off 30% of employees…

…to focus on AI. Meanwhile, Fiverr freelancers are making a bundle cleaning up after AI.

🎶 🎶 It’s the cirrrrrrcle of liiiiiiife 🎶 🎶

Wow, Your Forma was worse than I thought…

Some reviewers made a fuss about the chemistry between the two leads being due to their voice actors being married in real life. Not any more.

Maybe she wasn’t willing to do the Sylphy voice in bed… 😁

(official art from the light novels, where she’s a busty little chibi; I’ve already used up all the fan-art, and I didn’t have any luck with the LoRAs)

Now that’s a knife dock!

OWC is selling a Thunderbolt 5 dock with 3x USB-A 3.2 ports, 1x USB-C 3.2 port, 4x Thunderbolt 5 ports (1 used for upstream), 1x 2.5Gb Ethernet port, and 2x 10Gb Ethernet ports with link aggregation support. For those who need their Mac Mini to have multiple 8K monitors and serious NAS bandwidth.

It’d be kind of wasted on me, so I’m thinking I’ll settle for the CalDigit Thunderbolt 4 dock: 4x Thunderbolt 4 (1 upstream), 4x USB-A 3.2.

Out of context

“Reasoning” models tend to do better at prompts like “give me a list

of N unique action poses”, except when they dive up their own assholes

and start generating reams of text about the so-called thinking

process they’re following faking. I’ve had them spend 10+ minutes

filling up all available context with busywork, without ever returning

a single result. If they do return something, the list is often

non-unique or cut off well before N.

But the context is the real problem, because if you only got N/2 results, you’d like to coax it to finish the job, but there’s a good chance that the “thinking” has consumed most of the context, and most models do not support purging context in the middle of generating a response.

It would also be useful to copy the setup of a known working chat and generate a completely different kind of list. I definitely don’t need the context of a search for poses and lighting setups when I ask for festive holiday lingerie ideas.

You can’t just reload the model with larger context and continue the chat. You can’t fork the chat into a new one; that preserves the context, too. What you want is to wipe all answers and context and preserve the settings and your prompts, so you don’t have to set up the chat again. In LM Studio, this functionality doesn’t seem to exist.

So I wrote a quick Perl script to load the JSON conversation history and remove everything but your prompts.

#!/usr/bin/env perl

use 5.020;

use strict;

use warnings;

use JSON;

open(my $In, $ARGV[0]);

my $json = join(' ', <$In>);

close($In);

my $conv = decode_json($json);

# remove token count

$conv->{tokenCount} = 0;

# rename

$conv->{name} = "SCRUBBED " . defined $conv->{name} ? $conv->{name} : "";

# remove all messages not from user.

my $out = [];

foreach my $msg (@{$conv->{messages}}) {

push(@$out, $msg) if $msg->{versions}->[0]->{role} eq "user";

}

$conv->{messages} = $out;

print encode_json($conv);

This dumps a fresh JSON conversation file to STDOUT, which can be

given any filename and dropped into ~/.lmstudio/conversations.

Why Perl? Because I could write it faster than I could explain the specs to a coding LLM, and I didn’t need multiple debugging passes.

(BTW, 32K context seems to be a comfortable margin for a 100-element list; 16K can be a bit tight)

Digits and Shadows

It’s kind of amazing how the “state of the art” keeps advancing in AI without fixing any of the known problems. LLMs hallucinate because they must, and image-diffusion engines can’t count fingers or recognize anatomical impossibilities because they don’t use anatomy. All the alleged coherence you hear about in AI output is either sheer accident or aggressive post-processing.

Let’s examine a few types of Qwen Image failures:

“Thumbs, how do they work?”

Trying to put your own hand into a position seen in a generated image can be awkward, painful, or simply impossible if it’s on the wrong side.

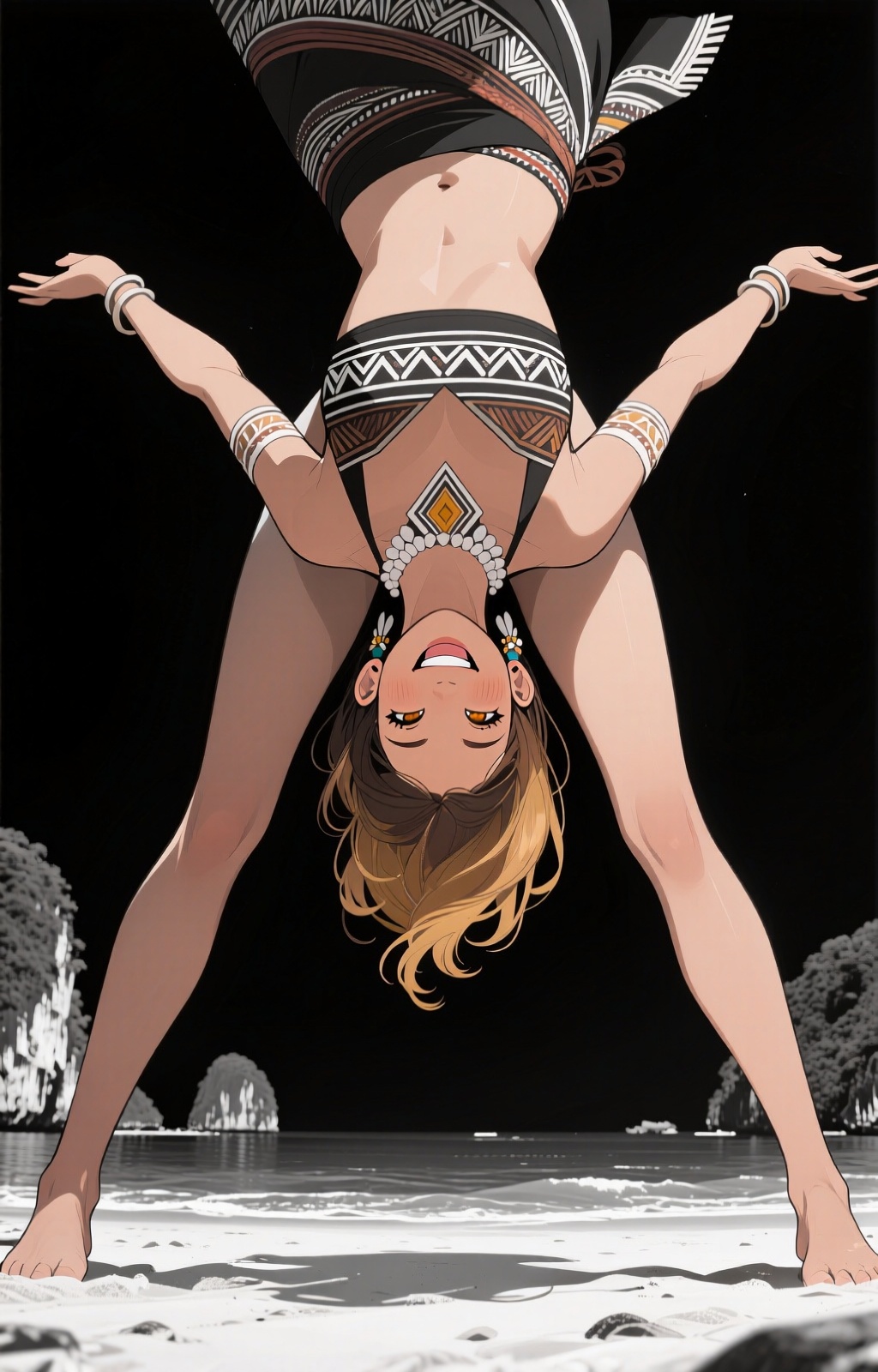

“Toes Over Hoes”

Qwen really, really hates inverted and mid-air poses. They’re statistically unlikely, so at every step, the diffusion process has a high chance of adding legs that touch the ground. Every step you add to the generation process increases the chance that a faint hint of shadow will be transformed into a second pair of legs.

…or a second pair of arms…

“Got a leg, leave a leg; need a leg, take a leg”

Extra limbs are usually obvious, but what about the missing ones?

“Shadow by a shadow be exposed”

Sometimes diffusion seems to have an amazing grasp of how shadows fall on skin under different lighting conditions. Then something reveals the smoke and mirrors.

“Left, right, left, right, there’s none of the enemy left, right?”

The errors are fractal. First you’re counting fingers, then checking to see what side the thumbs are on, then you find yourself carefully following a leg to see if it’s hidden behind the other one or just missing, and suddenly you realize that she has three left arms and two of them are on the right side. Or her wrist is broken because joints can’t do that. Or her legs are twisted 90 degrees to the left without even a slight twist at the hip. Or she’s lying on her stomach with her waist rotated 180 degrees. Or, or, or, …

(at 5 min/pic, my original method of just generating hundreds of gals and doing a very aggressive 5-star deathmatch has kind of broken down)

Honorable mentions

Shoe glue:

When she gets back to her Coppertone ad, remind her to take off the shoes before tanning.

Well, this date’s off to a rocky start…

I am removing all wildcards with the phrase “small birthmark”; whoever tagged the training data for Qwen Image did not know what those words mean. Freckles often turn into a bad rash, too.

Also “crown braids”, sigh.

(“bird’s-eye view” adds literal birds, or just their heads, like sports-team logos floating in mid-air)

What’s with the ropes? The LLM-generated prompts describe certain poses “like she’s pulling on an invisible rope”. I don’t object to the results, but I might move them to a different category…

This is a combination finger fail, perspective fail, and Escher fail, where the right hand simply doesn’t know where the right foot is, and cheerfully puts them both on the same impossible plane to satisfy the “one hand placed on ground” instruction.

I wasn’t expecting a laser there

Apparently neither was she.

Not a physics engine!

…and you were doing so well…

A crown braid without a literal crown, pleasant scenery on the way down, and then her plastic feet melted together.

Comments via Isso

Markdown formatting and simple HTML accepted.

Sometimes you have to double-click to enter text in the form (interaction between Isso and Bootstrap?). Tab is more reliable.