Diversions

(we now resume our regularly scheduled randomblogging, until the next slaughter of innocents by Left-wing domestic terrorists)

Boxxo Or Bust 2, episode 11

This is really not a good week for the show to go grimdark, revealing Our Shoe Fetishist’s bloody, revenge-fueled path. I’m just not going to watch it right now.

Here’s something wholesome instead:

Warner Bros wants to be the Broadcom of streaming

CEO insists HBO Max should be priced like diamonds. Six customers are so much easier to support than six million.

Not a fan

I bought a new unmanaged 10Gb switch, because my existing one had only two 10Gb ports, with the rest being 2.5Gb. Three years ago, the price of a switch with eight 10Gb RJ45 ports was a bit too spicy; now it’s merely annoying.

What really hurts is when a desktop switch has a fan loud enough to be heard from the next room. And I need it to be in the same room. For comparison, I barely notice the Synology NAS that’s on the same desk, even when it’s doing a RAID scrub. For the sake of my sanity and productivity, I guess I’ll be building the switch a padded crib…

New Monitor(s)

The new monitor is an “ASUS ZenScreen Duo OLED 360° Foldable Portable Monitor (MQ149CD)”, which is quite a mouthful, but the TL;DR is that it’s two 1920x1200 HDR panels that can be used separately or together, in portrait or landscape. To use it as two separate monitors, you need two cables, but even with the one-inch gap between the panels, you can still get good use out of it as a single 1920x2400 display.

I’m using the standard 1/4”-20 tripod mount to raise it higher than the kickstand allows, and despite all claims of portability, yeah, you have to plug it into power to drive it properly (one of the three USB-C ports is power-only). Right now I’ve just got it hooked up with HDMI, because my current dock was low on free USB-C ports, but once I get things rearranged I’ll switch to two separate cables, because Lightroom’s window layout is a bit inflexible and the one-inch gap is bad for photo editing. Better to use the application’s “secondary display” support.

This does make me want a vertical 20+ inch 4K HDR portable monitor, though. I just don’t want it to be from a company I’ve never heard of before, which is what’s all over Amazon.

“Shared memory and fancy NPUs are no match for a good graphics card at your side, kid.”

While I’m discussing new toys, I’ll mention that I also picked up a refurbished M4 Pro Mac Mini, which in theory has much faster “AI” performance than my M2 MacBook Air. With 64 GB of RAM, it can run mid-sized offline LLMs at a decent pace, and even do quick image captioning if I downscale raw images to a sensible resolution.
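For the captioning case, “a sensible resolution” just means shrinking each image so its longest edge fits the vision model’s comfortable input size while keeping the aspect ratio. Here is a minimal sketch of that arithmetic, assuming a hypothetical 1024-pixel limit (I haven’t said what size I actually use), and leaving out the raw decoding itself, which needs a separate raw converter:

```python
def fit_within(width: int, height: int, max_edge: int = 1024) -> tuple[int, int]:
    """Return (w, h) scaled so the longer edge is at most max_edge.

    max_edge=1024 is a hypothetical default, not a model requirement.
    Aspect ratio is preserved; images that already fit are never upscaled.
    """
    if width <= 0 or height <= 0:
        raise ValueError("dimensions must be positive")
    longest = max(width, height)
    if longest <= max_edge:
        return width, height  # already small enough
    scale = max_edge / longest
    # Round to whole pixels, never collapsing an edge to zero.
    return max(1, round(width * scale)), max(1, round(height * scale))


if __name__ == "__main__":
    # A 24 MP landscape frame and its portrait counterpart.
    print(fit_within(6000, 4000))
    print(fit_within(4000, 6000))
```

A 6000x4000 frame drops to roughly 4% of its original pixel count, which is why captioning gets quick.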

What it can’t do is more than very basic image generation. It’s not the memory, it’s the cores: not enough of them, and not fast enough. This matches what I’ve heard about the insanely pricey Mac Studio and the merely way-overpriced systems built on the AMD Ryzen AI Max+ 395. Their performance comparisons are based entirely on running models that simply don’t fit into a consumer graphics card’s VRAM. “Our toaster is much faster at running this 48 GB model than a 24 GB Nvidia 4090!”

The Mini is a huge speedup for Lightroom and Photoshop, though, which made it worthwhile for me. Any “AI” playability is a bonus.

(I have never seen a human being hold a camera this way)

Related, “Dear Draw Things programmers”

Come back when you discover the concept of legibility. Dipshits.

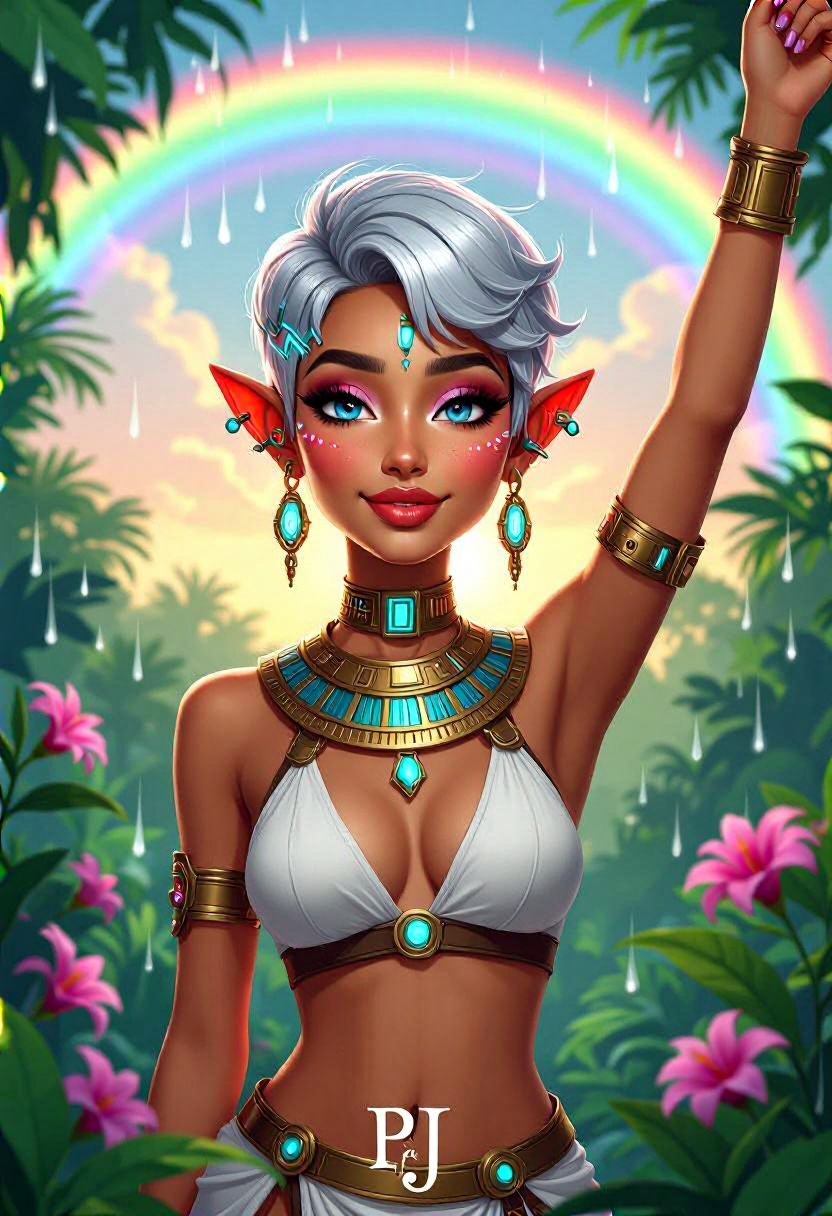

Accidental Disney Princess

I was testing a few more LLM-enhanced dynamic prompts, and one in particular stood out: a series of very consistent images of a fresh-faced young gal who could make Bambi’s woodland friends sit up and beg.

4K resolution, crisp and highly detailed, create an illustration that exudes a high-budget aesthetic. Depict an average height, lovely ethnic Egyptian woman who is 18 years old with a petite figure. She has deep blue eyes, lobed ears, a straight nose, a wide chin, an U-shaped jaw, dimpled cheeks, and a flat forehead. Her heart-shaped face highlights her gentle features. Her skin is healthy and alabaster white, with festive holiday makeup that complements her almond-shaped eyes and full lips. Her hair is steel gray, styled in a cybernetic pixie cut with metallic edges and glowing circuit-like patterns. The woman has a happy expression as she stands with one arm raised, bathed in luminous waves of light. The background features a lush jungle filled with singing flora, glittering with raindrops under the radiant colors of a rainbow sun. Subtle dawn light filters through, creating an aerial perspective with open framing and pastel tones that evoke a gentle awakening. The composition highlights her graceful form against the vibrant greenery, capturing a dreamlike atmosphere.

Sadly, the prompt that worked so well in Qwen Image was… less successful with other models that don’t have an embedded LLM to parse complete sentences sensibly, and failed completely with a few that couldn’t handle the combination of the Beta scheduler and the Heun++ 2 sampler (I may redo those with their defaults).

It’s not that the others all failed; they just didn’t produce consistent characters who looked like my princess and/or lived in her enchanted forest.

HiDream actually made a pretty consistent character, but with elf ears and multiple ear piercings. And random text. And extra fingers.

Omnigen2 was consistent about only one thing: showing titties.

SDVN8 made a bunch of completely different forest princesses without even a touch of cyber. SDVN7 ditto.

Now to see if I can put her elsewhere and make her 3D…

__style/photo__ of __char/cyberprincess__ She has a __mood/_normal__ expression. __pose/sexy__. Her location is __place/_future__. __scene__.

Pretty good, but I didn’t get lucky with the random expression. Qwen Image thinks this is “warm”; to me it looks like typical model deadface. No finger problems, but a rather deformed toe. Still, it kept the costume style pretty consistent despite having no guidance in the prompt. I’m not even sure how I’d describe the outfit details in order to replicate it, except for specifying that I want the version with the bare midriff and the slit skirt; maybe I’ll ask a vision-enabled LLM for fashion tips.

Livening her up

I wasn’t surprised that the expression didn’t work out. The embedded LLM doesn’t make the model any better at figuring out emotional language, so you need to keep it literal. I switched to my mood/happy wildcard, which uses words like smiling, laughing, etc.
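For anyone who hasn’t used dynamic prompts, each __name__ token in the templates above gets swapped for a random entry from a list with that name, and entries can themselves contain more tokens. Here is a minimal sketch of that expansion, assuming an in-memory dictionary (real tools usually read one text file per wildcard, one option per line); the wildcard contents below are hypothetical stand-ins, not my actual files:

```python
import random
import re

# Hypothetical wildcard table; real tools typically load e.g. mood/happy.txt.
WILDCARDS = {
    "mood/happy": ["smiling", "laughing"],
    "pose/casual": ["standing with one arm raised", "sitting on her heels"],
    "scene": ["__mood/happy__ villagers in the background"],  # nested token
}

# Matches __name__ tokens; names may contain letters, digits, "_" and "/".
TOKEN = re.compile(r"__([A-Za-z0-9_/]+?)__")


def expand(template: str, wildcards: dict[str, list[str]],
           rng: random.Random, max_depth: int = 10) -> str:
    """Replace each known __name__ token with a random option.

    Repeats until nothing changes (so nested tokens get expanded) or
    max_depth passes are done. Unknown tokens are left untouched.
    """
    def pick(match: re.Match) -> str:
        options = wildcards.get(match.group(1))
        return rng.choice(options) if options else match.group(0)

    for _ in range(max_depth):
        expanded = TOKEN.sub(pick, template)
        if expanded == template:
            break
        template = expanded
    return template


if __name__ == "__main__":
    rng = random.Random(42)  # seeded so a given prompt can be reproduced
    print(expand("She has a __mood/happy__ expression. __pose/casual__. __scene__.",
                 WILDCARDS, rng))
```

Keeping the mood entries as literal words like these, rather than abstract emotions, is the whole point of the switch.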

Negative heart

Qwen Image is not immune to typical diffusion bleeding; “heart-shaped face” has been adding literal hearts all over the place, so let’s see what happens when I add “heart” to the negative prompt.

Sigh. Yup, still a diffusion model at heart. So to speak.

Back to 2D

__style/illustration__ of __char/cyberprincess__ She has a __mood/happy__ expression. __pose/sexy__. Her location is __place/_future__. __scene__.

Really need to start giving this gal some shoes. Among other things, it hides the terrible twisted toes Qwen is prone to conjuring.

Hey, look, it gave her shoes!

…because the prompt had her “sitting on her heels”, which it interpreted as high heels rather than her own heels.

She might be giants

…and now I want to name my yummy waifu…

Princess Lay-me and Queen Nefertitty are too on the nose, I think. 😁 I might go with Sylphy, since I just named the Mac Mini after the one from Dungeon Chibis; the name can do double duty.

Anime-style came out a bit younger
